With chained DMA, the DMA controller works from a linked list in memory so that a complex chain of DMA requests requires no overhead from remote processors. DMA requests also support
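As a rough illustration of the linked-list idea described above, the sketch below models a chain of transfer descriptors that a controller walks on its own. The `DmaDescriptor` fields and the `run_chain` function are hypothetical names chosen for this example; they are not the CN ASIC's actual descriptor layout or programming interface.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DmaDescriptor:
    """One hypothetical link in the chain: a single block transfer request."""
    src: int                                  # source offset in memory
    dst: int                                  # destination offset in memory
    length: int                               # number of bytes to copy
    next: Optional["DmaDescriptor"] = None    # next request, or None at the end


def run_chain(head: Optional[DmaDescriptor], memory: bytearray) -> int:
    """Walk the chain the way a controller might, with no processor involvement.

    Returns the number of transfers completed.
    """
    completed = 0
    desc = head
    while desc is not None:
        # Copy one block, then follow the link to the next descriptor.
        memory[desc.dst:desc.dst + desc.length] = memory[desc.src:desc.src + desc.length]
        completed += 1
        desc = desc.next
    return completed


# Example: two chained requests set up once, then executed as one chain.
memory = bytearray(range(16)) + bytearray(16)
second = DmaDescriptor(src=4, dst=20, length=4)
first = DmaDescriptor(src=0, dst=16, length=4, next=second)
transfers = run_chain(first, memory)
```

The point of the structure is that the requester builds the whole list once; the loop that follows the `next` links stands in for work the DMA controller would do without further processor overhead.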

Performance Metering Logic

The CN ASIC also provides

RACE++ Switch Fabric Interconnect

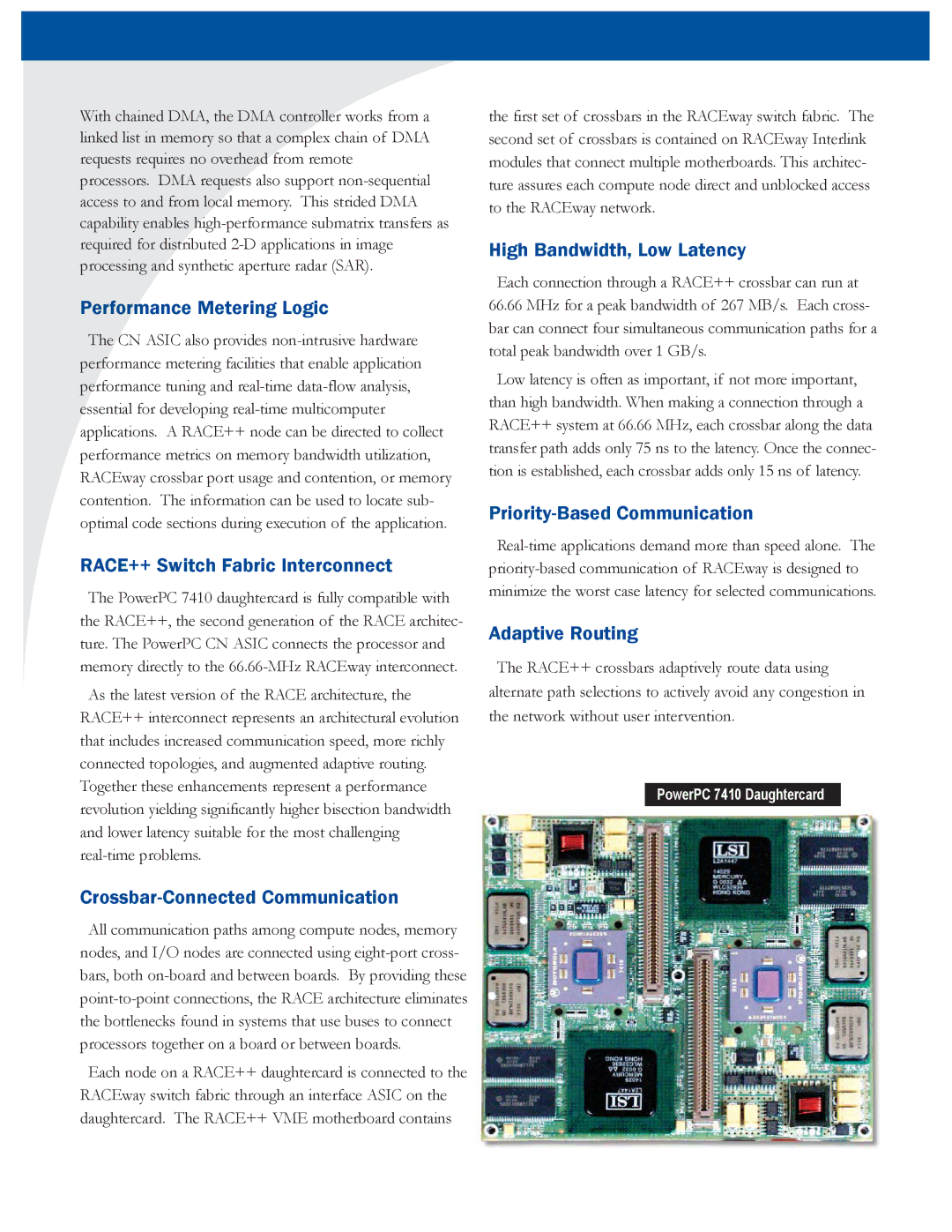

The PowerPC 7410 daughtercard is fully compatible with RACE++, the second generation of the RACE architecture. The PowerPC CN ASIC connects the processor and memory directly to the

As the latest version of the RACE architecture, the RACE++ interconnect represents an architectural evolution that includes increased communication speed, more richly connected topologies, and augmented adaptive routing. Together these enhancements represent a performance revolution yielding significantly higher bisection bandwidth and lower latency suitable for the most challenging

Crossbar-Connected Communication

All communication paths among compute nodes, memory nodes, and I/O nodes are connected using

Each node on a RACE++ daughtercard is connected to the RACEway switch fabric through an interface ASIC on the daughtercard. The RACE++ VME motherboard contains the first set of crossbars in the RACEway switch fabric. The second set of crossbars is contained on RACEway Interlink modules that connect multiple motherboards. This architecture assures each compute node direct and unblocked access to the RACEway network.

High Bandwidth, Low Latency

Each connection through a RACE++ crossbar can run at 66.66 MHz for a peak bandwidth of 267 MB/s. Each crossbar can connect four simultaneous communication paths for a total peak bandwidth over 1 GB/s.
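The peak figures quoted here can be checked with a short calculation. The sketch below assumes 4 bytes are transferred per clock (a 32-bit data path); that width is not stated in the text and is inferred only because it reproduces the 267 MB/s figure. The function names are illustrative.

```python
CLOCK_MHZ = 66.66           # crossbar clock rate, from the text
BYTES_PER_CLOCK = 4         # ASSUMPTION: 32-bit data path, not stated in the text
PATHS_PER_CROSSBAR = 4      # simultaneous paths per crossbar, from the text


def peak_path_bandwidth_mb_s(clock_mhz: float = CLOCK_MHZ,
                             bytes_per_clock: int = BYTES_PER_CLOCK) -> float:
    """Peak bandwidth of one connection in MB/s (MHz times bytes per clock)."""
    return clock_mhz * bytes_per_clock


def peak_crossbar_bandwidth_mb_s(paths: int = PATHS_PER_CROSSBAR) -> float:
    """Aggregate peak bandwidth of one crossbar with all paths active, in MB/s."""
    return paths * peak_path_bandwidth_mb_s()


per_path = peak_path_bandwidth_mb_s()        # about 266.6 MB/s, quoted as 267 MB/s
per_crossbar = peak_crossbar_bandwidth_mb_s()  # about 1066 MB/s, i.e. over 1 GB/s
```

These are peak rates only; sustained throughput depends on factors the text does not quantify.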

Low latency is often as important as high bandwidth, if not more so. When making a connection through a RACE++ system at 66.66 MHz, each crossbar along the data transfer path adds only 75 ns to the latency. Once the connection is established, each crossbar adds only 15 ns of latency.
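To make the per-crossbar figures concrete, the sketch below adds up the crossbar contribution to latency for a path of a given length. The path length of three crossbars is a hypothetical example, and the result covers only the crossbars themselves; any latency at the endpoints is outside what the text specifies.

```python
SETUP_NS_PER_CROSSBAR = 75         # added per crossbar while connecting, from the text
ESTABLISHED_NS_PER_CROSSBAR = 15   # added per crossbar once connected, from the text


def setup_latency_ns(crossbars: int) -> int:
    """Crossbar contribution to latency while the connection is being made."""
    if crossbars < 0:
        raise ValueError("crossbars must be non-negative")
    return crossbars * SETUP_NS_PER_CROSSBAR


def established_latency_ns(crossbars: int) -> int:
    """Crossbar contribution to latency once the connection is established."""
    if crossbars < 0:
        raise ValueError("crossbars must be non-negative")
    return crossbars * ESTABLISHED_NS_PER_CROSSBAR


# Hypothetical path that crosses three crossbars.
hops = 3
connect_ns = setup_latency_ns(hops)          # 3 * 75 = 225 ns
steady_ns = established_latency_ns(hops)     # 3 * 15 = 45 ns
```

Because both costs scale linearly with the number of crossbars traversed, shorter paths through the fabric benefit both connection setup and steady-state transfer.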

Priority-Based Communication

Adaptive Routing

The RACE++ crossbars adaptively route data using alternate path selections to actively avoid any congestion in the network without user intervention.

PowerPC 7410 Daughtercard