The overhead of maintaining the file cache can impact the performance of large parallel applications. Much of this overhead comes from the sync() system call, which the syncd daemon runs every minute by default. Each sync() scans all of the pages in the file cache to find pages that have been modified since the last sync() and therefore must be written to disk. These delays affect larger parallel applications more severely, and applications with frequent synchronizing collective calls (such as MPI_ALLTOALL or MPI_BARRIER) are affected the most, because a synchronizing operation like MPI_ALLTOALL can complete only after the slowest task involved has reached it. Unless an effort is made to synchronize the sync daemons across a cluster, sync() runs at different times on each LPAR. If the time between the application's synchronizing operations is not large compared to the time required for a sync(), the random sync() delays on many LPARs can slow the entire application. To address this problem, tune the file cache to reduce the amount of work each sync() must do.
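Why LPAR count matters can be seen with a simple probability estimate. The sketch below uses a hypothetical model, not a measurement: each LPAR's syncd fires once per interval at an independent, uniformly random phase, and only sync() starts that fall inside one compute step between two synchronizing collectives are counted (the duration of sync() itself is ignored). Because the collective waits for the slowest task, a single hit on any LPAR delays every task.

```python
def prob_step_delayed(n_lpars: int, step_seconds: float,
                      sync_interval: float = 60.0) -> float:
    """Probability that at least one of n_lpars starts a sync() during
    one compute step of length step_seconds.

    Hypothetical model: each LPAR's syncd fires once every sync_interval
    seconds at an independent, uniformly random phase (no coordination
    between LPARs).
    """
    if n_lpars < 1:
        raise ValueError("need at least one LPAR")
    if step_seconds >= sync_interval:
        return 1.0  # every LPAR starts a sync() at least once per step
    p_single = step_seconds / sync_interval  # one LPAR's chance of a hit
    # The collective completes only when the slowest task arrives, so a
    # hit on any one LPAR delays the whole step.
    return 1.0 - (1.0 - p_single) ** n_lpars


if __name__ == "__main__":
    # Hypothetical workload: 0.1 s of computation between barriers.
    for n in (1, 16, 64, 256, 1024):
        print(f"{n:5d} LPARs: {prob_step_delayed(n, 0.1):.3f}")
```

Under these assumptions the chance that a step is delayed grows quickly with the number of LPARs, which is consistent with the observation that large applications with frequent collectives suffer the most.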

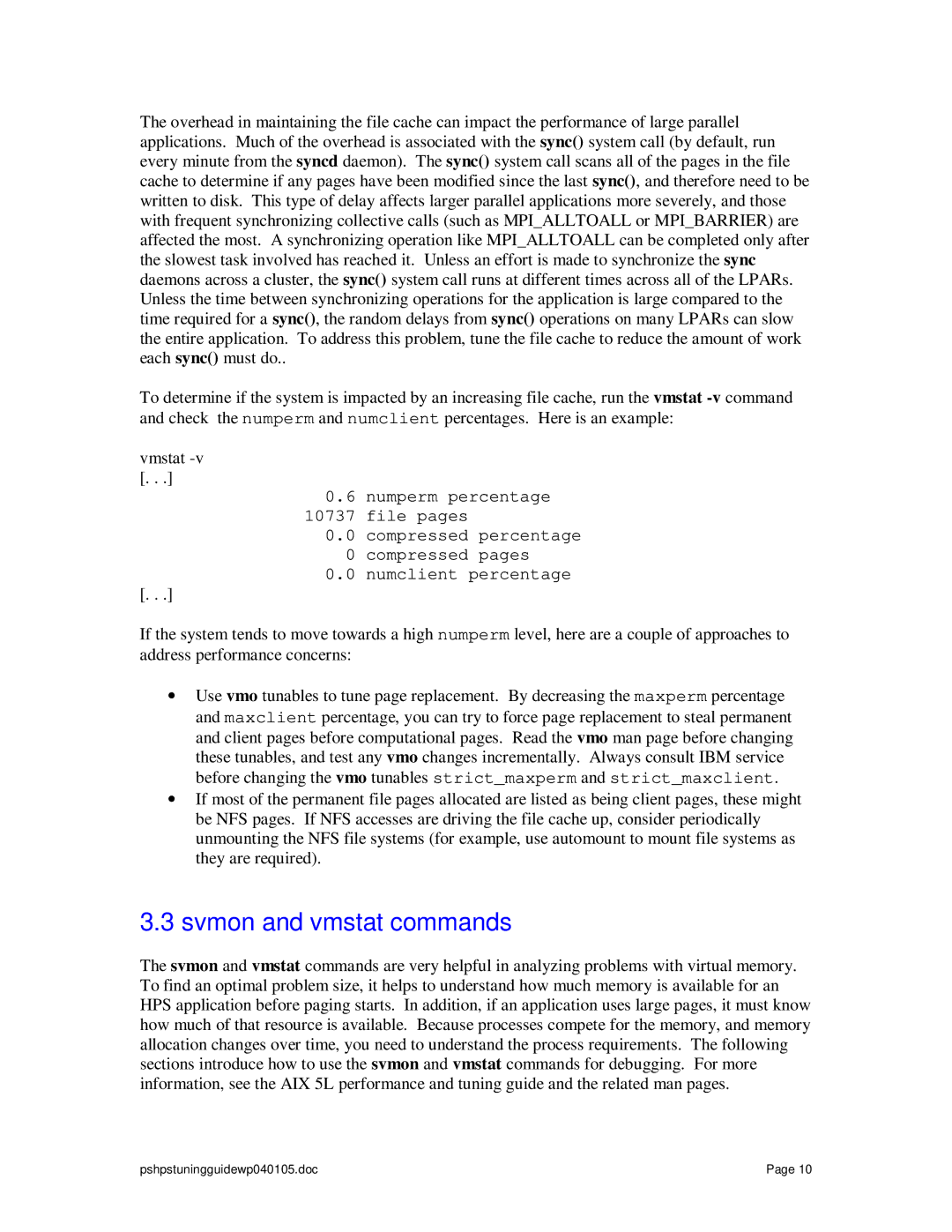

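To determine whether the system is affected by a growing file cache, run the vmstat -v command and check the numperm percentage, which reports the share of memory used by the file cache:

    vmstat -v
              0.6 numperm percentage
            10737 file pages
              0.0 compressed percentage
                0 compressed pages
              0.0 numclient percentage
    [. . .]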
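On a large cluster it can help to collect this value programmatically rather than by eye. The following Python sketch parses output in the layout shown above, one "value label" pair per line, into a dictionary; it assumes the same layout holds for the other vmstat -v statistics. read_vmstat_v() is a hypothetical helper that must run on the AIX LPAR itself, and the sample string simply reuses the values above.

```python
import re
import subprocess

# Each statistic line in the sample output is "<number> <label>".
_STAT_LINE = re.compile(r"^\s*(\d+(?:\.\d+)?)\s+(\S.*?)\s*$")


def parse_vmstat_v(text: str) -> dict:
    """Map each vmstat -v label (e.g. 'numperm percentage') to its value.

    Lines that do not start with a number, such as the command line or
    an elision marker like '[. . .]', are skipped.
    """
    stats = {}
    for line in text.splitlines():
        match = _STAT_LINE.match(line)
        if match:
            stats[match.group(2)] = float(match.group(1))
    return stats


def read_vmstat_v() -> dict:
    """Hypothetical helper: run vmstat -v on this AIX LPAR and parse it."""
    result = subprocess.run(["vmstat", "-v"], capture_output=True,
                            text=True, check=True)
    return parse_vmstat_v(result.stdout)


SAMPLE = """\
          0.6 numperm percentage
        10737 file pages
          0.0 compressed percentage
            0 compressed pages
          0.0 numclient percentage
"""

if __name__ == "__main__":
    stats = parse_vmstat_v(SAMPLE)
    print(stats["numperm percentage"], stats["numclient percentage"])
```

Running read_vmstat_v() periodically on each LPAR and logging numperm percentage is one way to see whether the file cache trends upward over time.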

If the system tends to move towards a high numperm level, here are a couple of approaches to address performance concerns:

• Use vmo tunables to tune page replacement. By decreasing the maxperm percentage and maxclient percentage, you can try to force page replacement to steal permanent and client pages before computational pages. Read the vmo man page before changing these tunables, and test any vmo changes incrementally. Always consult IBM service before changing the vmo tunables strict_maxperm and strict_maxclient.

• If most of the allocated permanent file pages are listed as client pages, they might be NFS pages. If NFS accesses are driving up the file cache, consider periodically unmounting the NFS file systems (for example, use automount to mount file systems only when they are needed).
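The two approaches above can be framed as a small decision helper. This is a hypothetical sketch, not an IBM procedure: the 5-point step, the 20 percent floor, and the 50 percent client-page threshold are illustrative assumptions. It only builds vmo command lines for an administrator to review and never runs them, and it deliberately leaves strict_maxperm and strict_maxclient alone. It lowers maxclient% before maxperm% and never sets maxclient% above maxperm%, on the assumption that vmo rejects a maxclient% value larger than maxperm%.

```python
def next_vmo_step(maxperm: int, maxclient: int,
                  step: int = 5, floor: int = 20) -> list:
    """Build (but do not run) vmo commands that lower maxclient% and
    maxperm% by one small increment, so changes can be tested one step
    at a time.

    step and floor are hypothetical values chosen for illustration.
    """
    new_maxperm = max(floor, maxperm - step)
    # Keep maxclient% at or below the new maxperm% (assumed vmo rule).
    new_maxclient = min(new_maxperm, max(floor, maxclient - step))
    return [
        ["vmo", "-o", f"maxclient%={new_maxclient}"],  # lowered first
        ["vmo", "-o", f"maxperm%={new_maxperm}"],
    ]


def client_pages_dominate(file_pages: int, client_pages: int,
                          threshold: float = 0.5) -> bool:
    """True if client pages exceed `threshold` of all file pages, which
    the text suggests may indicate NFS activity driving the file cache."""
    if file_pages <= 0:
        return False
    return client_pages / file_pages > threshold


if __name__ == "__main__":
    for cmd in next_vmo_step(maxperm=80, maxclient=80):
        print(" ".join(cmd))
    # Hypothetical page counts, for illustration only.
    print(client_pages_dominate(file_pages=200000, client_pages=150000))
```

Returning commands instead of executing them keeps each change visible, in line with the advice to read the vmo man page and test changes incrementally.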

3.3 svmon and vmstat commands

The svmon and vmstat commands are very helpful in analyzing virtual memory problems. To find an optimal problem size, it helps to understand how much memory is available to an HPS application before paging starts. In addition, if an application uses large pages, you must know how much of that resource is available. Because processes compete for memory, and memory allocation changes over time, you need to understand each process's requirements. The following sections show how to use the svmon and vmstat commands for debugging. For more information, see the AIX 5L performance and tuning guide and the related man pages.

pshpstuningguidewp040105.doc | Page 10 |