InfiniPath User Guide

InfiniPath User Guide Version

Index


Table of Contents

Section Using InfiniPath MPI

Appendix A Benchmark Programs


Appendix D Recommended Reading

InfiniPath Software Structure

InfiniPath User Guide Version IB6054601-00 D

Who Should Read this Guide

How this Guide is Organized

Interoperability

Switches

Overview

Appendix E Glossary of Technical Terms

What’s New in this Release

PathScale-QLogic Adapter Model Numbers

Supported Distributions and Kernels

InfiniPath/OpenFabrics Supported Distributions and Kernels

Software Components

This guide uses these typographical conventions:

Conventions Used in this Document

Documentation and Technical Support

InfiniPath product documentation includes:

Introduction Documentation and Technical Support


Installed Layout

Introduction

Memory Footprint

MPI include files are

Documentation can be found

Adapter Component | Required/Optional | Memory Footprint | Comment

Configuration and Startup: BIOS Settings

Memory Footprint, 331 MB per Node

InfiniPath Driver Software Configuration

InfiniPath Driver Startup

InfiniPath Driver Filesystem

Subnet Management Agent

Layered Ethernet Driver

ipath_ether Configuration on Fedora and RHEL4

$ ifconfig -a
$ ls /sys/class/net

Create or edit the following file as root:

ipath_ether Configuration on SUSE 9.3, SLES 9, and SLES

ONBOOT=YES

If this file does not exist, skip to Step

Determine the MAC address that will be used.

The GUID can also be returned by running:

# lsmod | grep -q ipath_ether || modprobe ipath_ether

Add the following lines to the file:

# ifup eth2
# ifconfig eth2

OpenFabrics Configuration and Startup

Configuring the IPoIB Network Interface

# ifconfig ib0 10.1.17.3 netmask 0xffffff00
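The netmask in the ifconfig command above is given in hexadecimal. 0xffffff00 is the familiar 255.255.255.0, a 24-bit prefix, so this command places 10.1.17.3 on the 10.1.17.0/24 network. Below is a minimal C sketch of that conversion. netmask_prefix_len and ipv4_to_dotted are hypothetical helper names and are not part of the InfiniPath software.

```c
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Return the prefix length of a contiguous IPv4 netmask given as a
 * host-order integer (0xffffff00 -> 24), or -1 if the set bits are
 * not contiguous from the most significant bit. */
int netmask_prefix_len(uint32_t mask)
{
    int len = 0;
    while (len < 32 && (mask & (UINT32_C(1) << (31 - len))))
        len++;
    /* Every bit below the prefix must be zero for a valid netmask;
     * len == 0 is special-cased because a 32-bit shift is undefined. */
    uint32_t expected = (len == 0) ? 0 : (UINT32_MAX << (32 - len));
    return (mask == expected) ? len : -1;
}

/* Format a host-order IPv4 address or mask in dotted-decimal form.
 * "255.255.255.255" plus the terminating NUL fits in 16 bytes. */
void ipv4_to_dotted(uint32_t addr, char out[16])
{
    snprintf(out, 16, "%u.%u.%u.%u",
             (unsigned)(addr >> 24) & 0xffu, (unsigned)(addr >> 16) & 0xffu,
             (unsigned)(addr >> 8) & 0xffu, (unsigned)addr & 0xffu);
}
```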

OpenSM

Starting and Stopping the InfiniPath Software

Further Information on Configuring and Loading Drivers

To enable the driver, use this command as root:

You can stop it again like this:

# /etc/init.d/infinipath [start | stop | restart]

# chkconfig infinipath off

# /sbin/chkconfig --list opensmd | grep -w on

$ /sbin/lsmod | grep ipath_ether

Configuring ssh and sshd Using shosts.equiv

Software Status

Change the file to mode 600 when finished editing
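If you script this step, the POSIX chmod() call does the same job as running chmod 600 at the shell. Below is a minimal sketch. make_owner_only is a hypothetical helper that applies the mode and then confirms it with stat().

```c
#define _POSIX_C_SOURCE 200809L
#include <stdio.h>
#include <stdlib.h>
#include <sys/stat.h>
#include <unistd.h>

/* Restrict a file to owner read/write (mode 600), then confirm the
 * result with stat(). Returns 0 on success, -1 on any failure. */
int make_owner_only(const char *path)
{
    struct stat st;

    if (chmod(path, S_IRUSR | S_IWUSR) != 0) {
        perror("chmod");
        return -1;
    }
    if (stat(path, &st) != 0) {
        perror("stat");
        return -1;
    }
    /* Compare only the permission bits, not the file-type bits. */
    return ((st.st_mode & 0777) == 0600) ? 0 : -1;
}
```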

Performance and Management Tips

Remove Unneeded Services

Process Limitation with ssh

# killall -HUP sshd

Disable Powersaving Features

SDP Module Parameters for Best Performance

Balanced Processor Power

For SUSE 9.3 and 10.0, run this command as root:

CPU Affinity

Run the shell script ipath_control as follows:

Hyper-Threading

Homogeneous Nodes

ipathbug-helper

Option -q will query and -qa will query all

Non-QLogic-built

For non-QLogic RPMs, it will look like:

Command strings can also be used. Here is a sample:

Customer Acceptance Utility

This will produce output like the following:

Displays help messages giving defined usage


InfiniPath MPI

Other MPI Implementations

Getting Started with MPI

$ cp /usr/share/mpich/examples/basic

An Example C Program

There is more information on the mpihosts file in section

$ mpicc -o cpi cpi.c
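The classic MPICH cpi.c example computes pi by integrating 4/(1+x^2) over [0,1] with the midpoint rule, dealing the intervals out cyclically across ranks. In the MPI version, rank 0 broadcasts the interval count with MPI_Bcast, and the per-rank partial sums are combined with MPI_Reduce. The sketch below assumes that structure. It replaces the reduction with a plain loop so that it compiles without mpi.h. The function names are hypothetical.

```c
/* Integrand whose integral over [0, 1] equals pi: 4 / (1 + x^2). */
static double cpi_integrand(double x)
{
    return 4.0 / (1.0 + x * x);
}

/* Midpoint-rule partial sum computed by one rank. Intervals are dealt
 * out cyclically: rank r handles intervals r+1, r+1+nprocs, ... */
double cpi_partial(int n, int rank, int nprocs)
{
    double h = 1.0 / (double)n;
    double sum = 0.0;
    for (int i = rank + 1; i <= n; i += nprocs)
        sum += cpi_integrand(h * ((double)i - 0.5));
    return h * sum;
}

/* Stand-in for MPI_Reduce(..., MPI_SUM, 0, ...): add every rank's
 * partial result, as rank 0 would receive it in the real program. */
double cpi_serial_reduce(int n, int nprocs)
{
    double pi = 0.0;
    for (int rank = 0; rank < nprocs; rank++)
        pi += cpi_partial(n, rank, nprocs);
    return pi;
}
```

Because each rank only sums its own slice, the result does not depend on the number of ranks, apart from floating-point rounding.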

$ mpif77 -o fpi3 fpi3.f

Examples Using Other Languages

Run it with:

$ mpirun -np 2 -m mpihosts ./fpi3

Configuring MPI Programs for InfiniPath MPI

Run it

Configuring for ssh Using ssh-agent

InfiniPath MPI Details

Edit ~/.ssh/config so that it reads like this:

This tells ssh that your key pair should let you

Compiling and Linking

Gets verbose output of all the commands in the script

Shows how to compile a program

Provides help

To use gcc for compiling and linking Fortran 77 programs, use:

To Use Another Compiler

To use gcc for compiling and linking C++ programs, use:

To use PGI for Fortran 90/Fortran 95 programs, use:

$ mpif90 -f90=ifort
$ mpif95 -f95=ifort

Cross-compilation Issues

Compiler and Linker Variables

$ mpif90 -f90=pgf90 -show pi3f90.f90 -o pi3f90

Running MPI Programs

Mpihosts File

Console I/O in MPI Programs

Environment for Node Programs

Environment for Multiple Versions of InfiniPath or MPI

$ mpirun -np n -m mpihosts -rcfile mpirunrc program

Create up to the specified number of processes per node

Multiprocessor Nodes

Number of processes to spawn

$ mpirun -np n -m mpihosts -ppn p program-name

Startup script for setting environment on nodes Default

Print MPI version. Default: Off

Print mpirun help message. Default: Off

Run each process in an xterm window. Default: Off

-disable-mpi-progress-check

Sets the working directory for the node program

Using Other MPI Implementations

MPI Over uDAPL

# modprobe rdma_cm
# modprobe rdma_ucm

MPD Description

Using MPD

$ mpdboot -f hostsfile

File I/O in MPI

MPI-IO with ROMIO

InfiniPath MPI and Hybrid MPI/OpenMP Applications

MPI Errors

Using Debuggers

Debugging MPI Programs

InfiniPath MPI Limitations

No ports available on /dev/ipath

Benchmark Programs

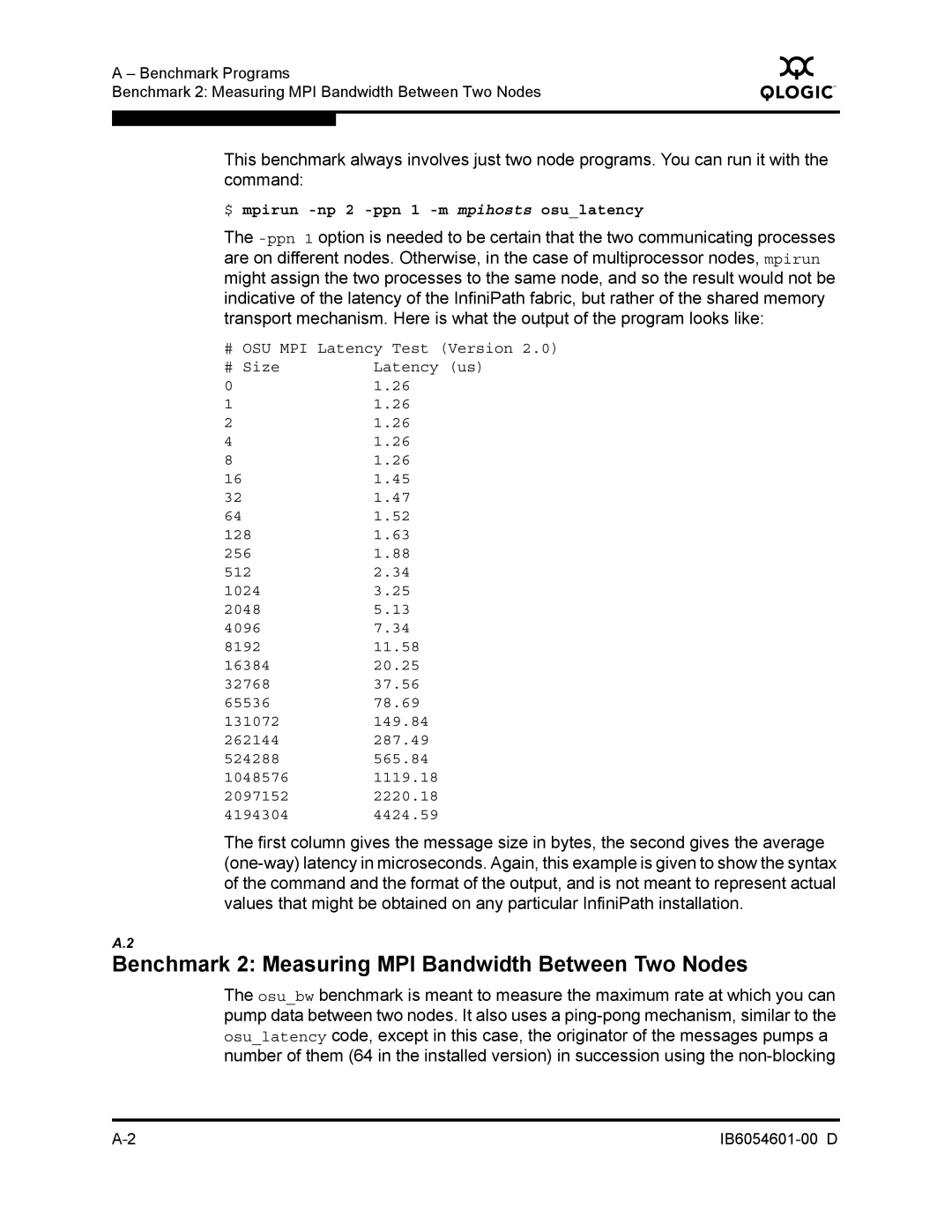

Benchmark 1 Measuring MPI Latency Between Two Nodes

Benchmark 2 Measuring MPI Bandwidth Between Two Nodes

$ mpirun -np 2 -ppn 1 -m mpihosts osu_latency
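Ping-pong benchmarks such as osu_latency time many round trips between two ranks. They report one-way latency as half the average round-trip time. Below is a sketch of that arithmetic; one_way_latency_us is a hypothetical name.

```c
/* One-way latency in microseconds from a ping-pong timing. Each
 * iteration is a full round trip (send plus reply), so the one-way
 * figure is half the average round-trip time. Returns -1.0 if the
 * iteration count is not positive. */
double one_way_latency_us(double elapsed_seconds, long iterations)
{
    if (iterations <= 0)
        return -1.0;
    return elapsed_seconds * 1.0e6 / (2.0 * (double)iterations);
}
```

For example, 1000 round trips taking 2 ms in total correspond to a one-way latency of about 1 microsecond.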

Benchmark 3 Messaging Rate Microbenchmarks

$ mpirun -np 8 ./mpi_multibw

Benchmark 4 Measuring MPI Latency in Host Rings

This might produce output like the following:

$ mpirun -np 4 -ppn 1 -m mpihosts mpi_latency 100


Batch Queuing Script

Allocating Resources

Generating the mpihosts File
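This sketch assumes the simplest mpihosts layout, one host name per line. Under that assumption, generating the file from a list of allocated nodes is just a matter of writing each node on its own line. format_mpihosts and the host names below are hypothetical. Check the mpihosts file description for any per-host options your version supports.

```c
#include <stdio.h>
#include <string.h>

/* Format a list of host names as an mpihosts file body, one host per
 * line. Returns the number of bytes written (excluding the final NUL),
 * or -1 if the buffer is too small to hold every line. */
int format_mpihosts(char *buf, size_t cap, const char *const hosts[], size_t nhosts)
{
    size_t used = 0;

    if (cap == 0)
        return -1;
    buf[0] = '\0';
    for (size_t i = 0; i < nhosts; i++) {
        int w = snprintf(buf + used, cap - used, "%s\n", hosts[i]);
        if (w < 0 || (size_t)w >= cap - used)
            return -1;          /* truncated: caller must supply more room */
        used += (size_t)w;
    }
    return (int)used;
}
```

Writing the returned buffer to a file named mpihosts gives a file that can be passed to mpirun with -m.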

$ batch_mpirun -np n mympiprogram

# /sbin/fuser -v /dev/ipath

Clean Termination of MPI Processes

Simple Process Management

# lsof /dev/ipath
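fuser and lsof find the processes holding /dev/ipath open by inspecting each process's file descriptors. Below is a rough C equivalent. It assumes the Linux /proc layout, in which /proc/<pid>/fd/<n> is a symbolic link to the open file. count_open_handles is a hypothetical name. Processes the caller is not allowed to inspect are skipped, which is why these commands are run as root.

```c
#define _POSIX_C_SOURCE 200809L
#include <ctype.h>
#include <dirent.h>
#include <limits.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>

/* Count open file descriptors, across all readable processes, whose
 * /proc/<pid>/fd/<n> link points at target. Returns -1 if /proc
 * cannot be read. */
int count_open_handles(const char *target)
{
    DIR *proc = opendir("/proc");
    if (!proc)
        return -1;

    int count = 0;
    struct dirent *pe;
    while ((pe = readdir(proc)) != NULL) {
        if (!isdigit((unsigned char)pe->d_name[0]))
            continue;                      /* not a PID directory */

        char fddir[PATH_MAX];
        snprintf(fddir, sizeof fddir, "/proc/%s/fd", pe->d_name);
        DIR *fds = opendir(fddir);
        if (!fds)
            continue;                      /* exited or permission denied */

        struct dirent *fe;
        while ((fe = readdir(fds)) != NULL) {
            if (fe->d_name[0] == '.')
                continue;                  /* skip "." and ".." */
            char fdpath[PATH_MAX], dest[PATH_MAX];
            snprintf(fdpath, sizeof fdpath, "%s/%s", fddir, fe->d_name);
            ssize_t len = readlink(fdpath, dest, sizeof dest - 1);
            if (len < 0)
                continue;
            dest[len] = '\0';              /* readlink does not terminate */
            if (strcmp(dest, target) == 0)
                count++;
        }
        closedir(fds);
    }
    closedir(proc);
    return count;
}
```

A nonzero result for /dev/ipath means some process still has the device open.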

Lock Enough Memory on Nodes When Using Slurm

# /sbin/fuser -k /dev/ipath

# ulimit -l
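ulimit -l reports the locked-memory limit of your current shell. An MPI process launched through a batch system, however, inherits its limit from whatever started it. A process can check its own soft limit directly with getrlimit(RLIMIT_MEMLOCK). This sketch reports the limit in the same kilobyte units that bash's ulimit -l uses; memlock_limit_kb is a hypothetical name.

```c
#include <sys/resource.h>

/* Report the soft RLIMIT_MEMLOCK limit in KiB, the unit ulimit -l
 * prints in bash. Sets *kb to -1 when the limit is unlimited.
 * Returns 0 on success, -1 if getrlimit() fails. */
int memlock_limit_kb(long long *kb)
{
    struct rlimit rl;

    if (getrlimit(RLIMIT_MEMLOCK, &rl) != 0)
        return -1;
    if (rl.rlim_cur == RLIM_INFINITY)
        *kb = -1;
    else
        *kb = (long long)(rl.rlim_cur / 1024);
    return 0;
}
```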

Troubleshooting InfiniPath Adapter Installation

Mechanical and Electrical Considerations

Table C-1. LED Link and Data Indicators

Some HTX Motherboards May Need 2 or More CPUs in Use

MTRR Mapping and Write Combining

Incorrect MTRR Mapping

$ ipath_pkt_test -B

Incorrect MTRR Mapping Causes Unexpectedly Low Bandwidth

Change Setting for Mapping Memory

Issue with SuperMicro H8DCE-HTe and QHT7040

To check your bandwidth, try:

mpirun Installation Requires 32-bit Support

Software Installation Issues

Install Warning with RHEL4U2

OpenFabrics Dependencies

Check your distribution for the exact RPM name

Installing Newer Drivers from Other Distributions

Remove the InfiniPath kernel components with the command:

$ rpm -e infinipath-kernel --nodeps

Reload all modules by using this command as root:

Installing for Your Distribution

Kernel and Initialization Issues

These are used for SLES, SUSE, and Fedora

Kernel Needs CONFIG_PCI_MSI=y
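On many distributions, the configuration of the running kernel is installed as /boot/config-<version>. Checking this requirement therefore amounts to looking for the exact line CONFIG_PCI_MSI=y. Below is a minimal sketch that takes the file's text as a string; kernel_config_has_pci_msi is a hypothetical name. A "# CONFIG_PCI_MSI is not set" line does not satisfy the requirement.

```c
#include <string.h>

/* Return 1 if config (the text of a kernel .config file) contains
 * the exact line CONFIG_PCI_MSI=y, 0 otherwise. Commented-out lines
 * such as "# CONFIG_PCI_MSI is not set" do not count. */
int kernel_config_has_pci_msi(const char *config)
{
    static const char want[] = "CONFIG_PCI_MSI=y";
    const size_t wlen = sizeof want - 1;
    const char *line = config;

    while (line && *line) {
        const char *end = strchr(line, '\n');
        size_t len = end ? (size_t)(end - line) : strlen(line);
        if (len == wlen && memcmp(line, want, wlen) == 0)
            return 1;
        line = end ? end + 1 : NULL;       /* advance to the next line */
    }
    return 0;
}
```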

pci_msi_quirk

Normal output will look similar to this:

Driver Load Fails Due to Unsupported Kernel

InfiniPath Interrupts Not Working

$ grep ib_ipath /proc/interrupts
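The grep command above picks out the lines of /proc/interrupts that mention ib_ipath. If none appear, no interrupt is registered for the driver. Below is the same check as a small C function that counts matching lines the way grep -c does; count_matching_lines is a hypothetical name.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Count lines of path that contain needle, like grep -c.
 * Returns -1 if the file cannot be opened. */
int count_matching_lines(const char *path, const char *needle)
{
    FILE *fp = fopen(path, "r");
    if (!fp)
        return -1;

    char *line = NULL;
    size_t cap = 0;
    int count = 0;
    /* getline() grows the buffer as needed, so the wide per-CPU rows
     * of /proc/interrupts are never split across reads. */
    while (getline(&line, &cap, fp) != -1)
        if (strstr(line, needle))
            count++;

    free(line);
    fclose(fp);
    return count;
}
```

Calling count_matching_lines("/proc/interrupts", "ib_ipath") corresponds to the grep command above.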

OpenFabrics Load Errors If ib_ipath Driver Load Fails

$ grep -i acpi /proc/cmdline
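/proc/cmdline holds the parameters the running kernel was booted with, all on a single line. grep -i acpi therefore prints the whole line if any parameter mentions ACPI (for example acpi=off). Below is a small sketch that instead counts the individual parameters that match. count_acpi_params is a hypothetical helper. It takes the command-line text as a string so that it can be tested without /proc.

```c
#include <ctype.h>
#include <stddef.h>

/* Case-insensitive test of whether tok (length len) contains "acpi". */
static int token_mentions_acpi(const char *tok, size_t len)
{
    static const char pat[] = "acpi";
    const size_t plen = sizeof pat - 1;

    for (size_t i = 0; i + plen <= len; i++) {
        size_t k = 0;
        while (k < plen && tolower((unsigned char)tok[i + k]) == pat[k])
            k++;
        if (k == plen)
            return 1;
    }
    return 0;
}

/* Count whitespace-separated kernel parameters in cmdline that mention
 * ACPI in any letter case, mirroring grep -i acpi but reporting each
 * matching parameter rather than the whole line. */
int count_acpi_params(const char *cmdline)
{
    int count = 0;
    const char *p = cmdline;

    while (*p) {
        while (*p && isspace((unsigned char)*p))
            p++;                           /* skip separators */
        const char *start = p;
        while (*p && !isspace((unsigned char)*p))
            p++;                           /* consume one parameter */
        if (p > start && token_mentions_acpi(start, (size_t)(p - start)))
            count++;
    }
    return count;
}
```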

MPI Job Failures Due to Initialization Problems

InfiniPath ib_ipath Initialization Failure

System Administration Troubleshooting

OpenFabrics Issues

Load and Configure IPoIB Before Loading SDP

Stop OpenSM Before Stopping/Restarting InfiniPath

InfiniPath MPI Troubleshooting

Performance Issues

MVAPICH Performance Issues

Broken Intermediate Link

$ mpirun

$ mpirun-ipath-ssh -np 2 -ppn 1 -m ~/tmp/idev osu_latency

$ export MPICH_CC=gcc
$ mpicc mpiworld.c

Compiler/Linker Mismatch

Compiler Can’t Find Include, Module or Library Files

$ mpicc myprogram.c

$ mpicc myprogram.c -I/path/to/devel/include

Compiling on Development Nodes

Specifying the Run-time Library Path

$ mpif90 myprogramf90.f90 -I/path/to/devel/include

Run Time Errors With Different MPI Implementations

# /sbin/ldconfig

Troubleshooting InfiniPath MPI Troubleshooting

Using MPI.mod Files

When compiling, use descriptive names for the object files

HP-MPI mpirun and executable used together

Extending MPI Modules


Error Messages Generated by mpirun

Messages from the InfiniPath Library

$ mpirun -m ~/tmp/sm -np 2 -mpi_latency 1000


MPI Messages

The following indicates an unknown host:

There is no route to a valid host

$ mpirun -np 2 -m ~/tmp/q mpi_latency 100

There is no route to any host

One program on one node died

$ mpirun -np 2 -m ~/tmp/q mpi_latency 100000

Driver and Link Error Messages Reported by MPI Programs

$ mpirun -np ~/tmp/q -q 60 mpi_latency 1000000

MPI Stats

Check Cluster Homogeneity with ipath_checkout

These two commands perform the same functions

Useful Programs and Files for Debugging

Restarting InfiniPath

Summary of Useful Programs and Files

Shell script that gathers status

Example contents are:

modprobe

ibstatus

ipath_checkout

ipath_control

ipathbug-helper

# lsmod | egrep 'ipath_|ib_|rdma_|findex'

To check the contents of an RPM, use these commands:

Option -q will query and -qa will query all

$ mpirun -np 2 -m /tmp/id1 -d0x101 mpi_latency 1

Table C-3. status_str File

Command strings can also be used. Its format is as follows:

Example contents for QLogic-built drivers:

Reference and Source for Slurm

References for MPI

Books for Learning MPI Programming

InfiniBand OpenFabrics

Clusters

Rocks

Glossary

GID

LID

MTRR

SDP

Glossary IB6054601-00 D

Index

Configuration of on SUSE and SLES

OpenFabrics Configuration