SMP Configuration Options

BladeSymphony 1000 supports two-socket (four-core) Intel Itanium Server Blades. A single chassis can be configured as up to two 16-core servers, as eight four-core servers, or as a mixture of SMP and single-module systems, which reduces footprint and power consumption while increasing utilization and flexibility. SMP provides higher performance for applications that can use large memory and multiple processors, such as large databases or visualization applications.
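To make the mixture of SMP and single-module systems concrete, the following sketch enumerates the ways a fully populated chassis could be divided into servers. It assumes eight blade slots (from the "eight four-core servers" figure above) and SMP groups of one, two, or four blades (taken from the 4-way, 8-way, and 16-way labels in Figure 7). The function name `chassis_layouts` is hypothetical, and the sketch ignores any slot-adjacency rules the hardware may impose.

```python
CORES_PER_BLADE = 4  # two dual-core sockets per blade, per the text


def chassis_layouts(slots=8, group_sizes=(4, 2, 1)):
    """Return every combination of SMP group sizes that exactly fills the slots.

    Each layout is a tuple of blade counts in non-increasing order, so the
    same combination is never listed twice. The group sizes are an assumption.
    """
    sizes = sorted(group_sizes, reverse=True)
    layouts = []

    def fill(remaining, max_size, current):
        if remaining == 0:
            layouts.append(tuple(current))
            return
        for size in sizes:
            # Only allow sizes no larger than the previous one to avoid duplicates.
            if size <= max_size and size <= remaining:
                fill(remaining - size, size, current + [size])

    fill(slots, sizes[0], [])
    return layouts


if __name__ == "__main__":
    for layout in chassis_layouts():
        cores = [blades * CORES_PER_BLADE for blades in layout]
        print(f"{len(layout)} server(s), cores per server: {cores}")
```

Under these assumptions the two extremes named in the text both appear in the result: two four-blade groups (two 16-core servers) and eight single blades (eight four-core servers).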

The maximum SMP configuration supported by BladeSymphony 1000 is:

• Four dual-core Intel Itanium Server Blades for a total of 16 CPU cores

• 256 GB memory (64 GB per server blade x 4)

• Eight gigabit NICs (two on-board per server blade) connected to two internal gigabit Ethernet switches

• Eight PCI-X slots (or 16 PCI-X slots with chassis B)
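The totals in the list above follow directly from the per-blade figures. A minimal sketch of that arithmetic is shown here; the names `BladeSpec` and `smp_totals` are hypothetical, the per-blade values are the maximums quoted in the list, and PCI-X slots are left out because their count depends on the chassis type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BladeSpec:
    """Per-blade maximums as quoted in the list above."""
    cores: int = 4        # two dual-core sockets
    memory_gb: int = 64   # maximum memory per server blade
    nics: int = 2         # on-board gigabit NICs


def smp_totals(blades, spec=BladeSpec()):
    """Aggregate the resources of `blades` server blades joined into one SMP server."""
    if not 1 <= blades <= 4:
        # The text states that up to four blades can be joined.
        raise ValueError("an SMP server is built from one to four blades")
    return {
        "cores": blades * spec.cores,
        "memory_gb": blades * spec.memory_gb,
        "nics": blades * spec.nics,
    }


if __name__ == "__main__":
    print(smp_totals(4))
```

For four blades this gives 16 cores, 256 GB of memory, and eight NICs, matching the maximum configuration.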

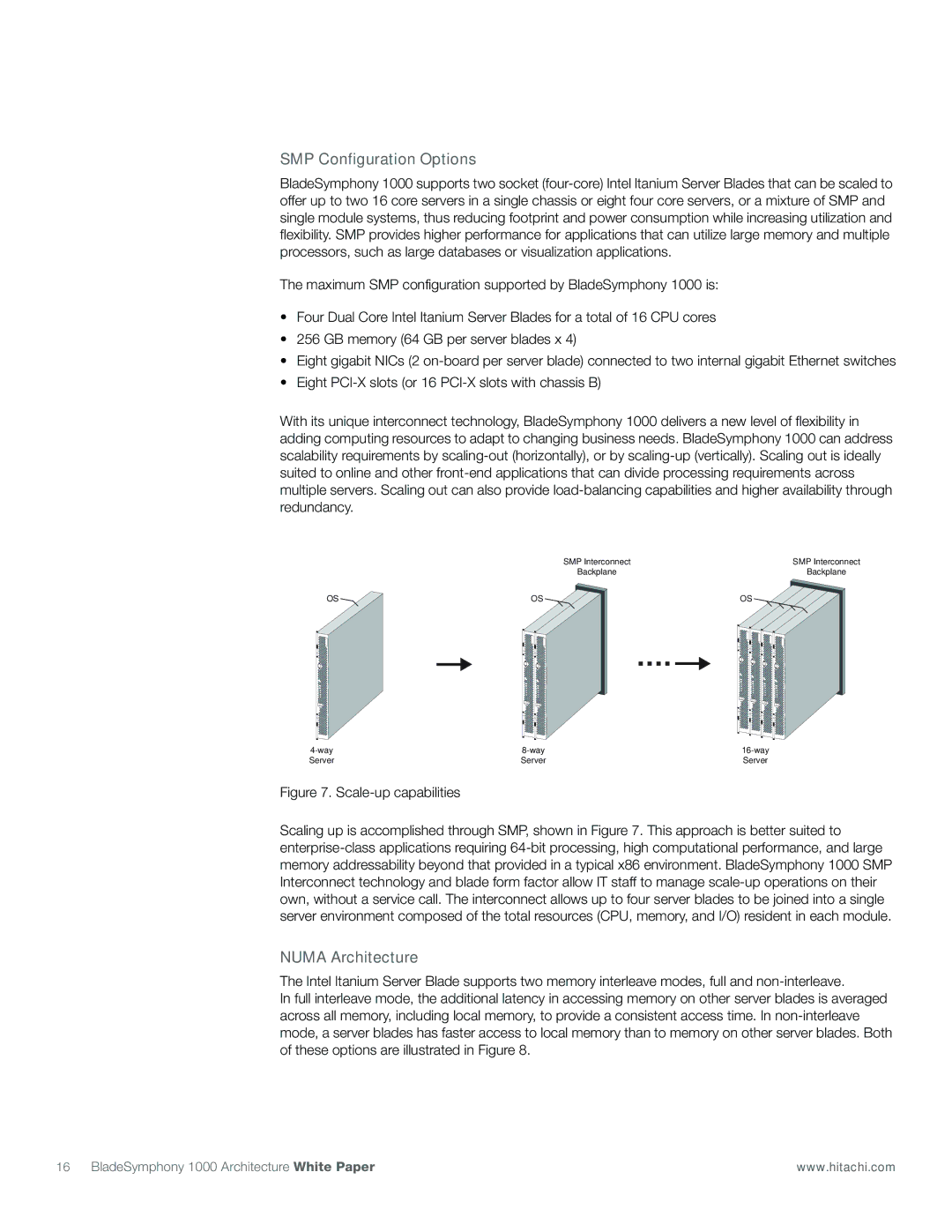

With its unique interconnect technology, BladeSymphony 1000 delivers a new level of flexibility in adding computing resources to adapt to changing business needs. BladeSymphony 1000 can address scalability requirements by scaling out (horizontally) or by scaling up (vertically). Scaling out is ideally suited to online and other front-end applications that can divide processing requirements across multiple servers. Scaling out can also provide load-balancing capabilities and higher availability through redundancy.

[Figure labels: SMP Interconnect, Backplane; three configurations shown as a 4-way server, an 8-way server, and a 16-way server, each with one OS.]

Figure 7. Scale-up capabilities

Scaling up is accomplished through SMP, shown in Figure 7. This approach is better suited to enterprise-class applications requiring 64-bit processing, high computational performance, and large memory addressability beyond that provided in a typical x86 environment. BladeSymphony 1000 SMP Interconnect technology and blade form factor allow IT staff to manage scale-up operations on their own, without a service call. The interconnect allows up to four server blades to be joined into a single server environment composed of the total resources (CPU, memory, and I/O) resident in each module.

NUMA Architecture

The Intel Itanium Server Blade supports two memory interleave modes, full and non-interleave.

In full interleave mode, the additional latency of accessing memory on other server blades is averaged across all memory, including local memory, to provide a consistent access time. In non-interleave mode, a server blade has faster access to its local memory than to memory on other server blades. Both of these options are illustrated in Figure 8.
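The difference between the two modes can be illustrated with a simple latency model. This is a sketch under stated assumptions, not a description of the actual hardware: it assumes memory is spread evenly across the blades in full interleave mode, and the latency values used (100 ns local, 200 ns remote) are purely illustrative, not measured figures. The function names are hypothetical.

```python
def full_interleave_latency(local_ns, remote_ns, blades):
    """Mean access time when addresses are spread evenly over all blades.

    One share of the accesses lands on local memory and the remaining
    (blades - 1) shares land on other blades, so every access sees the same
    average cost regardless of where the data lives.
    """
    return (local_ns + (blades - 1) * remote_ns) / blades


def non_interleave_latency(local_ns, remote_ns, local_fraction):
    """Mean access time when a fraction of accesses hits local memory."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


if __name__ == "__main__":
    # Illustrative values only.
    local_ns, remote_ns, blades = 100.0, 200.0, 4
    print("full interleave:", full_interleave_latency(local_ns, remote_ns, blades))
    for fraction in (0.25, 0.75, 1.0):
        print(f"non-interleave, {fraction:.0%} local:",
              non_interleave_latency(local_ns, remote_ns, fraction))
```

In this model, non-interleave mode pays off only when the operating system and application keep most accesses on local memory; when accesses are spread evenly, the two modes converge on the same average.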