Version 1.0, 4/10/02

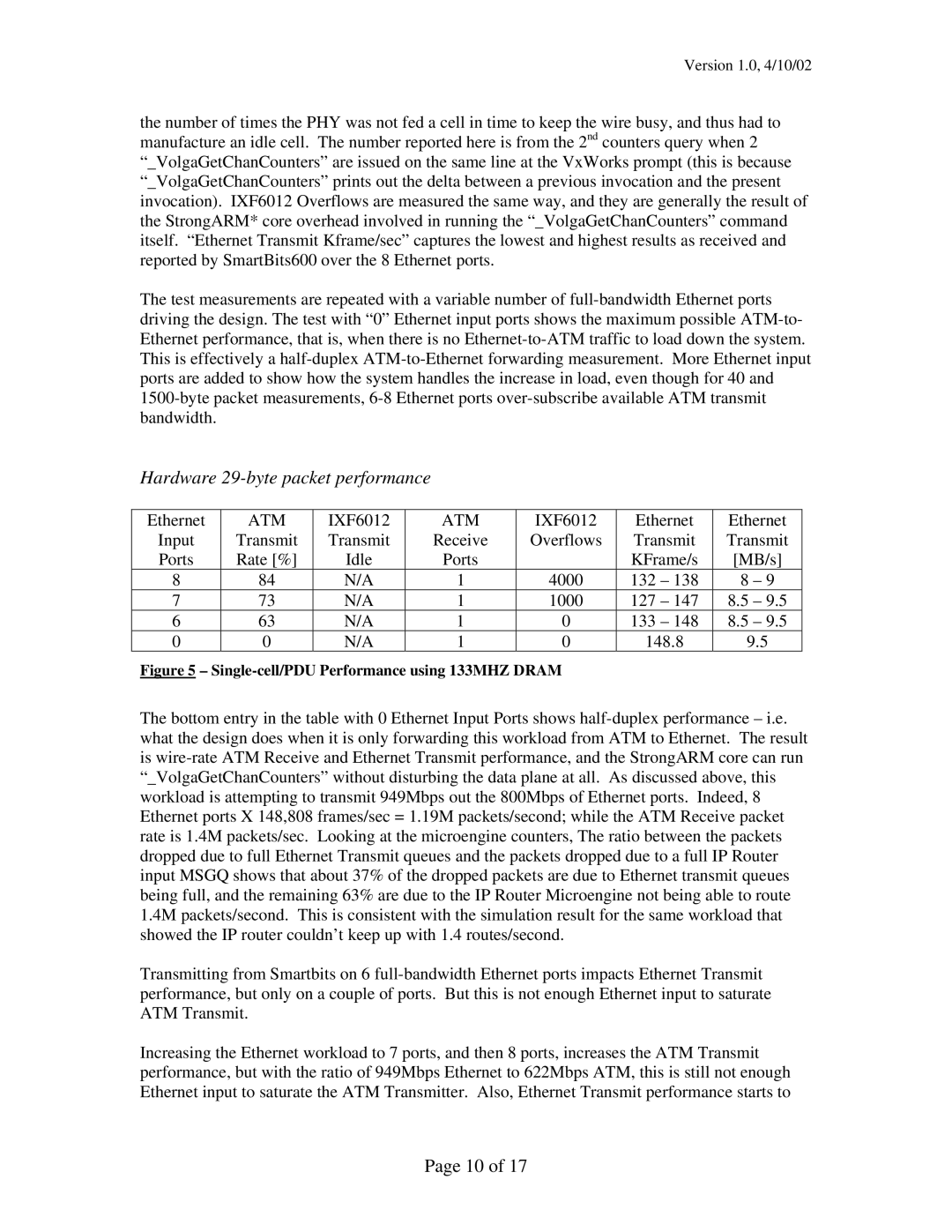

the number of times the PHY was not fed a cell in time to keep the wire busy and therefore had to generate an idle cell. The value reported here is taken from the second of two “_VolgaGetChanCounters” commands issued on the same line at the VxWorks prompt, because “_VolgaGetChanCounters” prints the change in each counter since its previous invocation. IXF6012 Overflows are measured the same way; they are generally caused by the StrongARM* core overhead of running the “_VolgaGetChanCounters” command itself. “Ethernet Transmit KFrame/s” gives the lowest and highest rates received and reported by the SmartBits600 across the 8 Ethernet ports.
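The reason for reporting the second of two back-to-back queries can be illustrated with a small model. This is a hypothetical Python sketch, not the VxWorks command: `DeltaCounterReader`, `read_hw`, and the 32-bit wrap mask are illustrative assumptions. The first query presumably returns whatever accumulated since the command last ran, which may span an arbitrary interval. The second, issued immediately afterward on the same line, covers only the short gap between the two invocations.

```python
# Hypothetical model of a counter command that, like _VolgaGetChanCounters,
# reports how much each counter grew since the command last ran.
COUNTER_MASK = 0xFFFFFFFF  # assumption: 32-bit free-running hardware counters


class DeltaCounterReader:
    def __init__(self, read_hw):
        # read_hw: callable returning the current raw counter value
        self._read_hw = read_hw
        self._last = 0  # baseline before the first query

    def query(self):
        """Return the counter increase since the previous query (wrap-safe)."""
        now = self._read_hw()
        delta = (now - self._last) & COUNTER_MASK
        self._last = now
        return delta


# Simulated idle-cell counter: a large backlog from earlier activity, then a
# few new idle cells during the short gap between two back-to-back queries.
samples = iter([1_000_000, 1_000_012])
reader = DeltaCounterReader(lambda: next(samples))

first = reader.query()   # stale: everything since the previous run
second = reader.query()  # only the interval between the two queries
print(first, second)
```

In this model only `second` reflects the interval of interest, which is why a single invocation would overstate the idle-cell and overflow counts.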

The test measurements are repeated with a variable number of Ethernet input ports.

Hardware 29-byte packet performance

| Ethernet Input Ports | ATM Transmit Rate [%] | IXF6012 Transmit Idle | ATM Receive Ports | IXF6012 Overflows | Ethernet Transmit KFrame/s | Ethernet Transmit [MB/s] |
|---|---|---|---|---|---|---|
| 8 | 84 | N/A | 1 | 4000 | 132 – 138 | 8 – 9 |
| 7 | 73 | N/A | 1 | 1000 | 127 – 147 | 8.5 – 9.5 |
| 6 | 63 | N/A | 1 | 0 | 133 – 148 | 8.5 – 9.5 |
| 0 | 0 | N/A | 1 | 0 | 148.8 | 9.5 |

Figure 5 – Single-cell/PDU Performance using 133 MHz DRAM
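The ATM Transmit Rate column in Figure 5 can be approximately reproduced with simple arithmetic. The Python sketch below rests on assumptions the table does not state: each Ethernet input port runs at 100 Mbps with minimum-size 64-byte frames (the rate implied by the 148.8 KFrame/s entry), each frame becomes exactly one 53-byte ATM cell, and the 622 Mbps ATM link is OC-12c with 599.04 Mbps of cell payload capacity. The constant and function names are illustrative.

```python
# Back-of-envelope check of the ATM Transmit Rate column (assumed parameters).
ETH_LINE_BPS = 100e6              # assumption: 100 Mbps Ethernet ports
ETH_WIRE_BYTES = 64 + 8 + 12      # 64-byte frame + preamble/SFD + inter-frame gap
ATM_CELL_BITS = 53 * 8            # one 53-byte cell per PDU (single-cell PDU)
OC12C_PAYLOAD_BPS = 599.04e6      # assumption: OC-12c cell payload capacity

eth_fps = ETH_LINE_BPS / (ETH_WIRE_BYTES * 8)   # frames/s per port at line rate
atm_cps = OC12C_PAYLOAD_BPS / ATM_CELL_BITS     # cells/s the ATM link can carry


def atm_utilization(ports: int) -> float:
    """Fraction of ATM cell capacity used when `ports` ports run at line rate."""
    return ports * eth_fps / atm_cps


# Ethernet input needed to fill the ATM link when every frame is one cell
ports_to_saturate = atm_cps / eth_fps
eth_mbps_to_saturate = ports_to_saturate * ETH_LINE_BPS / 1e6

for p in (6, 7, 8):
    print(f"{p} ports: {100 * atm_utilization(p):.1f}% of ATM capacity")
print(f"Ethernet needed to saturate ATM: {eth_mbps_to_saturate:.0f} Mbps")
```

Under these assumptions the sketch gives about 63%, 74%, and 84% utilization for 6, 7, and 8 ports, close to the measured 63%, 73%, and 84%. It also gives about 949 Mbps of Ethernet input (roughly 9.5 ports) to fill the ATM link, matching the 949 Mbps figure discussed in the text.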

The bottom entry in the table with 0 Ethernet Input Ports shows

Transmitting from SmartBits on 6

Increasing the Ethernet workload to 7 ports, and then to 8 ports, increases ATM Transmit performance. However, filling the 622 Mbps ATM link with single-cell PDUs takes about 949 Mbps of Ethernet input, so even 8 ports do not supply enough Ethernet traffic to saturate the ATM transmitter. Also, Ethernet Transmit performance starts to
