Using Serviceguard Extension for RAC Version A.11.20

Legal Notices

Contents

Support of Oracle RAC ASM with SGeRAC

SGeRAC Toolkit for Oracle RAC 10g or later

Maintenance

Index

Troubleshooting

Software Upgrades

Blank Planning Worksheets

Advantages of using SGeRAC

User Guide Overview

Problem Reporting

Typographical Conventions

Where to find Documentation on the Web

Introduction to Serviceguard Extension for RAC

What is a Serviceguard Extension for RAC Cluster?

Using Packages in a Cluster

Group Membership

Serviceguard Extension for RAC Architecture

Group Membership Daemon

Storage Configuration Options

Serviceguard Extension for RAC

Package Dependencies

About Veritas CFS and CVM from Symantec

Overview of SGeRAC and Oracle 10g, 11gR1, and 11gR2 RAC

SMS bundle

Overview of SGeRAC Cluster Interconnect Subnet Monitoring

How Cluster Interconnect Subnet Works

Configuring Packages for Oracle RAC Instances

Configuring Packages for Oracle Listeners

Node Failure

Before Node Failure

Larger Clusters

Up to Four Nodes with SCSI Storage

Point-to-Point Connections to Storage Devices

Four-Node RAC Cluster

GMS Authorization

Eight-Node Cluster with EVA, XP or EMC Disk Array

Overview of Serviceguard Manager

Starting Serviceguard Manager

Monitoring Clusters with Serviceguard Manager

Administering Clusters with Serviceguard Manager

Configuring Clusters with Serviceguard Manager

Cluster Timeouts

Serviceguard Cluster Timeout

Interface Areas

Group Membership API (NMAPI2)

Oracle Cluster Software

Shared Storage

Listener

Automated Startup and Shutdown

RAC Instances

Network Monitoring

Network Planning for Cluster Communication

Manual Startup and Shutdown

Planning Storage for Oracle Cluster Software

Planning Storage for Oracle 10g/11gR1/11gR2 RAC

Storage Planning with CFS

Volume Planning with SLVM

Volume Planning with CVM

RAW Logical Volume Name Size MB

About Multipathing

Installing Serviceguard Extension for RAC

About Device Special Files

Where cDSFs Reside

About Cluster-wide Device Special Files (cDSFs)

Configuration File Parameters

Cluster Communication Network Monitoring

Single Network for Cluster Communications

SG-HB/RAC-IC Traffic Separation

Alternate Configuration-Multiple RAC Databases

Guidelines for Changing Cluster Parameters

When Cluster Interconnect Subnet Monitoring is used

When Cluster Interconnect Subnet Monitoring is not Used

Cluster Interconnect Monitoring Restrictions

Creating a Storage Infrastructure with LVM

Limitations of Cluster Communication Network Monitor

HP Serviceguard Extension for RAC

Building Volume Groups for RAC on Mirrored Disks

Creating Volume Groups and Logical Volumes

Building Mirrored Logical Volumes for RAC with LVM Commands

# mkdir /dev/vgrac

# mknod /dev/vgrac/group c 64 0xhh0000

# ls -l /dev/*/group
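The `0xhh0000` in the `mknod` command above is a placeholder: `hh` stands for a two-digit hexadecimal volume group number that no other volume group on the system already uses (the `ls -l /dev/*/group` listing shows the minor numbers currently taken). A minimal sketch of building that value follows; the function name `vg_minor` and the choice of volume group number 1 are illustrative assumptions, not part of the product.

```shell
#!/bin/sh
# Hypothetical helper (not an HP-UX command): format a decimal volume
# group number as the 0xhh0000 minor number pattern used with mknod.
vg_minor() {
    # $1 is a decimal volume group number chosen by the administrator
    printf '0x%02x0000\n' "$1"
}

# Print (do not run) the mknod command for volume group number 1.
echo "mknod /dev/vgrac/group c 64 $(vg_minor 1)"
```

The script only prints the command, so the result can be compared against the existing group files before anything is created.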

# lvcreate -m 1 -M y -s g -n system.dbf -L 408 /dev/vgrac

Creating Mirrored Logical Volumes for RAC Data Files

# lvcreate -m 1 -M y -s g -n redo1.log -L 408 /dev/vgrac
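Because every RAC data file gets the same `lvcreate` options, the commands can be generated from a list of names and sizes. The following is a dry-run sketch only: the function name `print_lvcreate` and the `name:size` argument format are assumptions made for illustration, and the flags are copied unchanged from the commands above. It prints the commands rather than running them.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print one lvcreate command per logical
# volume. Nothing is executed; the output is meant for review.
print_lvcreate() {
    vg="$1"; shift
    for spec in "$@"; do
        name=${spec%%:*}   # text before the colon: logical volume name
        size=${spec##*:}   # text after the colon: size passed to -L
        echo "lvcreate -m 1 -M y -s g -n $name -L $size $vg"
    done
}

# Example: the two logical volumes shown above.
print_lvcreate /dev/vgrac system.dbf:408 redo1.log:408
```

Printing first keeps the name and size list in one place, which makes it easier to check against the planning worksheet before the volumes are created.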

Creating RAC Volume Groups on Disk Arrays

Creating Logical Volumes for RAC on Disk Arrays

Oracle Demo Database Files

Exporting with LVM Commands

Displaying the Logical Volume Infrastructure

Exporting the Logical Volume Infrastructure

Installing Oracle Real Application Clusters

Creating a Storage Infrastructure with CFS

# cmruncl

# cmviewcl

Initializing the Veritas Volume Manager

# cmapplyconf -C clm.asc

# cfscluster config -s

# vxdctl -c mode

# /etc/vx/bin/vxdisksetup -i c4t4d0

# vxdg -s init cfsdg1 c4t4d0

# newfs -F vxfs /dev/vx/rdsk/cfsdg1/vol2

# newfs -F vxfs /dev/vx/rdsk/cfsdg1/vol3

# cfsmntadm add cfsdg1 vol1 /cfs/mnt1 all=rw

# cfsmntadm add cfsdg1 vol2 /cfs/mnt2 all=rw

Deleting CFS from the Cluster

# bdf | grep cfs

# cfsmntadm delete /cfs/mnt1

# cfsmntadm delete /cfs/mnt2

# cfscluster unconfig

# vxinstall

Creating a Storage Infrastructure with CVM

# cfsdgadm delete cfsdg1

Using CVM 5.x or later

# vxdg -s init opsdg c4t4d0

Using CVM

Preparing the Cluster for Use with CVM

# vxedit set diskdetpolicy=global DiskGroupName

# cmapplyconf -P /etc/cmcluster/cvm/VxVM-CVM-pkg.conf

# /usr/lib/vxvm/bin/vxdisksetup -i /dev/dsk/c0t3d2

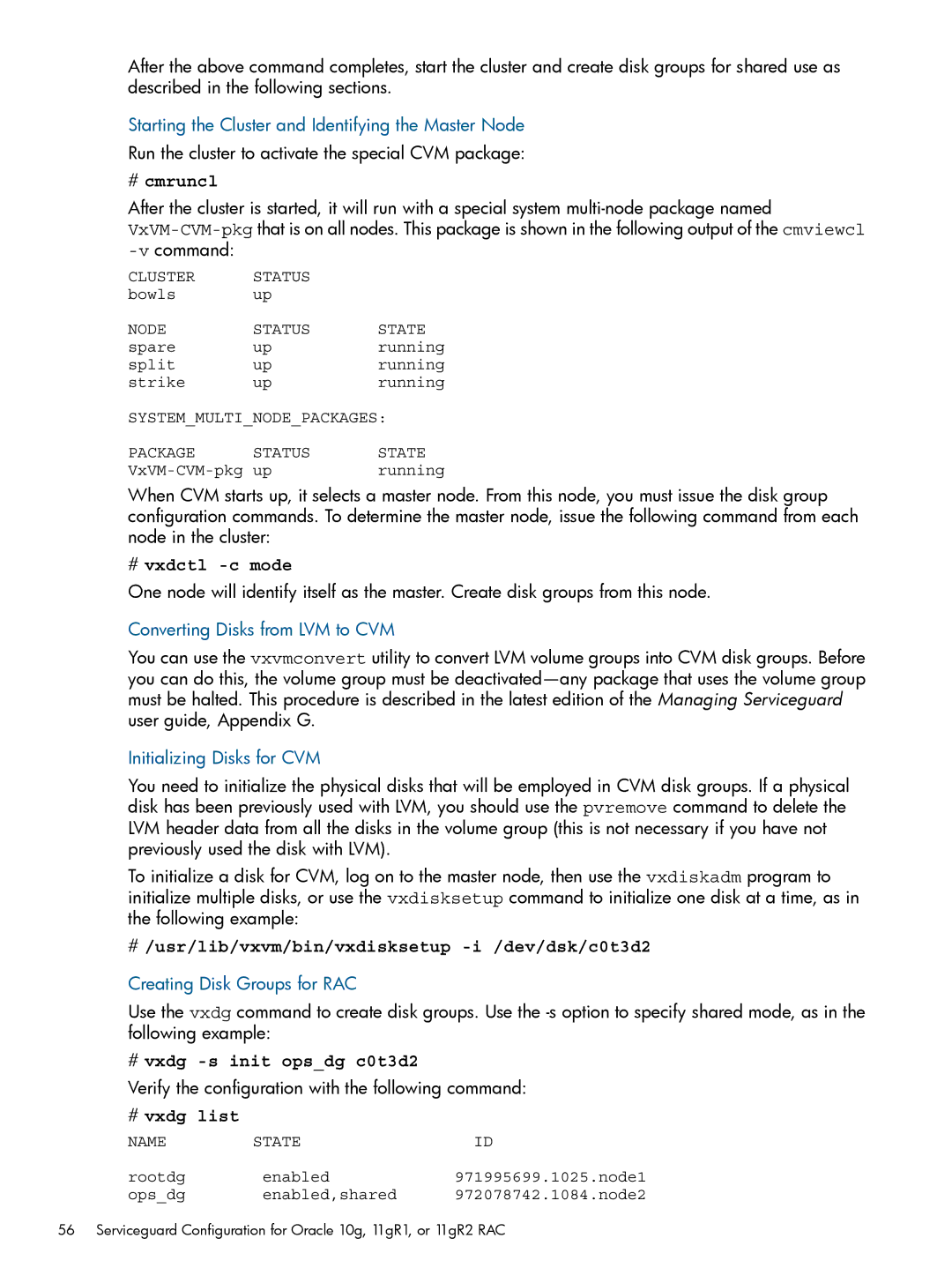

# cmruncl

# vxdg -s init opsdg c0t3d2

# vxdg list

Creating Volumes

Oracle Demo Database Files

Mirror Detachment Policies with CVM

# vxassist -g opsdg make logfiles 1024m

Adding Disk Groups to the Cluster Configuration

# groupadd oinstall

# groupadd dba

# groupadd oper

# useradd -u 203 -g oinstall -G dba,oper oracle

# passwd oracle

# mkdir -p /mnt/app/crs/oracle/product/10.2.0/crs

# usermod -d /cfs/mnt1/oracle oracle

# chmod 755 /dev/vgrac

/mnt/app/oracle/oradata/ver10/ver10raw.conf

Installing Oracle 10g, 11gR1, or 11gR2 Cluster Software

Installing Oracle 10g/11gR1/11gR2 RAC Binaries

Installing on Local File System

Installing RAC Binaries on a Local File System

Installing RAC Binaries on Cluster File System

Creating a RAC Demo Database

Creating a RAC Demo Database on SLVM or CVM

$ netca

# swlist VRTSodm

Verifying Oracle Disk Manager is Configured

Creating a RAC Demo Database on CFS

Configuring Oracle to Use Oracle Disk Manager Library

Verify that Oracle Disk Manager is Running

# ll -L /opt/VRTSodm/lib/libodm.sl

# cat /dev/odm/stats

# kcmodule -P state odm

$ cd $ORACLE_HOME/lib

Preparing Oracle Cluster Software for Serviceguard Packages

Configure Serviceguard Packages

$ORA_CRS_HOME/bin/crsctl disable crs

$ORACLE_HOME/bin/srvctl modify database -d dbname -y manual

/sbin/init.d/init.crs start

Why ASM over SLVM?

Introduction

SG/SGeRAC Support for ASM on HP-UX 11i

Overview

Configuring SLVM Volume Groups for ASM Disk Groups

Sample Command Sequence for Configuring SLVM Volume Groups

Support of Oracle RAC ASM with SGeRAC

ASM over SLVM

ASM support with SG/SGeRAC A.11.17.01 or later

ASM over Raw disk

Additional Hints on ASM Integration with SGeRAC

ASM may require Modified Backup/Restore Procedures

Installation, Configuration, Support, and Troubleshooting

Additional Documentation on the Web and Scripts

SGeRAC Toolkit for Oracle RAC 10g or later

Background

Serviceguard Extension for RAC Toolkit operation

Startup and shutdown of the combined Oracle RAC-SGeRAC stack

ASMDG MNP

Use Case 2: Setup

Use Case 3: Database storage in ASM over SLVM

Use Case 1: Performing maintenance with Oracle Clusterware

Use Case 2: Performing maintenance with ASM disk groups

Internal structure of SGeRAC for Oracle Clusterware

Support for the SGeRAC Toolkit

CFS-DG1-MNP CFS-DG2-MNP

Readme

DEPENDENCY_CONDITION DG-MNP-PKG=UP DEPENDENCY_LOCATION SAME_NODE

ORACLE_HOME, CHECK_INTERVAL...MAINTENANCE_FLAG

DEPENDENCY_NAME

DEPENDENCY_CONDITION OC-MNP-PKG=UP DEPENDENCY_LOCATION SAME_NODE

Set to any name desired for the RAC MNP

For a package using CFS

ORA_CRS_HOME

Asmdisk Group

Asmtkitdir

DEPENDENCY_CONDITION OC-DGMP-PKG=UP DEPENDENCY_LOCATION SAME_NODE

Conclusion

Additional Documentation on the Web

# cmviewcl -r A.11.16

Types of Cluster and Package States

Examples of Cluster and Package States

Name Status State

Package Status and State

Cluster Status

Node Status and State

Status of Group Membership

Package Switching Attributes

Service Status

Network Status

Failover and Failback Policies

Normal Running Status

Quorum Server Status

CVM Package Status

Output of the cmviewcl -v command is as follows

Status After Moving the Package to Another Node

Viewing Data on Unowned Packages

Status After Package Switching is Enabled

Status After Halting a Node

Checking the Cluster Configuration and Components

Status Nodename Name

Checking Cluster Components

Verifying Cluster Components

cmcheckconf -P

Setting up Periodic Cluster Verification

Example

Online Node Addition and Deletion

Online Reconfiguration

Limitations

Making LVM Volume Groups Shareable

Managing the Shared Storage

Activating an LVM Volume Group in Shared Mode

Making Offline Changes to Shared Volume Groups

Making a Volume Group Unshareable

Deactivating a Shared Volume Group

On node 2, issue the following command

Changing the CVM Storage Configuration

Removing Serviceguard Extension for RAC from a System

Adding Additional Shared LVM Volume Groups

Using Event Monitoring Service

Using EMS Hardware Monitors

Monitoring Hardware

Adding Disk Hardware

Replacing a Mechanism in a Disk Array Configured with LVM

# vgcfgrestore /dev/vgsg01 /dev/dsk/c2t3d0

Replacing Disks

# lvreduce -m 0 /dev/vgsg01/lvolname /dev/dsk/c2t3d0

# vgcfgrestore -n vg name pv raw path

# lvextend -m 1 /dev/vgsg01 /dev/dsk/c2t3d0

# lvsync /dev/vgsg01/lvolname

# pvchange -a n pv path

Online Hardware Maintenance with Inline SCSI Terminator

Replacing a Lock Disk

W SCSI Buses with Inline Terminators

Replacement of I/O Cards

Replacement of LAN Cards

Offline Replacement

Online Replacement

Monitoring RAC Instances

Troubleshooting

Software Upgrades

Rolling Software Upgrades

Upgrading Serviceguard to SGeRAC cluster

cmhaltnode nodename

Steps for Rolling Upgrades

AUTOSTART_CMCLD =

Example of Rolling Upgrade

Keeping Kernels Consistent

Running Cluster Before Rolling Upgrade

Step

Node 1 Upgraded to SG/SGeRAC

# cmhaltnode -f node2

Limitations of Rolling Upgrades

Running Cluster After Upgrades

Migrating an SGeRAC Cluster with Cold Install

Non-Rolling Software Upgrades

Limitations of Non-Rolling Upgrades

Upgrade Using DRD

Rolling Upgrade Using DRD

Non-Rolling Upgrade Using DRD

Restrictions for DRD Upgrades

LVM Volume Group and Physical Volume Worksheet

Oracle Logical Volume Worksheet

Blank Planning Worksheets

Index

RAC
