Section: 3.4 Suspending and resuming jobs

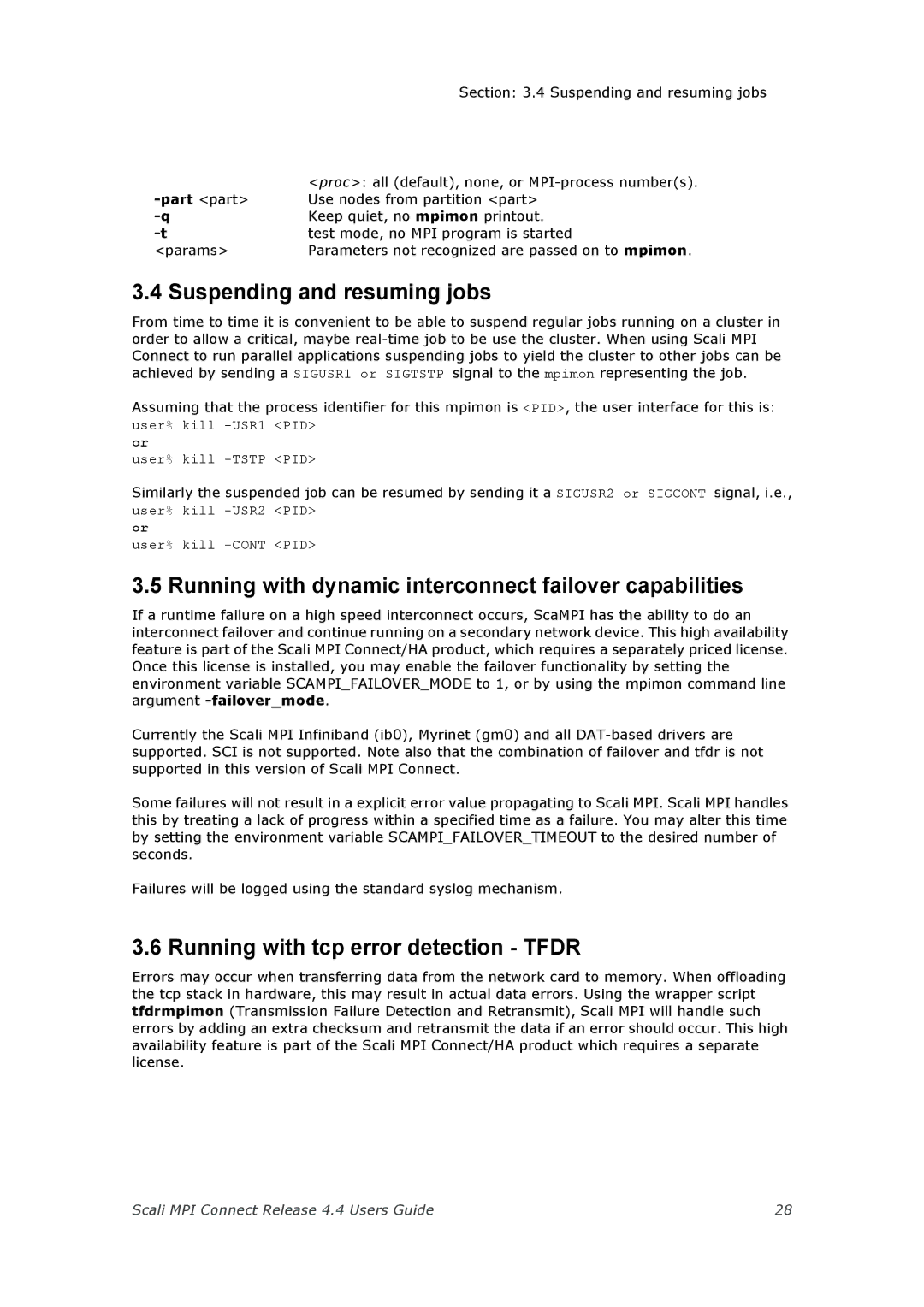

| | <proc>: all (default), none, or |
| | Use nodes from partition <part>. |
| | Keep quiet, no mpimon printout. |
| | Test mode, no MPI program is started. |
| <params> | Parameters not recognized are passed on to mpimon. |

3.4 Suspending and resuming jobs

From time to time it is convenient to be able to suspend regular jobs running on a cluster in order to allow a critical, maybe

Assuming that the process identifier for this mpimon is <PID>, the user interface for this is:

user% kill

user% kill

Similarly, the suspended job can be resumed by sending it a SIGUSR2 or SIGCONT signal, i.e.,

user% kill -USR2 <PID>

user% kill -CONT <PID>
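The resume step can be wrapped in a small shell helper that validates the process identifier before signalling. This is a minimal sketch: the function names `signal_job` and `resume_job` are hypothetical and not part of Scali MPI Connect, and the only signal hard-coded here is SIGCONT, which the text above names as a resume signal.

```shell
#!/bin/sh
# Hypothetical helper (not part of Scali MPI Connect): send a named signal
# to the mpimon process that represents a job.
signal_job() {
    sig=$1
    pid=$2
    # Refuse anything that is not a plain decimal PID (digits only, not 0).
    case $pid in
        ''|*[!0-9]*|0) echo "signal_job: invalid PID '$pid'" >&2; return 2 ;;
    esac
    # kill -0 sends no signal; it only checks that the process can be signalled.
    if ! kill -0 "$pid" 2>/dev/null; then
        echo "signal_job: no such process $pid" >&2
        return 1
    fi
    kill -s "$sig" "$pid"
}

# Resume a suspended job using SIGCONT.
resume_job() {
    signal_job CONT "$1"
}
```

Calling `resume_job` with the PID of the mpimon process is then equivalent to the `kill` command for SIGCONT; `signal_job` can be called directly with any other signal name.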

3.5 Running with dynamic interconnect failover capabilities

If a runtime failure occurs on a high-speed interconnect, ScaMPI can perform an interconnect failover and continue running on a secondary network device. This high-availability feature is part of the Scali MPI Connect/HA product, which requires a separately priced license. Once this license is installed, you may enable the failover functionality by setting the environment variable SCAMPI_FAILOVER_MODE to 1, or by using the mpimon command line argument

Currently the Scali MPI Infiniband (ib0), Myrinet (gm0) and all

Some failures will not result in an explicit error value propagating to Scali MPI. Scali MPI handles this by treating a lack of progress within a specified time as a failure. You may alter this time by setting the environment variable SCAMPI_FAILOVER_TIMEOUT to the desired number of seconds.

Failures will be logged using the standard syslog mechanism.
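As an illustration, the two environment variables described in this section could be set in the shell before the job is started. The timeout of 30 seconds is an arbitrary example value, not a documented default.

```shell
# Enable interconnect failover (requires the Scali MPI Connect/HA license).
export SCAMPI_FAILOVER_MODE=1

# Treat 30 seconds without progress as a failure.
# 30 is an illustrative value only; choose one that suits the application.
export SCAMPI_FAILOVER_TIMEOUT=30
```

The job is then started in the usual way from the same shell so that it inherits both settings.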

3.6 Running with TCP error detection - TFDR

Errors may occur when transferring data from the network card to memory. When the TCP stack is offloaded to hardware, this may result in actual data errors. When a job is started with the wrapper script tfdrmpimon (Transmission Failure Detection and Retransmit), Scali MPI handles such errors by adding an extra checksum and retransmitting the data if an error is detected. This high-availability feature is part of the Scali MPI Connect/HA product, which requires a separate license.
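The general idea of checksum-based detection with retransmission can be sketched in a few lines of shell. This is a conceptual illustration only and does not show how Scali MPI implements TFDR: the function names are hypothetical, the "transfer" is a caller-supplied command that copies a file, and the checksum is the standard POSIX `cksum` CRC.

```shell
#!/bin/sh
# Conceptual sketch only: checksum-verified transfer with retransmission.

# Print the CRC of a file. Reading from stdin makes cksum print "<crc> <size>".
crc_of() {
    cksum < "$1" | cut -d ' ' -f 1
}

# verified_copy <src> <dst> <max_attempts> <transfer_cmd>
# Runs "<transfer_cmd> <src> <dst>" until the destination checksum matches
# the source checksum, or until max_attempts transfers have been tried.
verified_copy() {
    src=$1; dst=$2; max=$3; transfer_cmd=$4
    want=$(crc_of "$src")
    attempt=1
    while [ "$attempt" -le "$max" ]; do
        "$transfer_cmd" "$src" "$dst"
        if [ "$(crc_of "$dst")" = "$want" ]; then
            echo "ok after $attempt attempt(s)"
            return 0
        fi
        # Checksum mismatch: the data was corrupted, so retransmit.
        attempt=$((attempt + 1))
    done
    echo "failed after $max attempts" >&2
    return 1
}
```

For example, `verified_copy input.dat output.dat 3 cp` copies the file with `cp` and repeats the copy, up to three times, if the checksums differ.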

Scali MPI Connect Release 4.4 Users Guide