Section: 3.3 Running Scali MPI Connect programs

<pid>  is the Unix process identifier of the monitor program mpimon.

<nodename>  is the name of the node where mpimon is running.

Note: SMC requires a homogeneous file system image, i.e., a file system that provides the same paths and program names on all nodes of the cluster on which SMC is installed.

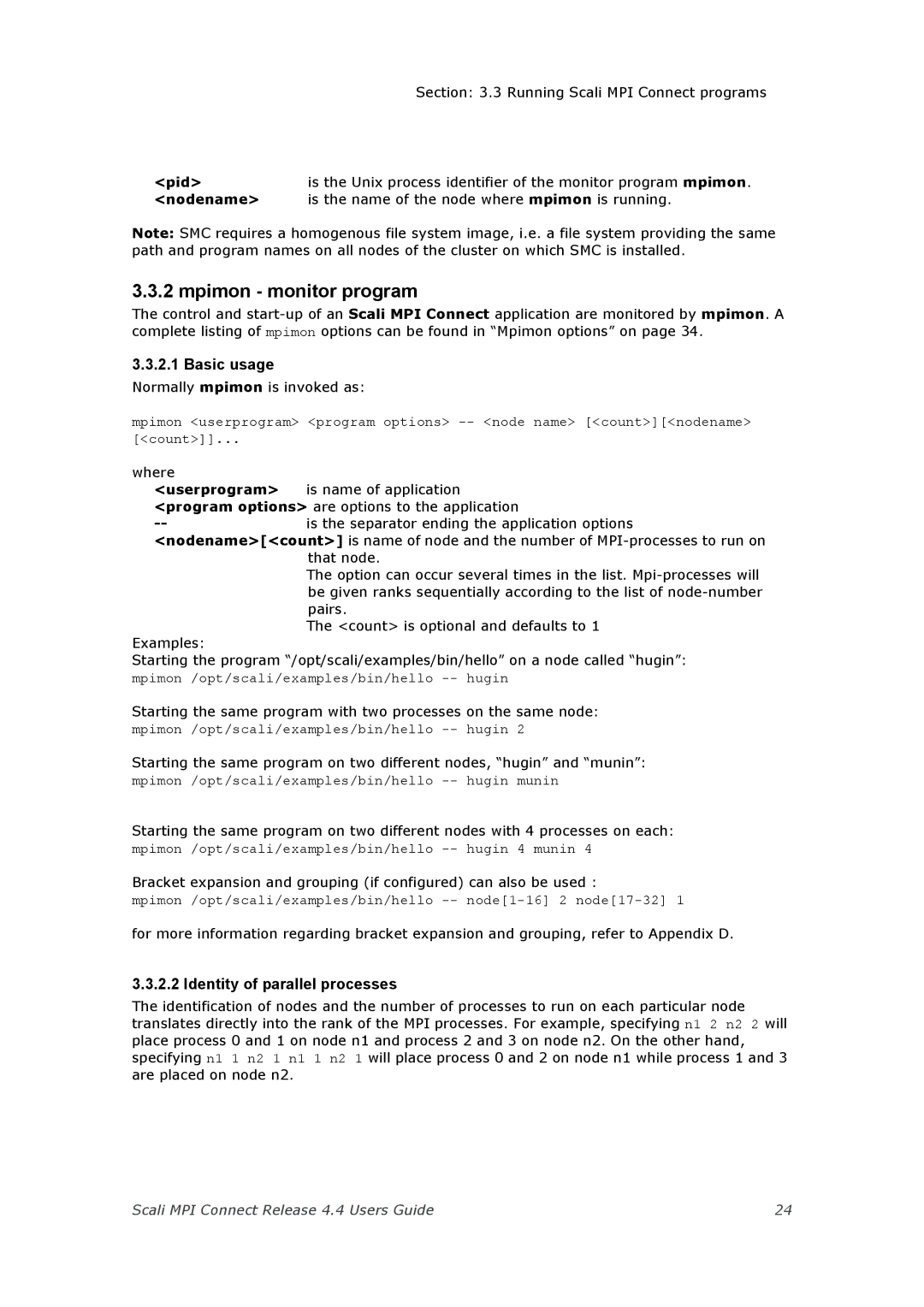

3.3.2 mpimon - monitor program

The control and

3.3.2.1 Basic usage

Normally mpimon is invoked as:

mpimon <userprogram> <program options> -- <nodename> [<count>] [<nodename> [<count>]]...

where

<userprogram>  is the name of the application.

<program options>  are options passed to the application.

--  is the separator ending the application options.

<nodename> [<count>]  is the name of a node and the number of processes to start on that node.

The <nodename> [<count>] pair can occur several times in the list.

The <count> is optional and defaults to 1.
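To make the argument layout concrete, the following Python sketch splits an mpimon-style argument list into the user program, its options and the node list, applying the default count of 1. It is a hypothetical helper, not part of SMC, and it assumes that the separator is "--", that the application options themselves never contain "--", and that node names are never purely numeric.

```python
def parse_mpimon_args(argv):
    """Split <userprogram> <program options> -- <nodename> [<count>] ...

    Hypothetical helper: assumes '--' is the separator and that the
    application options do not themselves contain '--'.
    """
    if "--" not in argv:
        raise ValueError("missing '--' separator before the node list")
    sep = argv.index("--")
    if sep == 0:
        raise ValueError("missing user program")
    program, options = argv[0], argv[1:sep]

    nodes = []
    for token in argv[sep + 1:]:
        if token.isdigit():
            # A bare number is the <count> for the node just before it.
            if not nodes or nodes[-1][1] is not None:
                raise ValueError(f"count {token} does not follow a node name")
            nodes[-1] = (nodes[-1][0], int(token))
        else:
            nodes.append((token, None))

    # <count> is optional and defaults to 1.
    nodes = [(name, 1 if count is None else count) for name, count in nodes]
    return program, options, nodes


print(parse_mpimon_args(
    ["/opt/scali/examples/bin/hello", "--", "hugin", "4", "munin", "4"]))
# ('/opt/scali/examples/bin/hello', [], [('hugin', 4), ('munin', 4)])
```

Treating any purely numeric token as a count keeps the grammar unambiguous; a node whose name is all digits would need a different rule.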

Examples:

Starting the program “/opt/scali/examples/bin/hello” on a node called “hugin”:

mpimon /opt/scali/examples/bin/hello -- hugin

Starting the same program with two processes on the same node:

mpimon /opt/scali/examples/bin/hello -- hugin 2

Starting the same program on two different nodes, “hugin” and “munin”:

mpimon /opt/scali/examples/bin/hello -- hugin munin

Starting the same program on two different nodes with 4 processes on each:

mpimon /opt/scali/examples/bin/hello -- hugin 4 munin 4

Bracket expansion and grouping (if configured) can also be used:

mpimon /opt/scali/examples/bin/hello

For more information on bracket expansion and grouping, refer to Appendix D.

3.3.2.2 Identity of parallel processes

The order in which nodes are listed, together with the number of processes to run on each, translates directly into the ranks of the MPI processes. For example, specifying n1 2 n2 2 places processes 0 and 1 on node n1 and processes 2 and 3 on node n2. Specifying n1 1 n2 1 n1 1 n2 1, on the other hand, places processes 0 and 2 on node n1 and processes 1 and 3 on node n2.
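The placement rule can be reproduced in a few lines of Python. This is a hypothetical sketch, not SMC code: it assumes the node list has already been parsed into (nodename, count) pairs and simply hands out consecutive ranks in command-line order, which yields exactly the two placements described in the examples.

```python
def place_ranks(node_list):
    """Map MPI ranks to nodes in the order the nodes are listed.

    node_list is a sequence of (nodename, count) pairs. Each entry
    takes the next `count` consecutive ranks.
    """
    placement = {}
    rank = 0
    for node, count in node_list:
        for _ in range(count):
            placement.setdefault(node, []).append(rank)
            rank += 1
    return placement


print(place_ranks([("n1", 2), ("n2", 2)]))
# {'n1': [0, 1], 'n2': [2, 3]}
print(place_ranks([("n1", 1), ("n2", 1), ("n1", 1), ("n2", 1)]))
# {'n1': [0, 2], 'n2': [1, 3]}
```

Listing a node more than once is therefore a simple way to interleave ranks across nodes instead of filling one node before moving to the next.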

Scali MPI Connect Release 4.4 Users Guide | 24 |