The output for this command could also have been 1 core on each of 4 compute nodes in the SLURM allocation.

Submitting a Non-MPI Parallel Job

Use the following format of the LSF bsub command to submit a parallel job that does not make use of

bsub

The bsub command submits the job to

The

The SLURM srun command is the user job launched by the LSF bsub command. SLURM launches the jobname in parallel on the reserved cores in the lsf partition.

The jobname parameter is the name of an executable file or command to be run in parallel.

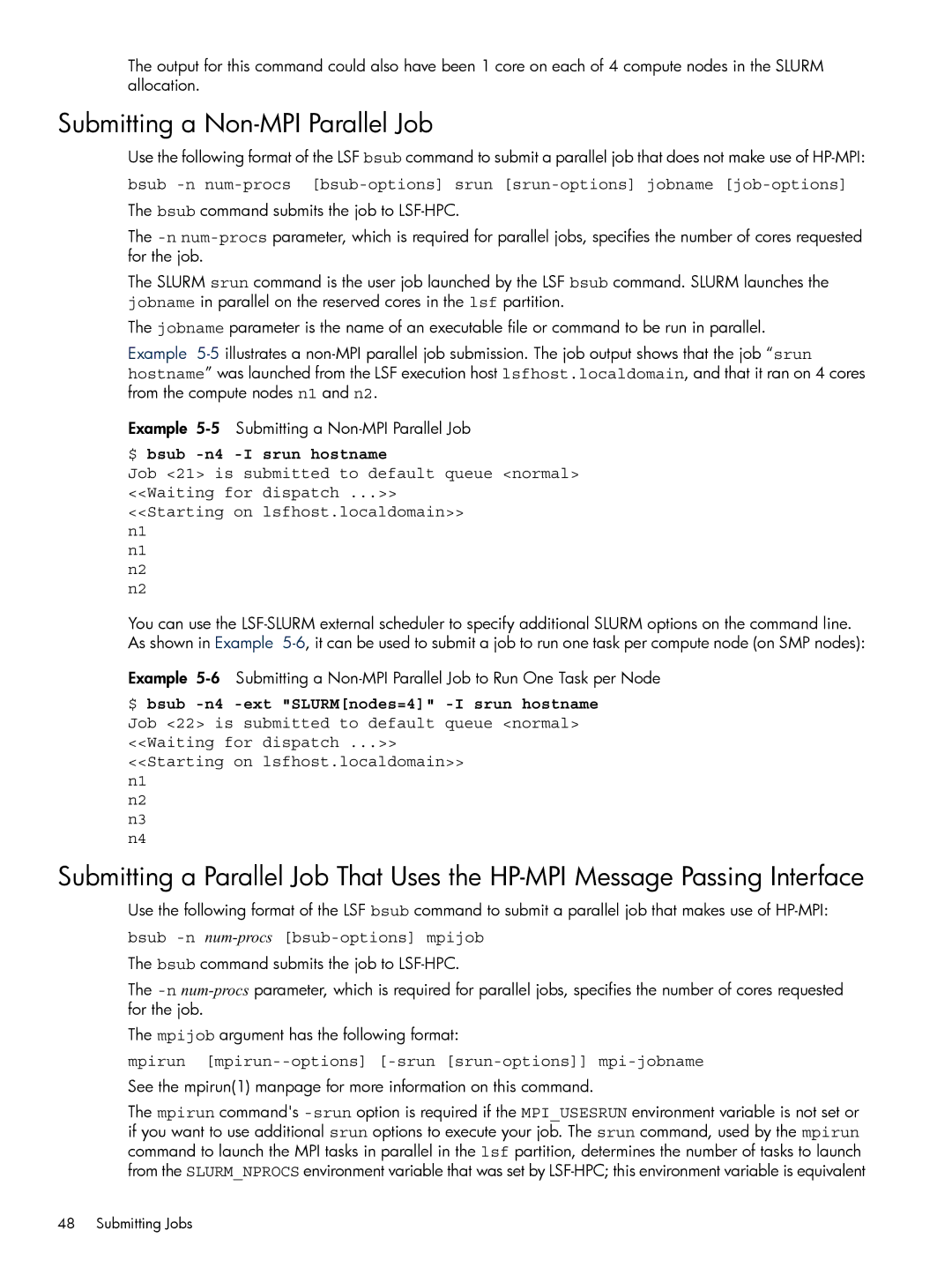

Example

$ bsub -n4 -I srun hostname

Job <21> is submitted to default queue <normal>

<<Waiting for dispatch ...>>

<<Starting on lsfhost.localdomain>>

n1

n1

n2

n2
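The distribution of tasks across nodes can be read off output like the listing above by tallying the host names. The following is a minimal sketch, not part of the original guide: the helper name count_tasks_per_node is hypothetical, and it assumes the job prints one host name per task and that LSF status lines begin with "Job <" or "<<", as in the example.

```shell
# Hypothetical helper: tally how many tasks ran on each node.
# Reads job output on stdin and prints "<node> <count>" lines, sorted by node.
count_tasks_per_node() {
  # Skip LSF status lines ("Job <...>", "<<...>>") and blank lines,
  # then count each remaining host name.
  awk '!/^Job </ && !/^<</ && NF { n[$1]++ }
       END { for (h in n) print h, n[h] }' | sort
}

# Sample input copied from the example output above.
printf '%s\n' \
  'Job <21> is submitted to default queue <normal>' \
  '<<Waiting for dispatch ...>>' \
  '<<Starting on lsfhost.localdomain>>' \
  n1 n1 n2 n2 | count_tasks_per_node
```

For the sample input this reports two tasks on n1 and two on n2. On a live system the same function could be placed at the end of a pipeline that starts with the bsub command shown in the example.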

You can use the

Example

$ bsub

<<Starting on lsfhost.localdomain>>

n1

n2

n3

n4

Submitting a Parallel Job That Uses the

Use the following format of the LSF bsub command to submit a parallel job that makes use of

bsub -n num-procs [bsub-options] mpijob

The bsub command submits the job to

The

The mpijob argument has the following format:

mpirun [mpirun-options] [-srun [srun-options]] mpi-jobname

See the mpirun(1) manpage for more information on this command.
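To show how the two formats above combine into one command line, here is a minimal sketch that only prints the resulting command and does not submit anything. The function name build_mpi_submit and the program name ./hello_world are hypothetical, and all optional bsub, mpirun, and srun options are omitted.

```shell
# Hypothetical helper: print (do not run) a submission command of the form
#   bsub -n num-procs mpirun -srun mpi-jobname
build_mpi_submit() {
  num_procs=$1
  mpi_jobname=$2
  # Reject an empty or non-numeric process count.
  case $num_procs in
    ''|*[!0-9]*)
      echo "num-procs must be a whole number" >&2
      return 1
      ;;
  esac
  printf 'bsub -n %s mpirun -srun %s\n' "$num_procs" "$mpi_jobname"
}

# ./hello_world is a placeholder name for an MPI executable.
build_mpi_submit 4 ./hello_world
```

Printing the command first makes it easy to check the argument order before running it by hand.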

The mpirun command's
