about running jobs. Refer to "Submitting a Batch Job or Job Script" for information about running scripts.

bsub |


This is the bsub command format to submit a parallel job to an

"Submitting a

This is the bsub command format to submit an

bsub -n num-procs -ext "SLURM[slurm-arguments]" \
    [bsub-options] [srun [srun-options]] jobname [job-options]

This is the bsub command format to submit a parallel job to an

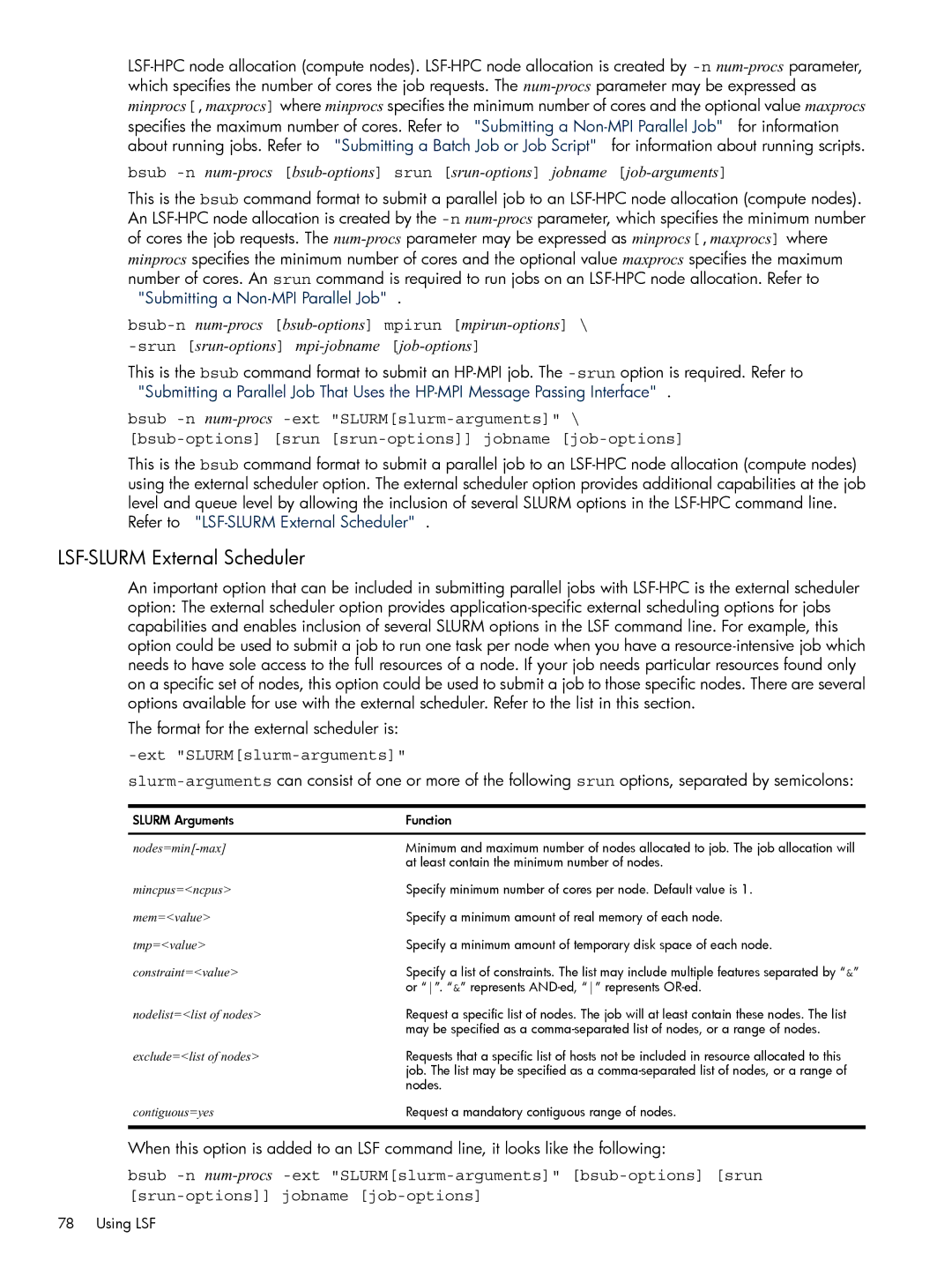

LSF-SLURM External Scheduler

An important option that can be included in submitting parallel jobs with

The format for the external scheduler is:

-ext "SLURM[slurm-arguments]"

SLURM Arguments | Function |

| Minimum and maximum number of nodes allocated to the job. The job allocation will contain at least the minimum number of nodes. |

mincpus=<ncpus> | Specify minimum number of cores per node. Default value is 1. |

mem=<value> | Specify the minimum amount of real memory on each node. |

tmp=<value> | Specify the minimum amount of temporary disk space on each node. |

constraint=<value> | Specify a list of constraints. The list may include multiple features separated by “&” or “|”. “&” represents |

nodelist=<list of nodes> | Request a specific list of nodes. The job will at least contain these nodes. The list |

| may be specified as a |

exclude=<list of nodes> | Request that a specific list of hosts not be included in the resources allocated to this |

| job. The list may be specified as a |

| nodes. |

contiguous=yes | Request a mandatory contiguous range of nodes. |
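As an illustration of how the arguments in the table might be combined into one external scheduler string, here is a minimal sketch. The helper name join_slurm_args is hypothetical and is not part of LSF or SLURM. The semicolon used to separate multiple arguments is an assumption that this section does not state, so confirm it against the bsub reference for your installation. The argument values are illustrative only.

```shell
# join_slurm_args: hypothetical helper, not part of LSF or SLURM.
# Joins its arguments with ';' (an assumed separator) and wraps
# the result in SLURM[...].
join_slurm_args() {
    joined=""
    for arg in "$@"; do
        if [ -z "$joined" ]; then
            joined="$arg"
        else
            joined="$joined;$arg"
        fi
    done
    printf 'SLURM[%s]\n' "$joined"
}

# Combine two arguments taken from the table above (values are illustrative).
ext_value=$(join_slurm_args "mincpus=2" "contiguous=yes")

# Print the option as it would appear on a bsub command line.
echo "-ext \"$ext_value\""
```

The sketch only prints the option text; it does not submit anything.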

When this option is added to an LSF command line, it looks like the following:

bsub -n num-procs -ext "SLURM[slurm-arguments]" [bsub-options] [srun [srun-options]] jobname [job-options]
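To make the command format more concrete, the following sketch fills the placeholders with sample values and prints the resulting command line instead of submitting it. The processor count, the mincpus value, and the use of hostname as the job are illustrative assumptions, not values taken from this guide.

```shell
# Dry run: assemble the bsub command line and print it rather than run it.
num_procs=4              # value for -n (illustrative)
slurm_args="mincpus=2"   # one SLURM argument from the table (illustrative value)
job="hostname"           # placeholder for jobname

cmd="bsub -n $num_procs -ext \"SLURM[$slurm_args]\" srun $job"
echo "$cmd"
```

Printing the command first makes it easy to check the quoting around the SLURM[...] string before submitting a real job.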
