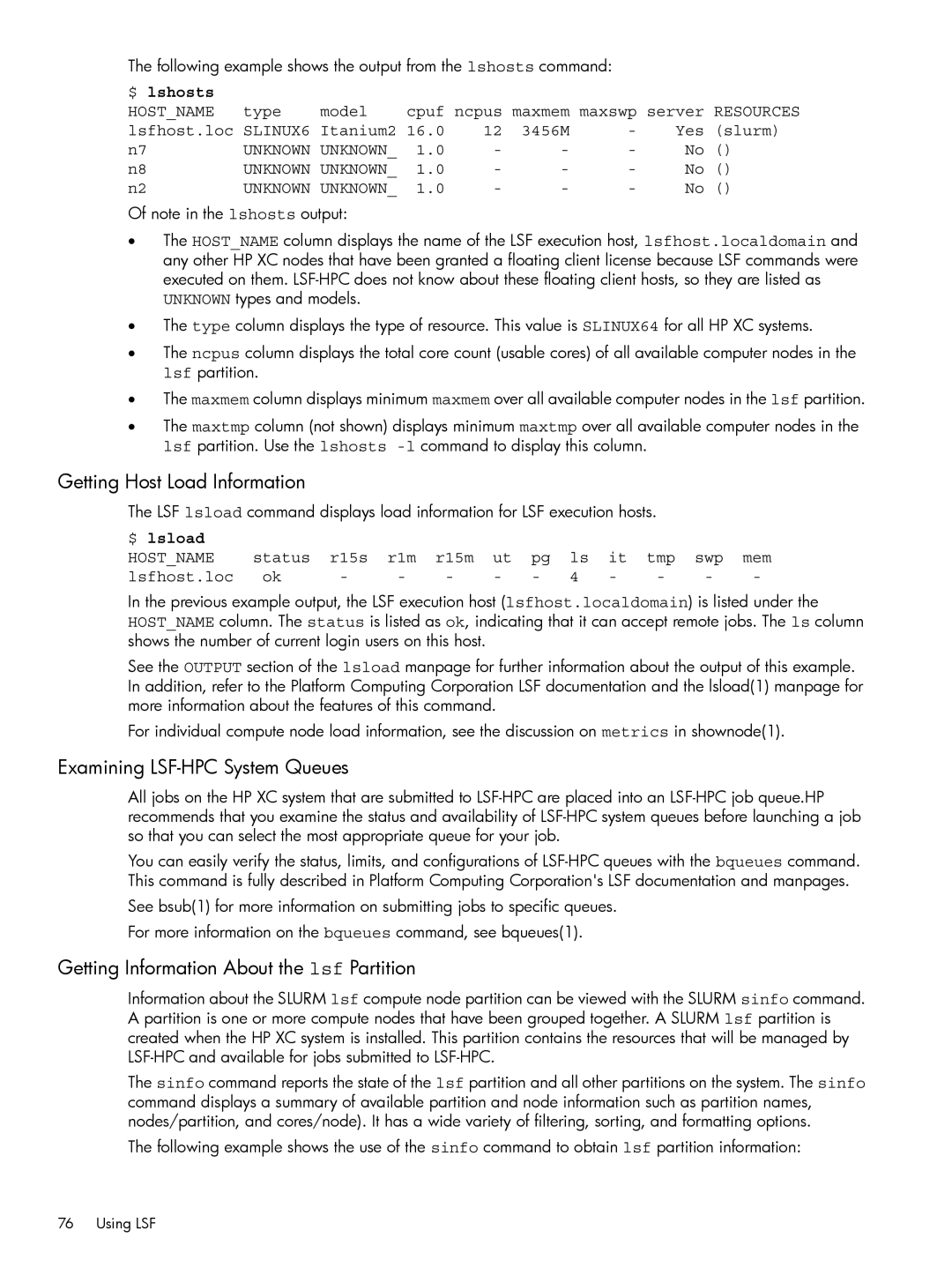

The following example shows the output from the lshosts command:

$ lshosts

| HOST_NAME | type | model | cpuf | ncpus | maxmem | maxswp | server | RESOURCES |
|---|---|---|---|---|---|---|---|---|
| lsfhost.loc | SLINUX6 | Itanium2 | 16.0 | 12 | 3456M | - | Yes | (slurm) |
| n7 | UNKNOWN | UNKNOWN_ | 1.0 | - | - | - | No | () |
| n8 | UNKNOWN | UNKNOWN_ | 1.0 | - | - | - | No | () |
| n2 | UNKNOWN | UNKNOWN_ | 1.0 | - | - | - | No | () |

Of note in the lshosts output:

• The HOST_NAME column displays the name of the LSF execution host, lsfhost.localdomain, and any other HP XC nodes that have been granted a floating client license because LSF commands were executed on them.

• The type column displays the type of resource. This value is SLINUX64 for all HP XC systems; the example output shows it truncated to SLINUX6.

• The ncpus column displays the total core count (usable cores) of all available compute nodes in the lsf partition.

• The maxmem column displays the minimum maxmem value over all available compute nodes in the lsf partition.

• The maxtmp column (not shown) displays the minimum maxtmp value over all available compute nodes in the lsf partition. Use the lshosts
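The columns described above can be read programmatically. The following is a minimal Python sketch, not part of LSF, that assumes lshosts prints whitespace-separated columns with a single header row. The sample text is transcribed from the example output above, and the function name parse_lshosts is hypothetical.

```python
# Sample text transcribed from the lshosts example above (assumed layout:
# one header row, then one whitespace-separated row per host).
SAMPLE_LSHOSTS = """\
HOST_NAME      type    model  cpuf ncpus maxmem maxswp server RESOURCES
lsfhost.loc SLINUX6 Itanium2  16.0    12  3456M      -    Yes (slurm)
n7          UNKNOWN UNKNOWN_   1.0     -      -      -     No ()
n8          UNKNOWN UNKNOWN_   1.0     -      -      -     No ()
n2          UNKNOWN UNKNOWN_   1.0     -      -      -     No ()
"""


def parse_lshosts(text):
    """Return one dict per host, keyed by the column names in the header."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    header = lines[0].split()
    rows = []
    for ln in lines[1:]:
        # Limit the split so that everything after the server column
        # stays together as the RESOURCES field.
        fields = ln.split(None, len(header) - 1)
        rows.append(dict(zip(header, fields)))
    return rows


hosts = parse_lshosts(SAMPLE_LSHOSTS)

# The execution host is the entry with server == "Yes"; the others are
# nodes holding a floating client license.
servers = [h["HOST_NAME"] for h in hosts if h["server"] == "Yes"]
clients = [h["HOST_NAME"] for h in hosts if h["server"] == "No"]

print("execution host(s):", servers)
print("client node(s):", clients)
print("total usable cores:", hosts[0]["ncpus"])
```

On a live system the same function could be given the captured output of the lshosts command instead of the sample text. Values remain strings because a dash marks a field that is not reported.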

Getting Host Load Information

The LSF lsload command displays load information for LSF execution hosts.

$ lsload

| HOST_NAME | status | r15s | r1m | r15m | ut | pg | ls | it | tmp | swp | mem |
|---|---|---|---|---|---|---|---|---|---|---|---|
| lsfhost.loc | ok | - | - | - | - | - | 4 | - | - | - | - |

In the previous example output, the LSF execution host (lsfhost.localdomain) is listed under the HOST_NAME column. The status is listed as ok, indicating that it can accept remote jobs. The ls column shows the number of users currently logged in to this host.

See the OUTPUT section of the lsload manpage for further information about the output of this example. In addition, refer to the Platform Computing Corporation LSF documentation and the lsload(1) manpage for more information about the features of this command.

For individual compute node load information, see the discussion on metrics in shownode(1).
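As an illustration of reading the lsload output described in this section, the following minimal Python sketch checks the status and ls columns. It assumes whitespace-separated columns with a single header row. The sample text is transcribed from the example output in this section, and the helper names parse_lsload, accepts_jobs, and as_int are hypothetical.

```python
# Sample text transcribed from the lsload example in this section.
SAMPLE_LSLOAD = """\
HOST_NAME    status r15s r1m r15m ut pg ls it tmp swp mem
lsfhost.loc      ok    -   -    -  -  -  4  -   -   -   -
"""


def parse_lsload(text):
    """Return one dict per host, keyed by the column names in the header."""
    lines = [ln.split() for ln in text.splitlines() if ln.strip()]
    header, rows = lines[0], lines[1:]
    return [dict(zip(header, row)) for row in rows]


def accepts_jobs(entry):
    """A status of ok indicates that the host can accept remote jobs."""
    return entry["status"] == "ok"


def as_int(value):
    """Convert a plain integer field; a dash means no value is reported."""
    return None if value == "-" else int(value)


load = parse_lsload(SAMPLE_LSLOAD)
for entry in load:
    print(entry["HOST_NAME"],
          "accepts jobs:", accepts_jobs(entry),
          "login users:", as_int(entry["ls"]))
```

The as_int helper is only applied to the ls column here; other columns can carry units or percent signs on a live system and would need their own handling.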

Examining LSF-HPC System Queues

All jobs on the HP XC system that are submitted to

You can easily verify the status, limits, and configurations of

See bsub(1) for more information on submitting jobs to specific queues.

For more information on the bqueues command, see bqueues(1).

Getting Information About the lsf Partition

Information about the SLURM lsf compute node partition can be viewed with the SLURM sinfo command. A partition is one or more compute nodes that have been grouped together. A SLURM lsf partition is created when the HP XC system is installed. This partition contains the resources that will be managed by

The sinfo command reports the state of the lsf partition and all other partitions on the system. It displays a summary of available partition and node information (such as partition names, nodes/partition, and cores/node), and it has a wide variety of filtering, sorting, and formatting options.

The following example shows the use of the sinfo command to obtain lsf partition information:
