• Use the bjobs command to monitor job status in

• Use the bqueues command to list the configured job queues in
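For illustration, the two commands above can be wrapped in a small shell function. This is a minimal sketch, not part of LSF: the function name lsf_status is hypothetical, and it assumes the LSF-HPC commands bqueues and bjobs are on PATH on the login node. The -a option makes bjobs also list recently finished jobs.

```shell
#!/bin/sh
# Hypothetical helper (not an LSF command): show queues and jobs.
# Assumption: the LSF-HPC commands bqueues and bjobs are on PATH.
lsf_status() {
    # Stop early with a clear message if LSF is not available.
    if ! command -v bjobs >/dev/null 2>&1; then
        echo "lsf_status: bjobs not found on PATH" >&2
        return 1
    fi
    bqueues      # list the configured job queues and their status
    bjobs -a     # list your jobs, including recently finished ones
}
```

After defining the function, run lsf_status on the login node.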

How LSF-HPC and SLURM Launch and Manage a Job

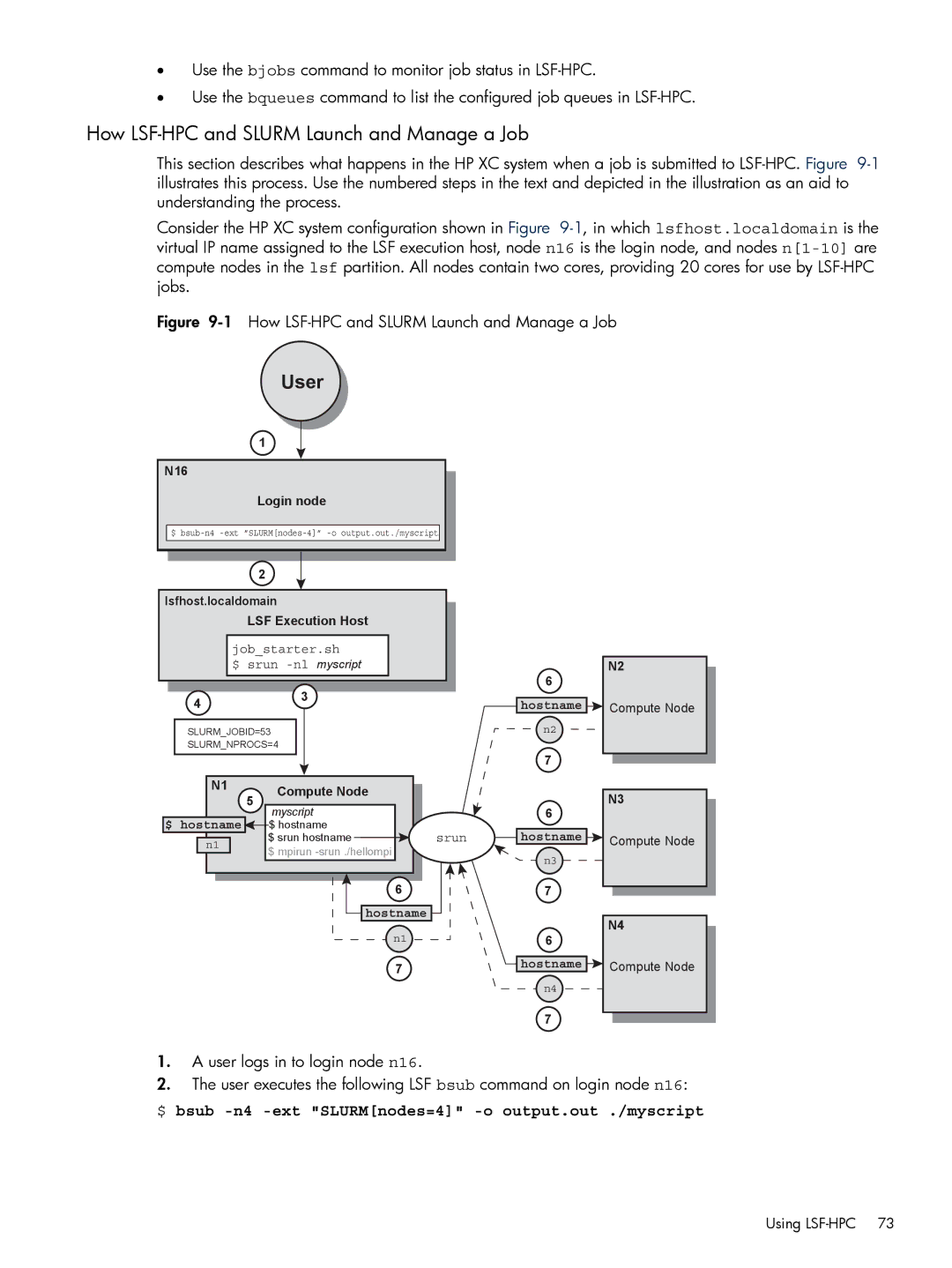

This section describes what happens in the HP XC system when a job is submitted to LSF-HPC.

Consider the HP XC system configuration shown in Figure 9-1.

Figure 9-1 How LSF-HPC and SLURM Launch and Manage a Job

[Figure 9-1 diagram: the user on login node n16 runs bsub; on the LSF execution host (lsfhost.localdomain), job_starter.sh runs srun with SLURM_JOBID=53 and SLURM_NPROCS=4; myscript runs on compute node n1 and runs hostname, srun hostname, and mpirun; hostname runs on compute nodes n1, n2, n3, and n4. Callouts 1 through 7 mark the steps described below.]
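Figure 9-1 labels myscript with the commands hostname, srun hostname, and mpirun. A minimal sketch of such a job script follows. Its contents are an assumption reconstructed from those labels, not the script used in this example, and it omits mpirun because the launch options depend on the MPI installation. It also assumes, as the figure indicates, that the job inherits SLURM_JOBID and SLURM_NPROCS from the SLURM allocation that LSF-HPC created; show_allocation is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical sketch of a job script like myscript in Figure 9-1.
# Assumption: SLURM_JOBID and SLURM_NPROCS are inherited from the
# SLURM allocation created for the LSF-HPC job.

show_allocation() {
    # Fall back to "unset" when run outside a SLURM allocation.
    echo "jobid=${SLURM_JOBID:-unset} nprocs=${SLURM_NPROCS:-unset}"
}

show_allocation
hostname                      # runs once, on the node where the script starts
if command -v srun >/dev/null 2>&1; then
    srun hostname             # srun runs hostname once per task in the allocation
fi
```

If this script were submitted with the bsub command in step 2, output.out should include the line jobid=53 nprocs=4 for the allocation shown in the figure.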

1. A user logs in to login node n16.

2. The user executes the following LSF bsub command on login node n16:

$ bsub -n4 -ext "SLURM[nodes=4]" -o output.out ./myscript

Using