Example

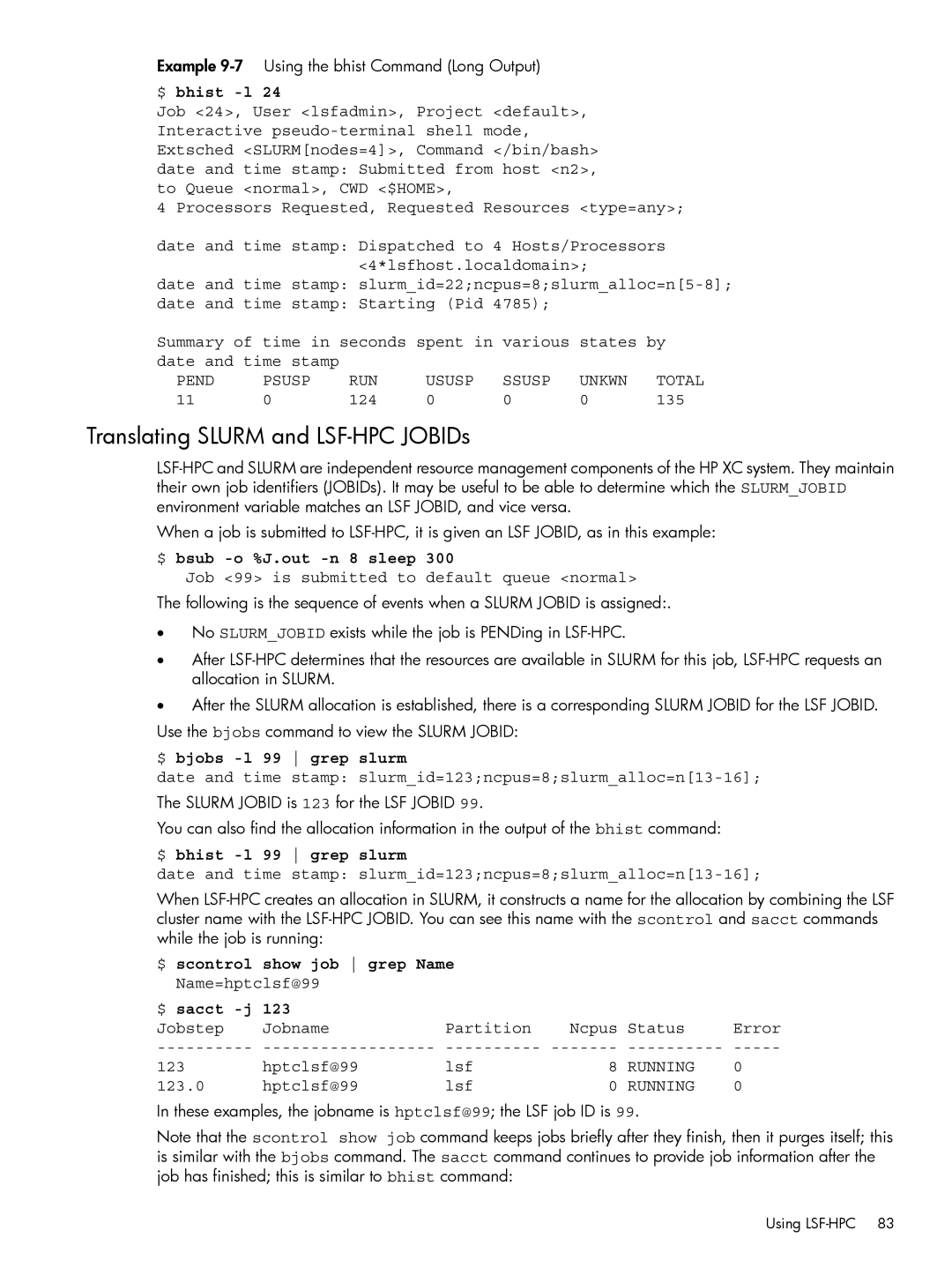

$ bhist -l 24

Job <24>, User <lsfadmin>, Project <default>, Interactive

4 Processors Requested, Requested Resources <type=any>;

date and time stamp: Dispatched to 4 Hosts/Processors <4*lsfhost.localdomain>;

date and time stamp:

date and time stamp: Starting (Pid 4785);

Summary of time in seconds spent in various states by date and time stamp

| PEND | PSUSP | RUN | USUSP | SSUSP | UNKWN | TOTAL |
|---|---|---|---|---|---|---|
| 11 | 0 | 124 | 0 | 0 | 0 | 135 |
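The TOTAL column is the sum of the per-state times: 11 + 0 + 124 + 0 + 0 + 0 = 135 seconds. The following is a minimal sketch that re-derives that total from a summary values row. The function name `check_bhist_total` is hypothetical. The sketch assumes the row holds the six state values followed by TOTAL, separated by whitespace or by the `|` characters shown above.

```shell
#!/bin/sh
# Hypothetical helper (not part of LSF): given one bhist summary values row,
# print the sum of the six state columns (PEND..UNKWN) and the reported TOTAL.
# Assumes the column order PEND PSUSP RUN USUSP SSUSP UNKWN TOTAL.
check_bhist_total() {
    # Drop any '|' separators, then let awk split the fields on whitespace
    printf '%s\n' "$1" | tr -d '|' | awk '{
        sum = 0
        for (i = 1; i <= 6; i++) sum += $i   # add up the six state columns
        printf "%d %d\n", sum, $7            # computed sum, then TOTAL column
    }'
}

# Row from the bhist -l 24 summary above (prints: 135 135)
check_bhist_total "11 | 0 | 124 | 0 | 0 | 0 | 135 |"
```

If the two printed numbers differ, the row was probably copied with a column missing.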

Translating SLURM and LSF-HPC JOBIDs

When a job is submitted to LSF-HPC, it is assigned an LSF JOBID, as in this example:

$ bsub -o %J.out -n 8 sleep 300

Job <99> is submitted to default queue <normal>
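The LSF JOBID can be pulled out of this confirmation message so that later commands do not need it typed by hand. The following is a minimal sketch. The function name `lsf_jobid_from_bsub` is hypothetical, and the sketch assumes the message has exactly the form shown above.

```shell
#!/bin/sh
# Hypothetical helper (not part of LSF): read bsub output on stdin and print
# the LSF JOBID from a line such as
# "Job <99> is submitted to default queue <normal>".
lsf_jobid_from_bsub() {
    sed -n 's/^Job <\([0-9][0-9]*\)> is submitted.*/\1/p'
}

# Example with the message shown above (prints 99):
printf '%s\n' 'Job <99> is submitted to default queue <normal>' | lsf_jobid_from_bsub
```

On a system running LSF-HPC, the same function could capture the ID at submission time, for example `jobid=$(bsub -o %J.out -n 8 sleep 300 | lsf_jobid_from_bsub)`. The ID could then be passed to `bjobs -l "$jobid"`.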

The following is the sequence of events when a SLURM JOBID is assigned:

•No SLURM_JOBID exists while the job is PENDing in LSF-HPC.

•After

•After the SLURM allocation is established, there is a corresponding SLURM JOBID for the LSF JOBID. Use the bjobs command to view the SLURM JOBID:

$ bjobs -l 99 | grep slurm

date and time stamp: slurm_id=123;ncpus=8;slurm_alloc=n[13-16];

The SLURM JOBID is 123 for the LSF JOBID 99.
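To script this lookup, the `slurm_id=` field can be extracted from the output. The following is a minimal sketch. The function name `slurm_id_from_lsf_output` is hypothetical. The sketch assumes the `slurm_id=<number>;` field is not split across a wrapped output line. If several lines match, it prints only the first value.

```shell
#!/bin/sh
# Hypothetical helper (not part of LSF or SLURM): read bjobs -l or bhist -l
# output on stdin and print the first slurm_id value found.
# Assumes "slurm_id=<number>;" appears unbroken on a single line.
slurm_id_from_lsf_output() {
    sed -n 's/.*slurm_id=\([0-9][0-9]*\);.*/\1/p' | head -n 1
}

# Example with the line shown above (prints 123):
printf '%s\n' 'date and time stamp: slurm_id=123;ncpus=8;slurm_alloc=n[13-16];' \
    | slurm_id_from_lsf_output
```

On a live system the input would come from a pipeline such as `bjobs -l 99 | slurm_id_from_lsf_output`.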

You can also find the allocation information in the output of the bhist command:

$ bhist -l 99 | grep slurm

date and time stamp:

When

$ scontrol show job | grep Name

Name=hptclsf@99

$ sacct

| Jobstep | Jobname | Partition | Ncpus | Status | Error |
|---|---|---|---|---|---|
| 123 | hptclsf@99 | lsf | 8 | RUNNING | 0 |
| 123.0 | hptclsf@99 | lsf | 0 | RUNNING | 0 |

In these examples, the job name is hptclsf@99 and the LSF JOBID is 99.
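Because the job name ends with `@` followed by the LSF JOBID, the LSF JOBID can be recovered from the SLURM side using the name alone. The following is a minimal sketch. The function name `lsf_jobid_from_slurm_name` is hypothetical. The sketch assumes every such job name has the form `prefix@JOBID`; only the single example hptclsf@99 is shown here.

```shell
#!/bin/sh
# Hypothetical helper (not part of SLURM or LSF): given a SLURM job name such
# as hptclsf@99, print the LSF JOBID that follows the last '@'.
# Returns 1 (and prints nothing) if the name is not of the form prefix@digits.
lsf_jobid_from_slurm_name() {
    name=$1
    case $name in
        *@*) ;;                    # the name must contain an '@'
        *) return 1 ;;
    esac
    id=${name##*@}                 # drop everything up to and including the last '@'
    case $id in
        ''|*[!0-9]*) return 1 ;;   # the suffix must be a non-empty run of digits
    esac
    printf '%s\n' "$id"
}

lsf_jobid_from_slurm_name hptclsf@99    # prints 99
```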

Note that the scontrol show job command retains information about a job only briefly after it finishes and then purges it; this is similar to the bjobs command. The sacct command continues to provide job information after the job has finished; this is similar to the bhist command:

Using