Getting Information About Jobs

There are several ways you can get information about a specific job after it has been submitted to

The following LSF commands are described in this section:

bjobs "Examining the Status of a Job"

bhist "Viewing the Historical Information for a Job"

Getting Job Allocation Information

Before a job runs,

A job allocation information string looks like the following:

slurm_id=slurm_jobid;ncpus=slurm_nprocs;slurm_alloc=node_list

This allocation string has the following values:

slurm_id | SLURM_JOBID environment variable. This is the SLURM allocation ID (associates the job with SLURM-allocated resources). |

ncpus | SLURM_NPROCS environment variable. This is the actual number of allocated cores. Under |

slurm_alloc | Allocated node list (comma separated). |
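
As a rough illustration of the string format described above, the following Python sketch splits an allocation string into its three fields. The function name parse_allocation and the sample values are hypothetical and do not come from LSF or SLURM. The sketch also assumes that the node list is a plain comma-separated list of node names, as stated in the table.

```python
def parse_allocation(info):
    """Split a 'key=value;key=value' allocation string into a dict.

    Hypothetical helper for illustration; not part of LSF or SLURM.
    """
    fields = {}
    # Tolerate surrounding whitespace and a trailing semicolon.
    for part in info.strip().strip(";").split(";"):
        if not part:
            continue
        key, _, value = part.partition("=")
        fields[key.strip()] = value.strip()

    alloc = fields.get("slurm_alloc", "")
    return {
        "slurm_id": fields.get("slurm_id"),
        "ncpus": int(fields["ncpus"]) if "ncpus" in fields else None,
        # Assumes plain comma-separated node names.
        "nodes": alloc.split(",") if alloc else [],
    }


# Sample values are made up for this example.
sample = "slurm_id=101;ncpus=4;slurm_alloc=n1,n2"
print(parse_allocation(sample))
```

Keeping slurm_id as a string and converting only ncpus to an integer is a design choice in this sketch: the allocation ID is an identifier, while the core count is a quantity a script may want to compare or sum.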

When

Job Allocation Information for a Running Job

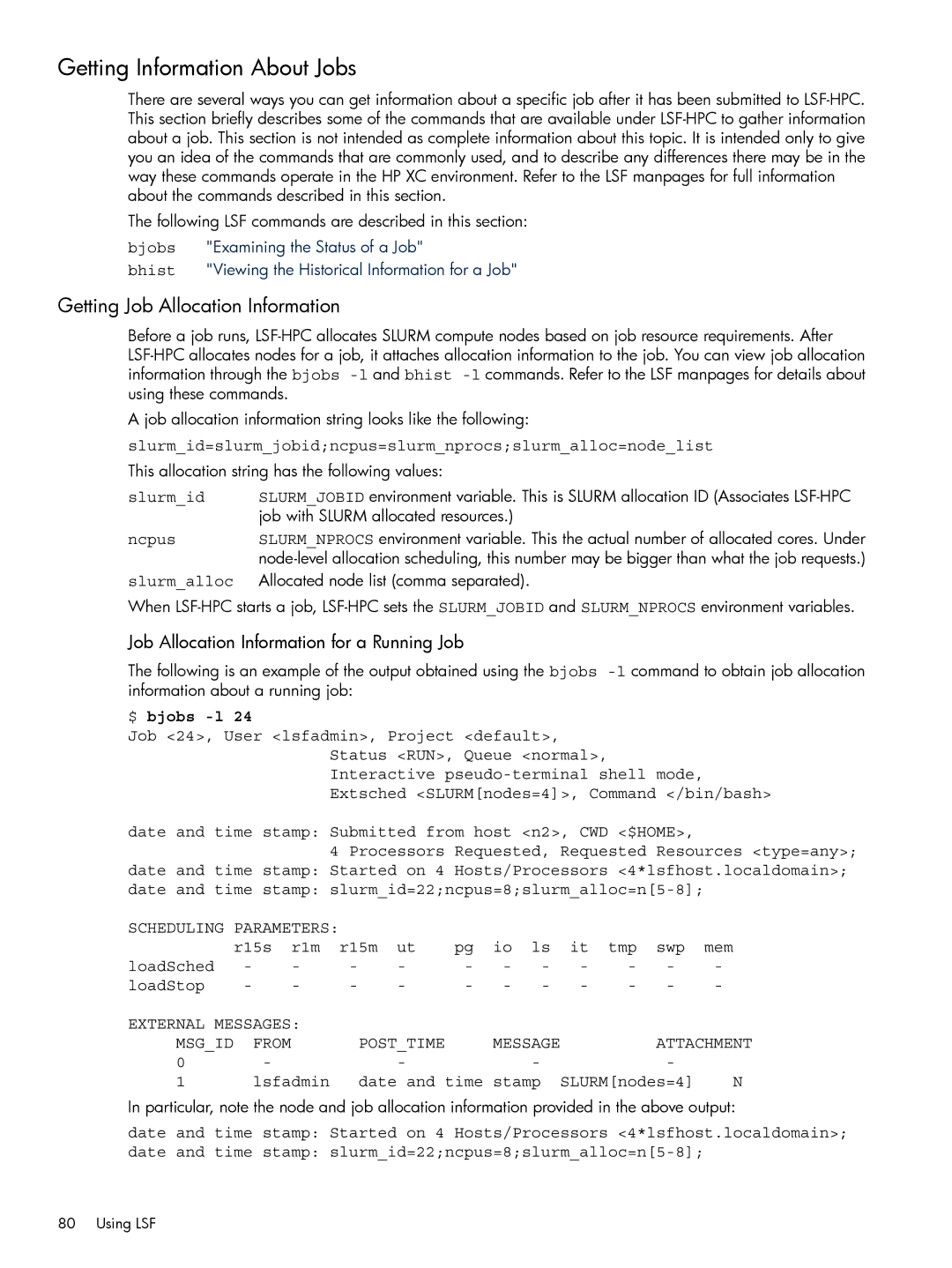

The following is an example of the output obtained using the bjobs -l command:

$ bjobs -l 24

Job <24>, User <lsfadmin>, Project <default>, Status <RUN>, Queue <normal>, Interactive

date and time stamp: Submitted from host <n2>, CWD <$HOME>, 4 Processors Requested, Requested Resources <type=any>;

date and time stamp: Started on 4 Hosts/Processors <4*lsfhost.localdomain>;

date and time stamp:

SCHEDULING PARAMETERS:

| r15s | r1m | r15m | ut | pg | io | ls | it | tmp | swp | mem |

loadSched | - | - | - | - | - | - | - | - | - | - | - |

loadStop | - | - | - | - | - | - | - | - | - | - | - |

EXTERNAL MESSAGES:

MSG_ID | FROM | POST_TIME | MESSAGE | ATTACHMENT |

0 | - | - | - | - |

1 | lsfadmin | date and time stamp | SLURM[nodes=4] | N |

In particular, note the node and job allocation information provided in the above output:

date and time stamp: Started on 4 Hosts/Processors <4*lsfhost.localdomain>;

date and time stamp:
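
A script that needs the "Started on" details highlighted above could pull them out of the command output with a regular expression. The following is a minimal sketch, not an LSF interface: the function name find_started_on is hypothetical, and the sketch assumes the "Started on" phrase appears on a single line in the form shown in the example output.

```python
import re

# Matches text such as: Started on 4 Hosts/Processors <4*lsfhost.localdomain>
STARTED_RE = re.compile(r"Started on (\d+) Hosts/Processors <([^>]*)>")


def find_started_on(bjobs_output):
    """Return (count, host_spec) from bjobs -l text, or None if absent.

    Hypothetical helper; assumes the phrase is not wrapped across lines.
    """
    match = STARTED_RE.search(bjobs_output)
    if match is None:
        return None
    return int(match.group(1)), match.group(2)


# Line copied from the example output in this section.
line = "date and time stamp: Started on 4 Hosts/Processors <4*lsfhost.localdomain>;"
print(find_started_on(line))
```

The text passed to the function would typically be the captured output of bjobs -l for the job of interest. If the output wraps long lines, the wrapped lines would need to be joined before matching.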
