Preemption

Determining the LSF Execution Host

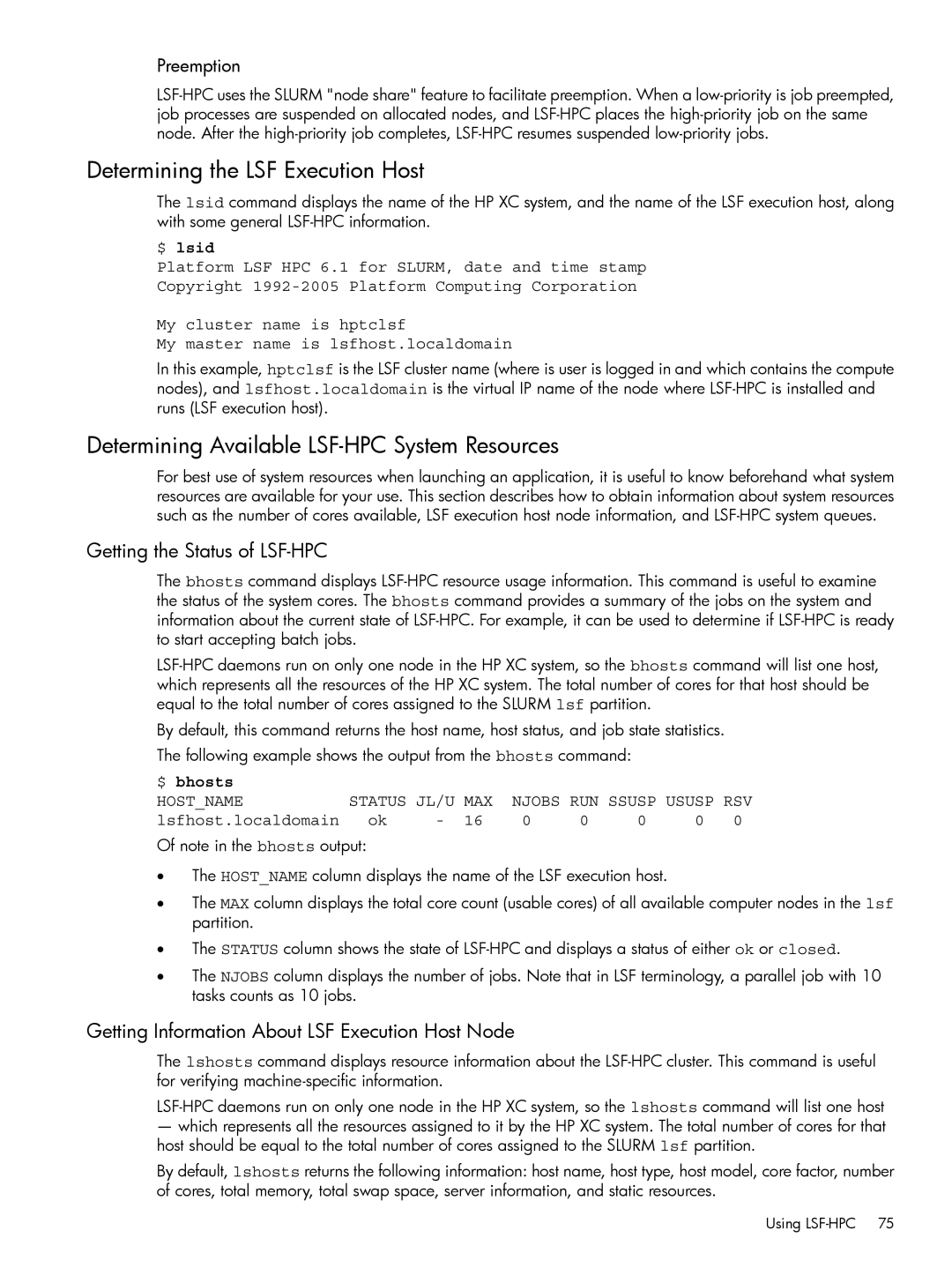

The lsid command displays the name of the HP XC system, and the name of the LSF execution host, along with some general

$ lsid

Platform LSF HPC 6.1 for SLURM, date and time stamp

Copyright

My cluster name is hptclsf

My master name is lsfhost.localdomain

In this example, hptclsf is the LSF cluster name (where the user is logged in and which contains the compute nodes), and lsfhost.localdomain is the virtual IP name of the node where
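When the cluster and execution host names are needed in a script, they can be pulled out of the lsid output. The following is a minimal sketch, not part of LSF-HPC: the function name `parse_lsid` is hypothetical, and the sample text is the example output shown above rather than output captured from a live system.

```python
import re


def parse_lsid(text):
    """Return the cluster and master names found in lsid output.

    Hypothetical helper: it only looks for the two "My ... name is"
    lines shown in the example and ignores everything else.
    """
    info = {}
    for line in text.splitlines():
        match = re.match(r"My (cluster|master) name is (\S+)", line.strip())
        if match:
            info[match.group(1)] = match.group(2)
    return info


# Sample text copied from the example above (not live output).
sample = """\
Platform LSF HPC 6.1 for SLURM, date and time stamp
Copyright
My cluster name is hptclsf
My master name is lsfhost.localdomain
"""

names = parse_lsid(sample)
print(names["cluster"], names["master"])
```

On a system where lsid is installed, the sample string could be replaced with the text captured from the command, for example with Python's `subprocess.run(["lsid"], capture_output=True, text=True).stdout`.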

Determining Available LSF-HPC System Resources

For best use of system resources when launching an application, it is useful to know beforehand what system resources are available for your use. This section describes how to obtain information about system resources such as the number of cores available, LSF execution host node information, and

Getting the Status of LSF-HPC

The bhosts command displays

By default, this command returns the host name, host status, and job state statistics.

The following example shows the output from the bhosts command:

$ bhosts

| HOST_NAME | STATUS | JL/U | MAX | NJOBS | RUN | SSUSP | USUSP | RSV |
|---|---|---|---|---|---|---|---|---|
| lsfhost.localdomain | ok | - | 16 | 0 | 0 | 0 | 0 | 0 |

Of note in the bhosts output:

• The HOST_NAME column displays the name of the LSF execution host.

• The MAX column displays the total core count (usable cores) of all available compute nodes in the lsf partition.

• The STATUS column shows the state of

• The NJOBS column displays the number of jobs. Note that in LSF terminology, a parallel job with 10 tasks counts as 10 jobs.
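The MAX and NJOBS columns can be combined to estimate how many cores are currently unused. The sketch below is an illustration under stated assumptions, not an LSF-HPC tool: the helper names `parse_bhosts` and `free_cores` are hypothetical, the sample text reproduces the example row above, and "MAX minus NJOBS" is only a rough estimate because NJOBS also counts suspended and reserved jobs.

```python
def parse_bhosts(text):
    """Split whitespace-separated bhosts output into one dict per host.

    Hypothetical helper: assumes the default column layout, with a
    header line followed by one line per host.
    """
    lines = [line for line in text.splitlines() if line.strip()]
    header = lines[0].split()
    return [dict(zip(header, line.split())) for line in lines[1:]]


def free_cores(row):
    """Estimate unused cores as MAX minus NJOBS.

    Returns None when MAX is "-", which is assumed here to mean that
    no limit is reported for the host.
    """
    if row["MAX"] == "-":
        return None
    return int(row["MAX"]) - int(row["NJOBS"])


# Sample text reproducing the example row above (not live output).
sample = """\
HOST_NAME           STATUS  JL/U  MAX  NJOBS  RUN  SSUSP  USUSP  RSV
lsfhost.localdomain ok      -     16   0      0    0      0      0
"""

rows = parse_bhosts(sample)
for row in rows:
    print(row["HOST_NAME"], row["STATUS"], free_cores(row))
```

With the example row, all 16 cores are reported as free because NJOBS is 0. Because a parallel job with 10 tasks counts as 10 jobs, the same calculation on a busy system drops by the task count, not by the number of submitted jobs.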

Getting Information About LSF Execution Host Node

The lshosts command displays resource information about the

—which represents all the resources assigned to it by the HP XC system. The total number of cores for that host should be equal to the total number of cores assigned to the SLURM lsf partition.

By default, lshosts returns the following information: host name, host type, host model, core factor, number of cores, total memory, total swap space, server information, and static resources.

Using