Software Optimization Guide for AMD64 Processors | 25112 Rev. 3.06 September 2005 |

•The size of each row is an integer number of cache lines.

A set of eight rows of A is dotted in pairs of four with BT, and prefetches in each iteration of the Ctr_row_num

•The cache line (or set of eight

•One quarter of the next row of BT.
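The cache-line arithmetic behind these prefetches can be checked with a short sketch. It assumes a 64-byte cache line and 8-byte double-precision values; the macro CACHE_LINE_BYTES and the helpers doubles_per_line and lines_per_row are hypothetical names used only for illustration and do not appear in the listing that follows.

```c
#include <assert.h>
#include <stddef.h>

/* Assumption: 64-byte cache lines; adjust for other processors. */
#define CACHE_LINE_BYTES 64u

/* Number of double-precision values that fit in one cache line. */
static size_t doubles_per_line(void)
{
    return CACHE_LINE_BYTES / sizeof(double);
}

/* Number of cache lines occupied by one row of `dim` doubles.
   The row size must be an integer number of cache lines. */
static size_t lines_per_row(size_t dim)
{
    size_t row_bytes = dim * sizeof(double);
    assert(row_bytes % CACHE_LINE_BYTES == 0);
    return row_bytes / CACHE_LINE_BYTES;
}
```

Under these assumptions, doubles_per_line() is 8 and lines_per_row(32) is 4, so one quarter of a 32-element row corresponds to exactly one cache line.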

Including the prefetch to the rows of BT increases performance by about 16%. Prefetching the elements of CT increases performance by about an additional 3%.

Follow these guidelines when working with

•Arrange your code with enough instructions between prefetches so that there is adequate time for the data to be retrieved.

•Make sure the data that you are prefetching fits into the L1 data cache and does not displace other data that is also being operated upon. For instance, choosing a larger matrix size might displace A if all three matrices cannot fit into the

•Operate on data in chunks that are integer multiples of cache lines.
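The guidelines above can be sketched in a few lines of C. This is a minimal illustration, not the routine from this guide: it assumes a 64-byte cache line, uses the GCC/Clang builtin __builtin_prefetch in place of a processor-specific prefetch instruction, and the function name dot_with_prefetch and the macro DOUBLES_PER_LINE are hypothetical. One prefetch is issued per cache line of work, and the data is processed in whole cache lines.

```c
#include <assert.h>
#include <stddef.h>

/* Assumption: 64-byte cache line / 8-byte double = 8 doubles per line. */
#define DOUBLES_PER_LINE 8

/* Computes the dot product of a[0..n-1] and bt_row[0..n-1] while
   prefetching bt_next[0..n-1], the row needed by the next call.
   n must be a multiple of DOUBLES_PER_LINE. */
static double dot_with_prefetch(const double *a, const double *bt_row,
                                const double *bt_next, size_t n)
{
    double total = 0.0;

    assert(n % DOUBLES_PER_LINE == 0);

    for (size_t i = 0; i < n; i += DOUBLES_PER_LINE) {
        /* Request one cache line of the next row (read access, keep in
           cache), then do a full line's worth of arithmetic before the
           next request is issued. */
        __builtin_prefetch(bt_next + i, 0, 3);

        for (size_t j = 0; j < DOUBLES_PER_LINE; ++j)
            total += a[i + j] * bt_row[i + j];
    }
    return total;
}
```

The amount of work placed between prefetches here is illustrative only. The spacing that hides memory latency depends on the processor and the memory system, so it should be tuned by measurement.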

Examples

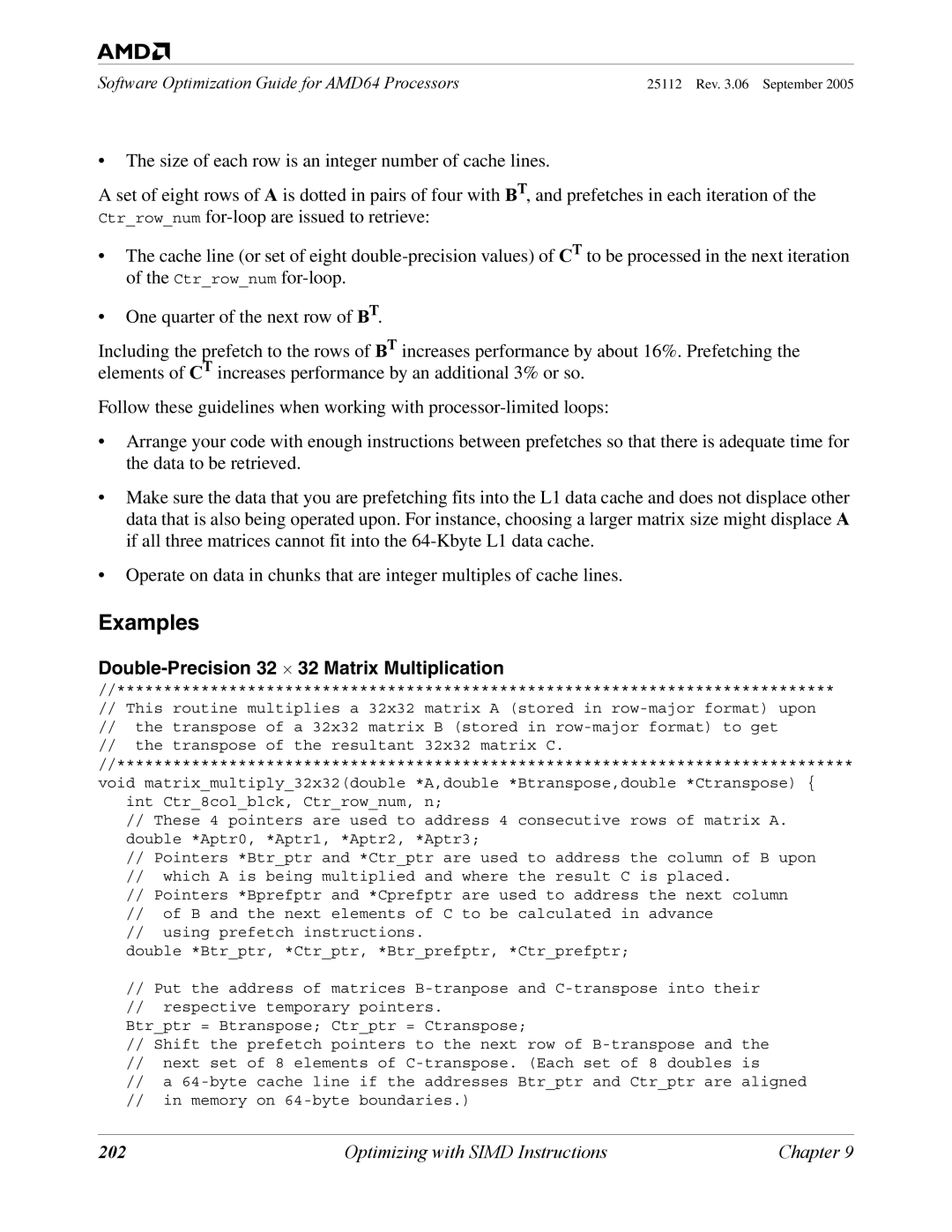

Double-Precision 32 × 32 Matrix Multiplication

//*****************************************************************************

//This routine multiplies a 32x32 matrix A (stored in

//the transpose of a 32x32 matrix B (stored in

//the transpose of the resultant 32x32 matrix C.

//*******************************************************************************

void matrix_multiply_32x32(double *A,double *Btranspose,double *Ctranspose) {

int Ctr_8col_blck, Ctr_row_num, n;

//These 4 pointers are used to address 4 consecutive rows of matrix A.

double *Aptr0, *Aptr1, *Aptr2, *Aptr3;

//Pointers *Btr_ptr and *Ctr_ptr are used to address the column of B upon

//which A is being multiplied and where the result C is placed.

//Pointers *Btr_prefptr and *Ctr_prefptr are used to address the next column

//of B and the next elements of C to be calculated in advance

//using prefetch instructions.

double *Btr_ptr, *Ctr_ptr, *Btr_prefptr, *Ctr_prefptr;

//Put the address of matrices

//respective temporary pointers.

Btr_ptr = Btranspose;

Ctr_ptr = Ctranspose;

//Shift the prefetch pointers to the next row of

//next set of 8 elements of

//a

//in memory on
