Software Optimization Guide for AMD64 Processors | 25112 Rev. 3.06 September 2005 |

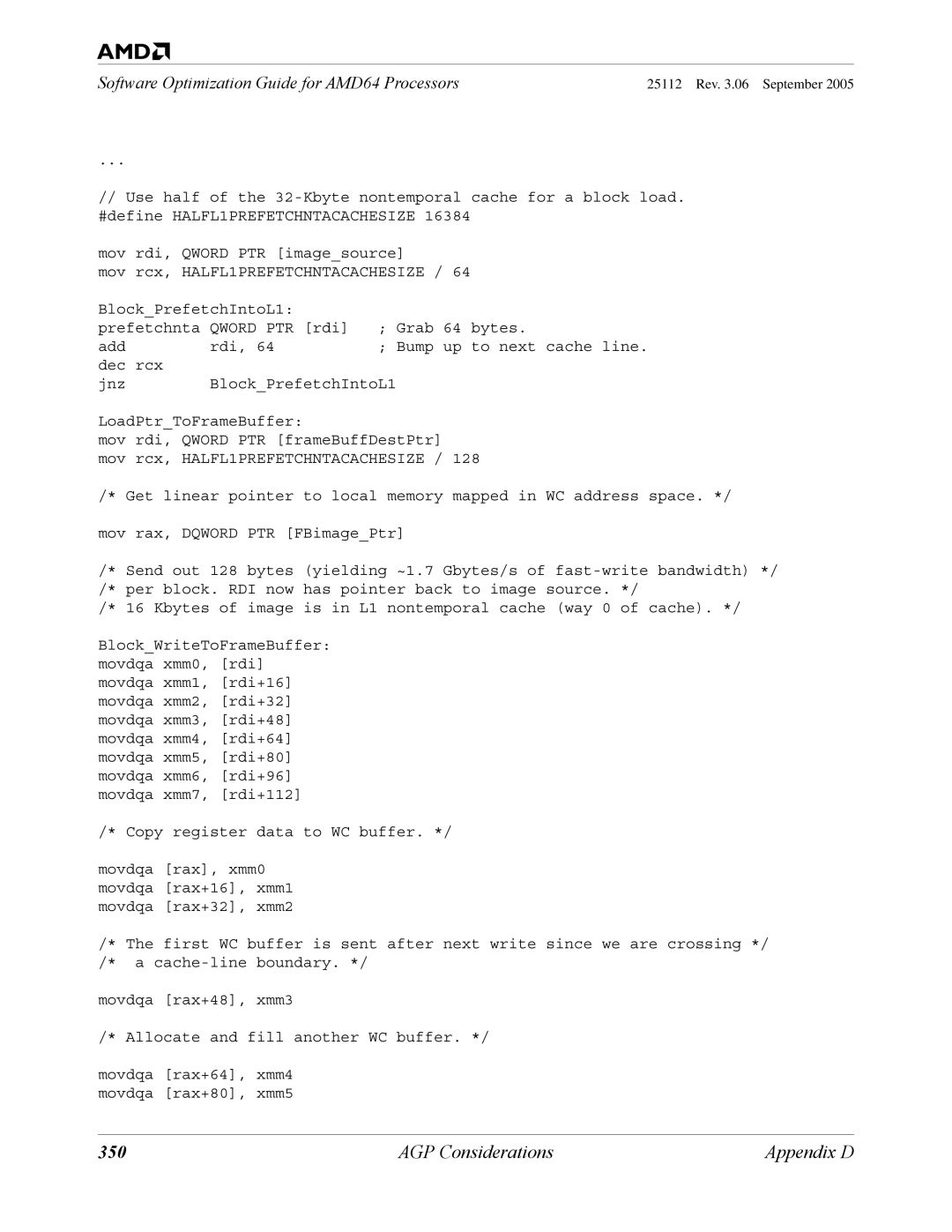

...

```asm
; Use half of the

    mov     rdi, QWORD PTR [image_source]
    mov     rcx, HALFL1PREFETCHNTACACHESIZE / 64

Block_PrefetchIntoL1:
    prefetchnta QWORD PTR [rdi]     ; Grab 64 bytes.
    add     rdi, 64                 ; Bump up to next cache line.
    dec     rcx
    jnz     Block_PrefetchIntoL1
```
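The prefetch loop above can be sketched in C with the SSE `_mm_prefetch` intrinsic and the `_MM_HINT_NTA` hint, which x86 compilers emit as PREFETCHNTA. This is a minimal sketch under stated assumptions, not AMD's code. `HALF_L1_NTA_BYTES` is a hypothetical stand-in for `HALFL1PREFETCHNTACACHESIZE`, set to 16 KB on the assumption that it matches the "16 Kbytes of image" mentioned later in the listing. `block_prefetch_nta` is a hypothetical helper name.

```c
#include <stddef.h>
#include <xmmintrin.h>   /* _mm_prefetch, _MM_HINT_NTA */

/* Hypothetical stand-in for HALFL1PREFETCHNTACACHESIZE (assumed 16 KB). */
#define HALF_L1_NTA_BYTES (16u * 1024u)
#define CACHE_LINE_BYTES  64u

/* Issue one PREFETCHNTA per 64-byte cache line of src, mirroring
   Block_PrefetchIntoL1. Returns the number of prefetch requests issued. */
static size_t block_prefetch_nta(const void *src, size_t bytes)
{
    const char *p = (const char *)src;
    size_t lines = bytes / CACHE_LINE_BYTES;   /* like rcx = size / 64 */

    for (size_t i = 0; i < lines; ++i) {
        _mm_prefetch(p, _MM_HINT_NTA);          /* grab 64 bytes */
        p += CACHE_LINE_BYTES;                  /* bump to next cache line */
    }
    return lines;
}
```

A prefetch is only a hint and does not change the data, so the sketch returns the request count instead. For a 16-KB block that count is 256, which is the value the listing loads into `rcx` under the same 16-KB assumption.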

```asm
LoadPtr_ToFrameBuffer:
    mov     rdi, QWORD PTR [frameBuffDestPtr]
    mov     rcx, HALFL1PREFETCHNTACACHESIZE / 128

    ; Get linear pointer to local memory mapped in WC address space.
    mov     rax, QWORD PTR [FBimage_Ptr]

    ; Send out 128 bytes (yielding ~1.7 Gbytes/s of
    ; 16 Kbytes of image is in L1 nontemporal cache (way 0 of cache).
```
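The write loop that follows reads each 128-byte block into eight XMM registers and then stores the registers to the WC-mapped destination. Below is a C sketch of the same load-then-store pattern using the SSE2 aligned load/store intrinsics `_mm_load_si128` and `_mm_store_si128` (MOVDQA). It rests on the same assumptions as before. `HALF_L1_NTA_BYTES` is a hypothetical 16-KB stand-in for `HALFL1PREFETCHNTACACHESIZE`, so the loop runs 16384 / 128 = 128 times. `copy_blocks_128` is a hypothetical helper. The sketch copies ordinary memory and does not map a frame buffer or set up write-combining. Unlike the assembly listing, it also leaves a C compiler free to reorder or merge the stores.

```c
#include <stddef.h>
#include <emmintrin.h>   /* SSE2: __m128i, _mm_load_si128, _mm_store_si128 */

/* Hypothetical stand-in for HALFL1PREFETCHNTACACHESIZE (assumed 16 KB). */
#define HALF_L1_NTA_BYTES (16u * 1024u)
#define BLOCK_BYTES       128u

/* Copy bytes from src to dst in 128-byte blocks, following the shape of
   Block_WriteToFrameBuffer: eight aligned 16-byte loads (xmm0..xmm7),
   then eight aligned 16-byte stores in ascending address order.
   Both pointers must be 16-byte aligned. A tail shorter than 128 bytes
   is not copied. Returns the number of 128-byte blocks copied. */
static size_t copy_blocks_128(void *dst, const void *src, size_t bytes)
{
    const __m128i *s = (const __m128i *)src;
    __m128i *d = (__m128i *)dst;
    size_t blocks = bytes / BLOCK_BYTES;        /* like rcx = size / 128 */

    for (size_t b = 0; b < blocks; ++b) {
        /* Load 128 bytes of source into eight registers. */
        __m128i r0 = _mm_load_si128(s + 0);
        __m128i r1 = _mm_load_si128(s + 1);
        __m128i r2 = _mm_load_si128(s + 2);
        __m128i r3 = _mm_load_si128(s + 3);
        __m128i r4 = _mm_load_si128(s + 4);
        __m128i r5 = _mm_load_si128(s + 5);
        __m128i r6 = _mm_load_si128(s + 6);
        __m128i r7 = _mm_load_si128(s + 7);

        /* Store them back out; in source order, the first four stores
           cover one 64-byte line and the last four cover the next. */
        _mm_store_si128(d + 0, r0);
        _mm_store_si128(d + 1, r1);
        _mm_store_si128(d + 2, r2);
        _mm_store_si128(d + 3, r3);
        _mm_store_si128(d + 4, r4);
        _mm_store_si128(d + 5, r5);
        _mm_store_si128(d + 6, r6);
        _mm_store_si128(d + 7, r7);

        s += 8;                                  /* advance 128 bytes */
        d += 8;
    }
    return blocks;
}
```

Grouping all eight loads ahead of the stores mirrors the listing, where the source is read from the prefetched L1 lines before any data is written to the WC destination.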

```asm
Block_WriteToFrameBuffer:
    movdqa  xmm0, [rdi]
    movdqa  xmm1, [rdi+16]
    movdqa  xmm2, [rdi+32]
    movdqa  xmm3, [rdi+48]
    movdqa  xmm4, [rdi+64]
    movdqa  xmm5, [rdi+80]
    movdqa  xmm6, [rdi+96]
    movdqa  xmm7, [rdi+112]

    ; Copy register data to WC buffer.
    movdqa  [rax], xmm0
    movdqa  [rax+16], xmm1
    movdqa  [rax+32], xmm2

    ; The first WC buffer is sent after next write since we are crossing
    ; a
    movdqa  [rax+48], xmm3

    ; Allocate and fill another WC buffer.
    movdqa  [rax+64], xmm4
    movdqa  [rax+80], xmm5
```

350 | AGP Considerations | Appendix D |