$ sacct |

|

|

|

| |

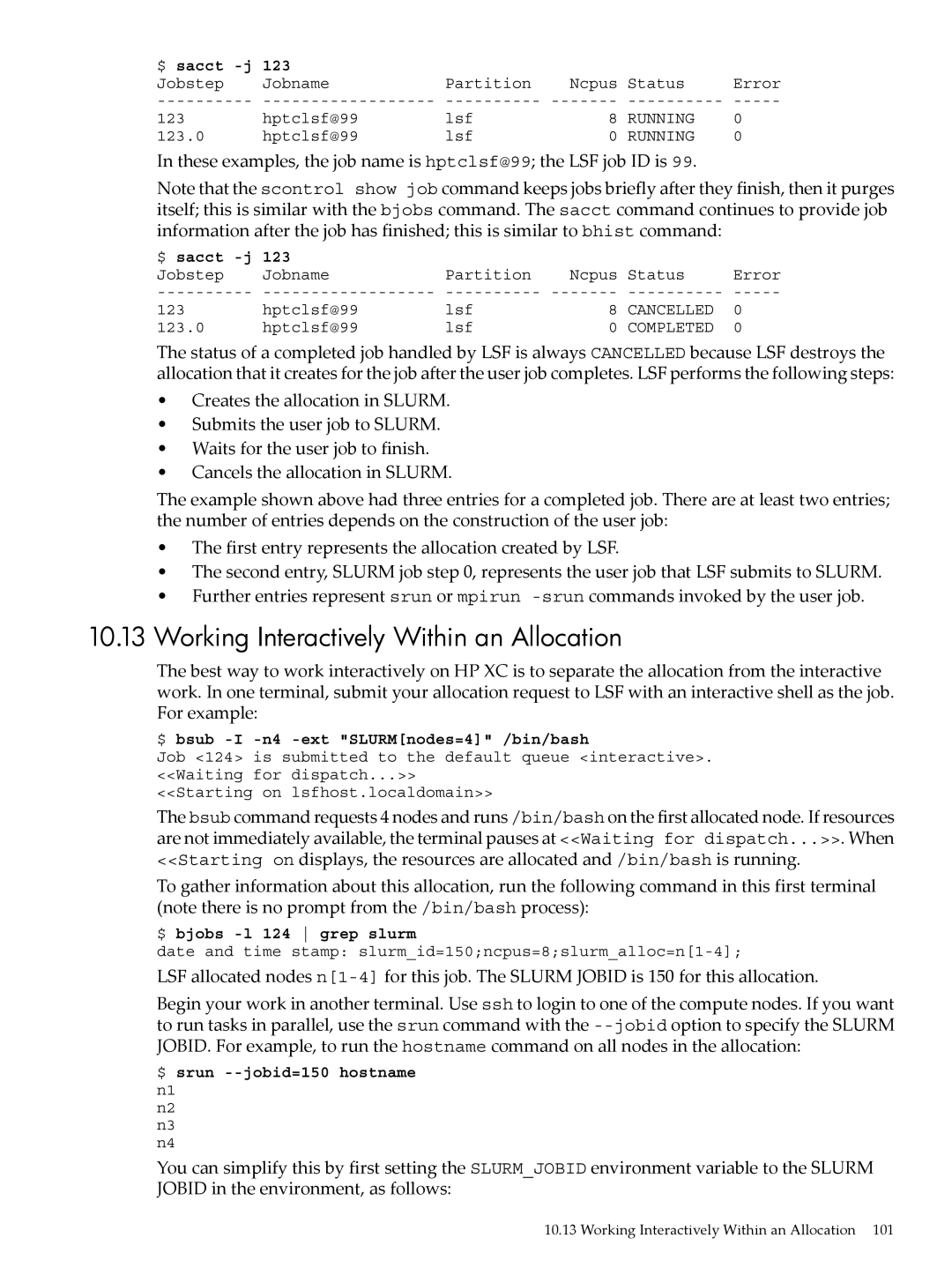

Jobstep | Jobname | Partition | Ncpus | Status | Error |

123 | hptclsf@99 | lsf | 8 | RUNNING | 0 |

123.0 | hptclsf@99 | lsf | 0 | RUNNING | 0 |

In these examples, the job name is hptclsf@99; the LSF job ID is 99.

Note that the scontrol show job command keeps job records only briefly after the jobs finish. SLURM then purges them, which is similar to the behavior of the bjobs command. The sacct command continues to provide job information after the job has finished, which is similar to the bhist command:

$ sacct

| Jobstep | Jobname | Partition | Ncpus | Status | Error |
| --- | --- | --- | --- | --- | --- |
| 123 | hptclsf@99 | lsf | 8 | CANCELLED | 0 |
| 123.0 | hptclsf@99 | lsf | 0 | COMPLETED | 0 |

For a completed job handled by LSF, the status of the allocation entry is always CANCELLED. This happens because LSF destroys the allocation that it created for the job after the user job completes. LSF performs the following steps:

• Creates the allocation in SLURM.

• Submits the user job to SLURM.

• Waits for the user job to finish.

• Cancels the allocation in SLURM.
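The allocation entry always ends as CANCELLED, so a script that needs to know whether the user job itself finished normally should read the status of job step 0 instead. The bash function below is a minimal sketch of that check. It assumes that sacct prints whitespace-separated columns in the order shown in the tables above (Jobstep, Jobname, Partition, Ncpus, Status, Error). The function name `user_job_status` is hypothetical. It reads sacct output on standard input, so it can be tried on saved output without a cluster.

```bash
#!/usr/bin/env bash
# user_job_status JOBID  (hypothetical helper, not an HP XC command)
# Prints the Status column of SLURM job step JOBID.0, the entry that
# represents the user job LSF submitted into its allocation.
# Assumes sacct output on stdin with Jobstep in column 1 and Status in column 5.
# Exits 1 if no JOBID.0 entry is present.
user_job_status() {
    local jobid=$1
    awk -v step="${jobid}.0" '
        # Force string comparison: otherwise awk may treat "123" and "123.0"
        # as numerically equal and match the allocation entry as well.
        ($1 "") == (step "") { print $5; found = 1 }
        END { exit (found ? 0 : 1) }
    '
}
```

For the completed job above, `sacct | user_job_status 123` would print COMPLETED, even though the allocation entry 123 shows CANCELLED.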

The example shown above has two entries for a completed job. There are always at least two entries, and the total number depends on how the user job is constructed:

• The first entry represents the allocation created by LSF.

• The second entry, SLURM job step 0, represents the user job that LSF submits to SLURM.

• Further entries represent srun or mpirun commands run by the user job.
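The rules in the list above can be applied mechanically to the Jobstep column. The bash function below is a minimal sketch of that mapping. The function name `describe_step` and its output labels are hypothetical. It assumes Jobstep values of the form `JOBID` or `JOBID.STEP`, as in the sacct output shown earlier.

```bash
#!/usr/bin/env bash
# describe_step JOBSTEP  (hypothetical helper, not an HP XC command)
# Classifies a Jobstep value from sacct for a job run through LSF:
#   "123"    -> the allocation created by LSF
#   "123.0"  -> the user job that LSF submitted to SLURM
#   "123.N"  -> a further srun or mpirun step (N >= 1) run by the user job
describe_step() {
    local jobstep=$1
    case $jobstep in
        *.*)
            local step=${jobstep#*.}   # text after the first dot
            if [ "$step" = "0" ]; then
                echo "user job"
            else
                echo "srun/mpirun step"
            fi
            ;;
        *)
            echo "LSF allocation"
            ;;
    esac
}
```

One possible use, assuming data lines are the ones whose first column starts with a digit: `sacct | awk '$1 ~ /^[0-9]/ { print $1 }' | while read -r s; do printf '%s: %s\n' "$s" "$(describe_step "$s")"; done`.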

10.13 Working Interactively Within an Allocation

The best way to work interactively on HP XC is to separate the allocation from the interactive work. In one terminal, submit your allocation request to LSF with an interactive shell as the job. For example:

$ bsub -I -n4 -ext "SLURM[nodes=4]" /bin/bash

Job <124> is submitted to the default queue <interactive>.

<<Waiting for dispatch...>>

<<Starting on lsfhost.localdomain>>

The bsub command requests 4 nodes and runs /bin/bash on the first allocated node. If resources are not immediately available, the terminal pauses at <<Waiting for dispatch...>>. When <<Starting on lsfhost.localdomain>> is displayed, the resources are allocated and /bin/bash is running.

To gather information about this allocation, run the following command in this first terminal (note that the /bin/bash process does not display a prompt):

$ bjobs -l 124 | grep slurm

date and time stamp:

LSF allocated nodes

Begin your work in another terminal. Use ssh to log in to one of the compute nodes. If you want to run tasks in parallel, use the srun command with the --jobid option set to the SLURM job ID of the allocation:

$ srun --jobid=150 hostname

n1

n2

n3

n4

You can simplify this by first setting the SLURM_JOBID environment variable to the SLURM job ID of the allocation, as follows:
