variable that was set by LSF; this environment variable is equivalent to the number provided by the

Any additional SLURM srun options are job specific, not

The

Example

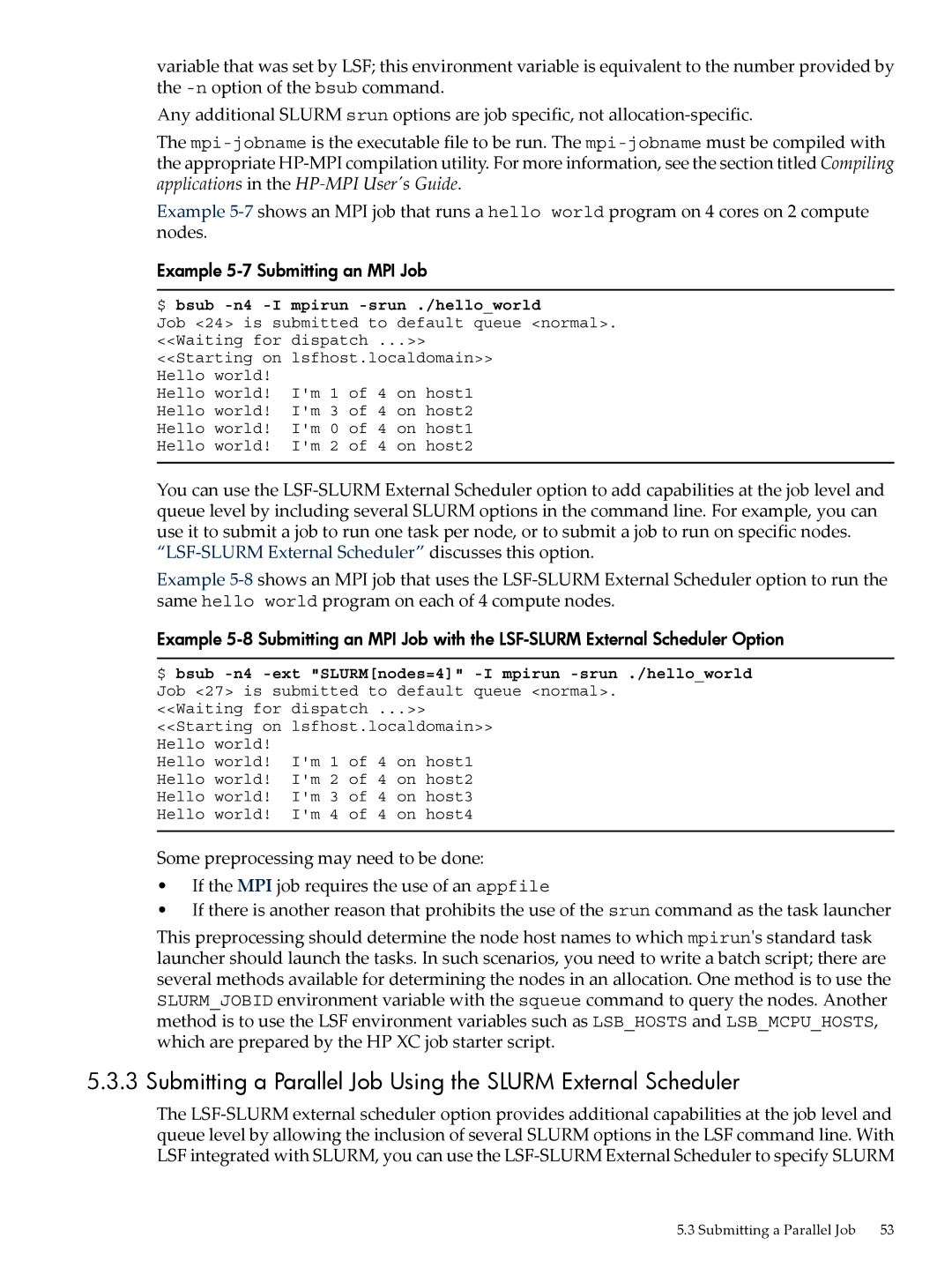

Example 5-7 Submitting an MPI Job

$ bsub -n4 -I mpirun -srun ./hello_world

Job <24> is submitted to default queue <normal>.

<<Waiting for dispatch ...>>

<<Starting on lsfhost.localdomain>>

Hello world!

Hello world! I'm 1 of 4 on host1

Hello world! I'm 3 of 4 on host2

Hello world! I'm 0 of 4 on host1

Hello world! I'm 2 of 4 on host2

You can use the

“LSF-SLURM External Scheduler” discusses this option.

Example

Example

$ bsub

<<Waiting for dispatch ...>>

<<Starting on lsfhost.localdomain>>

Hello world!

Hello world! I'm 1 of 4 on host1

Hello world! I'm 2 of 4 on host2

Hello world! I'm 3 of 4 on host3

Hello world! I'm 4 of 4 on host4

Some preprocessing may be needed in the following cases:

• If the MPI job requires the use of an appfile

• If there is another reason that prohibits the use of the srun command as the task launcher

This preprocessing should determine the host names of the nodes on which mpirun's standard task launcher is to start the tasks. In such scenarios, you need to write a batch script. Several methods are available for determining the nodes in an allocation. One method is to use the SLURM_JOBID environment variable with the squeue command to query the nodes. Another method is to use LSF environment variables such as LSB_HOSTS and LSB_MCPU_HOSTS, which are prepared by the HP XC job starter script.
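The following batch script fragment is a minimal sketch of both methods, not a definitive implementation. It assumes that LSB_HOSTS holds one host name per allocated slot and that LSB_MCPU_HOSTS holds alternating host names and slot counts. The function names mcpu_hosts_to_lines and unique_hosts are hypothetical helpers introduced for illustration. The squeue call runs only when SLURM_JOBID is set and the command is available, and the node list it prints may be in a compressed form.

```shell
#!/bin/sh
# Sketch: determine the nodes in an allocation from inside a batch script.
# Assumption: LSB_MCPU_HOSTS looks like "host1 2 host2 2" and
# LSB_HOSTS looks like "host1 host1 host2 host2".

# Print one "host slots" pair per line from LSB_MCPU_HOSTS.
mcpu_hosts_to_lines() {
    # Unquoted on purpose: split the value on whitespace.
    set -- $LSB_MCPU_HOSTS
    while [ "$#" -ge 2 ]; do
        printf '%s %s\n' "$1" "$2"
        shift 2
    done
}

# Print each distinct host name in LSB_HOSTS once, sorted.
unique_hosts() {
    for host in $LSB_HOSTS; do
        printf '%s\n' "$host"
    done | sort -u
}

# Method 1: ask SLURM for the node list of this job.
if [ -n "${SLURM_JOBID:-}" ] && command -v squeue >/dev/null 2>&1; then
    squeue --noheader --jobs "$SLURM_JOBID" --format "%N"
fi

# Method 2: use the variables prepared by the job starter script.
if [ -n "${LSB_MCPU_HOSTS:-}" ]; then
    mcpu_hosts_to_lines
fi
```

The per-host lines printed by mcpu_hosts_to_lines can serve as the starting point for generating an appfile or a host list for mpirun's standard task launcher.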

5.3.3 Submitting a Parallel Job Using the SLURM External Scheduler

The
