options that specify the minimum number of nodes required for the job, specific nodes for the job, and so on.

Note:

The SLURM external scheduler is a

The format of this option is shown here:

The bsub command format to submit a parallel job to an LSF allocation of compute nodes using the external scheduler option is as follows:

bsub

] [jobname]

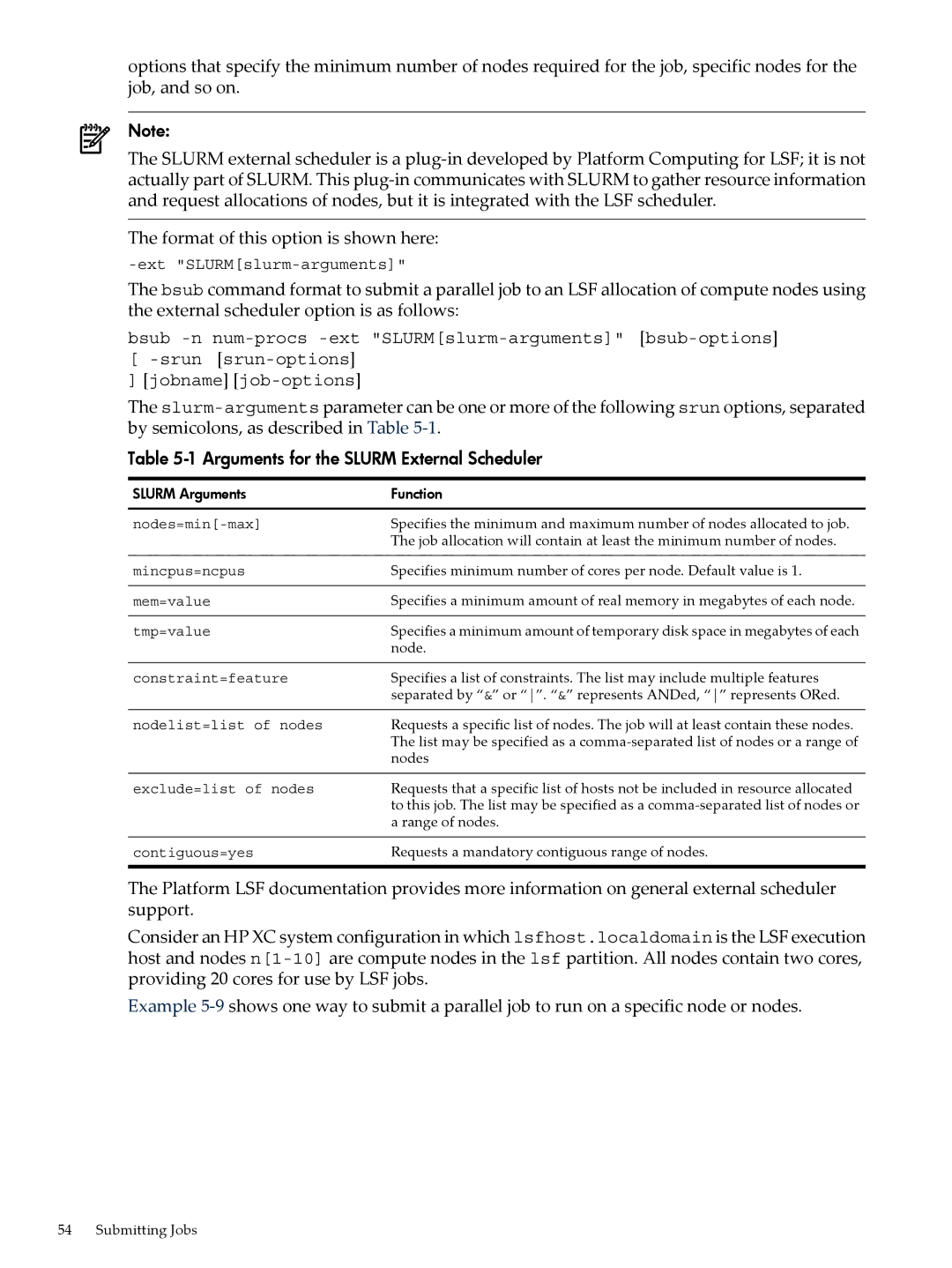

The

Table 5-1 Arguments for the SLURM External Scheduler

| SLURM Arguments | Function |
|---|---|
| | Specifies the minimum and maximum number of nodes allocated to the job. The job allocation will contain at least the minimum number of nodes. |
| mincpus=ncpus | Specifies the minimum number of cores per node. The default value is 1. |
| mem=value | Specifies the minimum amount of real memory, in megabytes, on each node. |
| tmp=value | Specifies the minimum amount of temporary disk space, in megabytes, on each node. |
| constraint=feature | Specifies a list of constraints. The list may include multiple features separated by “&” or “\|”. “&” means the features are ANDed; “\|” means they are ORed. |
| nodelist=list of nodes | Requests a specific list of nodes. The job will contain at least these nodes. The list may be specified as a [...] nodes |
| exclude=list of nodes | Requests that a specific list of hosts not be included in the resources allocated to this job. The list may be specified as a [...] a range of nodes. |
| contiguous=yes | Requests a mandatory contiguous range of nodes. |

The Platform LSF documentation provides more information on general external scheduler support.
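As an illustration of how the arguments in Table 5-1 combine, the following Python sketch assembles an external scheduler option string and an illustrative `bsub` command line. It rests on several assumptions: that the option takes the form `SLURM[name=value;name=value]` with semicolons between arguments, that it is passed to `bsub` with `-ext`, and that the job is `srun hostname`. The helper name `build_ext_option` and the core count of 4 are hypothetical. The argument for the first table row is not legible in this copy, so it is left out of the accepted names.

```python
import shlex

# Argument names legible in Table 5-1. The node-count argument in the
# first row is unreadable in this copy and is deliberately omitted.
TABLE_5_1_ARGS = {
    "mincpus", "mem", "tmp", "constraint",
    "nodelist", "exclude", "contiguous",
}


def build_ext_option(args):
    """Return an option string of the assumed form SLURM[a=b;c=d]."""
    unknown = set(args) - TABLE_5_1_ARGS
    if unknown:
        raise ValueError(f"not listed in Table 5-1: {sorted(unknown)}")
    # Dictionaries preserve insertion order, so arguments appear as given.
    body = ";".join(f"{name}={value}" for name, value in args.items())
    return f"SLURM[{body}]"


# At least 2 cores and 1024 MB of memory per node, on contiguous nodes.
option = build_ext_option({"mincpus": 2, "mem": 1024, "contiguous": "yes"})

# Hypothetical command line: 4 cores requested, running `srun hostname`.
command = ["bsub", "-n", "4", "-ext", option, "srun", "hostname"]

print(option)
print(shlex.join(command))  # shell-quoted form, safe to paste into a shell
```

The sketch only builds and prints the command; it does not submit anything. Quoting the option through `shlex.join` matters because the square brackets and semicolons would otherwise be interpreted by the shell.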

Consider an HP XC system configuration in which lsfhost.localdomain is the LSF execution host and nodes

Example
