Agilent 34401A 6½ Digit Multimeter

CAUTION WARNING

Safety Information

Protection Limits

Additional Notices

Declaration of Conformity

Flexible System Features

Convenient Bench-Top Features

Menu Operation keys

Front Panel at a Glance

TRIGger Menu

Front-Panel Menu at a Glance

SYStem Menu

Input / Output Menu

Key as you

Adrs Rmt Man Trig Hold Mem Ratio Math Error Rear Shift 4W

Display Annunciators

Use the front-panel Input / Output Menu to

Rear Panel at a Glance

This Book

Contents

Application Programs

Error Messages

Remote Interface Reference

Index

Declaration of Conformity

Specifications

Measurement Tutorial

Quick Start

To Prepare the Multimeter for Use

Connect the power cord and turn on the multimeter

Check the list of supplied items

Verify the power-line voltage setting

Verify that there is ac power to the multimeter

Verify that the power-line fuse is good

If the Multimeter Does Not Turn On

Replace the fuse-holder assembly in the rear panel

Bench-top viewing positions Carrying position

To Adjust the Carrying Handle

To Measure Voltage

To Measure Resistance

To Measure Current

To Measure Frequency or Period

To Test Continuity

To Check Diodes

To Select a Range

To Set the Resolution

Front-Panel Display Formats

To Rack Mount the Multimeter

Front-Panel Menu Operation

Front-Panel Menu Reference

Secured

Front-Panel Menu Reference SYStem Menu

Front-Panel Menu Tutorial

Menu Bottom You pressed

Messages Displayed During Menu Use

Move across to the SYS Menu choice on this level

Turn on the menu

Save the change and turn off the menu

Move across to the Beep command on the commands level

Move down a level to the Beep parameter choices

Move across to the on choice

Use menu recall to return to the Beep command

Move down to the Beep parameter choices

Math Menu

Increment the first digit until 2 is displayed

Move down to edit the Null Value parameter

Make the number negative

Increase the displayed number by a factor

To Turn Off the Comma Separator

Move down a level and then across to the Comma command

Move across to the SYS Menu choice on the menus level

Result = reading - null value

To Make Null Relative Measurements

To Store Minimum and Maximum Readings

dB = reading in dBm - relative value in dBm

To Make dB Measurements

$\mathrm{dBm} = 10 \cdot \log_{10}\left( \text{reading}^2 \,/\, \text{reference resistance} \,/\, 1\ \text{mW} \right)$

To Make dBm Measurements

To Trigger the Multimeter

To Use Reading Hold

To Make dcv:dcv Ratio Measurements

Move down to the parameter level

Move down a level and then across to the Ratio Func command

Meas Menu

Move down a level to the Rdgs Store command

Select the single trigger mode

To Use Reading Memory

This takes you to the Saved Rdgs command in the SYS Menu

Turn off the menu

Move down a level to view the first stored reading

Move across to view the two remaining stored readings

Features and Functions

Math Operations, Triggering

AC Signal Filter

Measurement Configuration

Applies to ac voltage and ac current measurements only

See also "To Test Continuity"

Continuity Threshold Resistance

Input R Meas Menu

DC Input Resistance

Resolution Choices Integration Time

Resolution

This is the 10 Vdc range, 5½ digits are displayed

See also "To Set the Resolution"

Resolution setting. The default mode is 5 digits slow

Remote Interface Operation You can set the resolution using

Following commands

Integration Time

Integration Time Resolution

Default 5½ digits, or 1 second 6½ digits

Front / Rear Input Terminal Switching

Remote Interface Operation

Returns "FRON" or "REAR"

Autozero

Resolution Choices Integration Time Autozero

Front-panel settings

Measurement Configuration Autozero

Ranging

Autoranging or manual ranging. For frequency and period

Remote Interface Operation You can set the range using any

Input voltage, not its frequency

See also "To Select a Range"

Freq Per Cont Diode Ratio Null Min-Max DBm Limit

Math Operations

See also "To Store Minimum and Maximum Readings"

Min-Max Operation

MIN-MAX MATH Menu

Null Relative Operation

See also "To Make Null Relative Measurements"

Math Operations Null Relative

Entering a null value also turns on the null function

See also "To Make dB Measurements"

dB Measurements

dB REL Math Menu

See also "To Make dBm Measurements"

dBm Measurements

dBm REF R Math Menu

Enable or disable limit test

Limit Testing

Set the upper limit

Set the lower limit

More information

Limit testing

Reading that exceeds the upper or lower limit

RS-232 interface circuitry may be damaged

Triggering

Trigger

State

Wait-for

Front-panel

Key is disabled when in remote

Trigger Source Choices

See also "External Trigger Terminal"

Key is disabled when in remote Remote Interface Operation

TRIGger:SOURce IMMediate

Halting a Measurement in Progress

Wait-for-Trigger State

Front-Panel Operation

Number of Samples

Number of Triggers

Trigger Delay

Auto Delay

Zero Delay

Resistance 2-wire and 4-wire

Automatic Trigger Delays

DC Voltage and DC Current for all ranges

AC Voltage and AC Current for all ranges

See also "To Use Reading Hold"

Reading Hold

Read Hold Trig Menu

External Trigger Terminal

Voltmeter Complete Terminal

Reading Memory

System-Related Operations

SYS Menu

Error SYS Menu

Error Conditions

Returns 0 if the self-test is successful, or 1 if it fails

Self-Test

Test SYS Menu

Display Control

Disable/enable the display

Display SYS Menu

Display the string enclosed in quotes

Disable/enable beeper state

Issue a single beep immediately

Beeper Control

Beep SYS Menu

Firmware Revision Query

Comma Separators

Comma SYS Menu

See also "To Turn Off the Comma Separator"

You cannot query the SCPI version from the front panel

SCPI Language Version Query

Remote Interface Configuration

GPIB Address

GPIB address can be set only from the front-panel

Remote interface can be set only from the front-panel

Remote Interface Selection

See also "To Select the Remote Interface"

GPIB RS-232

Parity I/O Menu

Baud Rate Selection RS-232

Parity Selection RS-232

Language I/O Menu

Programming Language Selection

Calibration Security

Calibration Overview

Characters

Secured CAL Menu

Unsecured CAL Menu

Enter new code

Calibration Count

Unsecure with old code

Calibration Message

To Replace the Power-Line Fuse

Operator Maintenance

To Replace the Current Input Fuses

Power-On and Reset State

Remote Interface Reference

Command Summary

CALCulate

See page 124 for more information

See page 132 for more information

See page 127 for more information

Command MEASure? and CONFigure Setting

MEASure? and CONFigure Preset States

Simplified Programming Overview

Using the CONFigure Command

Using the MEASure? Command

You must specify a range to use the resolution parameter

Using the range and resolution Parameters

Using the READ? Command

To avoid this, do not send a query command without reading

Using the INITiate and FETCh? Commands

Before sending the second query command

MEASure?

CONFigure

MEASure? and CONFigure Commands

MEASure:DIODe?

MEASure:CONTinuity?

CONFigure:CURRent:DC {<range>|MIN|MAX|DEF},{<resolution>|MIN|MAX|DEF}

CONFigure?

CONFigure:CONTinuity

CONFigure:DIODe

Measurement Configuration Commands

<function>:RESolution? [MINimum|MAXimum]

<function>:RESolution {<resolution>|MINimum|MAXimum}

FREQuency:APERture? [MINimum|MAXimum]

PERiod:APERture? [MINimum|MAXimum]

[SENSe:]DETector:BANDwidth? [MINimum|MAXimum]

[SENSe:]DETector:BANDwidth {3|20|200|MINimum|MAXimum}

[SENSe:]ZERO:AUTO {OFF|ONCE|ON}

[SENSe:]ZERO:AUTO?

Math Operation Commands

CALCulate:AVERage:MAXimum?

CALCulate:AVERage:MINimum?

CALCulate:AVERage:AVERage?

CALCulate:AVERage:COUNt?

DATA:FEED?

See also Triggering, starting on page 71 in chapter

READ?

Triggering Commands

TRIGger:DELay? [MINimum|MAXimum]

TRIGger:DELay {<seconds>|MINimum|MAXimum}

TRIGger:DELay:AUTO {OFF|ON}

TRIGger:DELay:AUTO?

System-Related Commands

*IDN?

*RST

*TST?

What is an Enable Register?

What is an Event Register?

SCPI Status Model

SCPI Status System

Bit Decimal Definition Value

Status Byte

Bit Definitions Status Byte Register

Caution

Using Service Request SRQ and Serial Poll

To Determine When a Command Sequence is Completed

Using *STB? to Read the Status Byte

To Interrupt Your Bus Controller Using SRQ

Using *OPC to Signal When Data is in the Output Buffer

How to Use the Message Available Bit MAV

Bit Definitions Standard Event Register

Standard Event Register

Bit Definitions Questionable Data Register

Questionable Data Register

Status Reporting Commands

*CLS

*ESE?

*OPC

*ESR?

*OPC?

*PSC?

Calibration Commands

CALibration:STRing?

CALibration:STRing <quoted string>

CALibration:VALue <value>

CALibration:VALue?

RS-232 Configuration Overview

RS-232 Interface Configuration

Even / 7 data bits, factory setting or Odd / 7 data bits

Connection to a Computer or Terminal

RS-232 Data Frame Format

Multimeter sets the DTR line False in the following cases

DTR / DSR Handshake Protocol

RS-232 Troubleshooting

RS-232 Interface Commands

SENSe

An Introduction to the SCPI Language

Command Format Used in This Manual

Using the MIN and MAX Parameters

Command Separators

SCPI Command Terminators

Querying Parameter Settings

SCPI Parameter Types

IEEE-488.2 Common Commands

Type of Output Data Output Data Format

Output Data Formats

Talk only for Printers

Using Device Clear to Halt Measurements

To Set the GPIB Address

Move down a level to the HP-IB Addr command

Turn on the front-panel menu

To Select the Remote Interface

Move down a level and then across to the Interface command

Move down to the parameter level to select the interface

To Set the Baud Rate

Move down a level and then across to the Baud Rate command

Move down to the parameter level to select the baud rate

To Set the Parity

Move down a level and then across to the Parity command

Move down to the parameter level to select the parity

To Select the Programming Language

Move down a level and then across to the Language command

I/O Menu

Alternate Programming Language Compatibility

Agilent 3478A Language Setting

Fluke 8840A Description Agilent 34401A Action Command

Fluke 8840A/8842A Language Setting

SCPI Compliance Information

IEEE-488.2 Common Commands

Dedicated Hardware Lines Addressed Commands

IEEE-488 Compliance Information

Error Messages

Execution Errors

113

Execution Errors 112

121

123

Invalid string data

Execution Errors 151

158

String data not allowed

222

Execution Errors 221

223

224

410

Execution Errors 350

420

430

502

Execution Errors 501

511

512

601

Self-Test Errors

602

603

Calibration Errors

704

Calibration Errors 703

705

706

730

Calibration Errors 725

731

732

Application Programs

Using MEASure? for a Single Measurement

GPIB Operation Using BASIC

GPIB Operation Using QuickBASIC

Using CONFigure with a Math Operation

On next

Using the Status Registers

END While

Using the Status Registers GPIB Operation Using BASIC

Using the Status Registers GPIB Operation Using QuickBASIC

RS-232 Operation Using QuickBASIC

RS-232 Operation Using Turbo C

Measurement Tutorial

Copper-to- Approx. µV / °C

Thermal EMF Errors

Loading Errors dc volts

Leakage Current Errors

Rejecting Power-Line Noise Voltages

Digits NPLCs Integration Time

60 Hz 50 Hz

Noise Caused by Magnetic Loops

Common Mode Rejection CMR

Noise Caused by Ground Loops

Resistance Measurements

4-Wire Ohms Measurements

Power Dissipation Effects

Removing Test Lead Resistance Errors

Settling Time Effects

Errors in High Resistance Measurements

DC Current Measurement Errors

True RMS AC Measurements

$\text{ac+dc} = \sqrt{\text{ac}^2 + \text{dc}^2}$

Crest Factor Errors non-sinusoidal inputs

Bandwidth Error = C.F x F

Loading Errors ac volts

Additional error for high frequencies

For low frequencies

Temperature Coefficient and Overload Errors

High-Voltage Self-Heating Errors

Measurements Below Full Scale

Low-Level Measurement Errors

$\text{Voltage Measured} = \sqrt{V_{in}^2 + \text{Noise}^2}$

Common Mode Errors

AC Current Measurement Errors

Making High-Speed DC and Resistance Measurements

Frequency and Period Measurement Errors

Making High-Speed AC Measurements

Specifications

DC Characteristics

Operating Characteristics

DC Characteristics Measuring Characteristics

AC Characteristics

AC Characteristics Measuring Characteristics

Frequency and Period Characteristics

Measurement Considerations

Frequency and Period

General Information

Product Dimensions

Range Input Level Reading Error

To Calculate Total Measurement Error

ppm of input error =

Input error =

Range Input Level Range Error

100

Interpreting Multimeter Specifications

Number of Digits and Overrange

Sensitivity

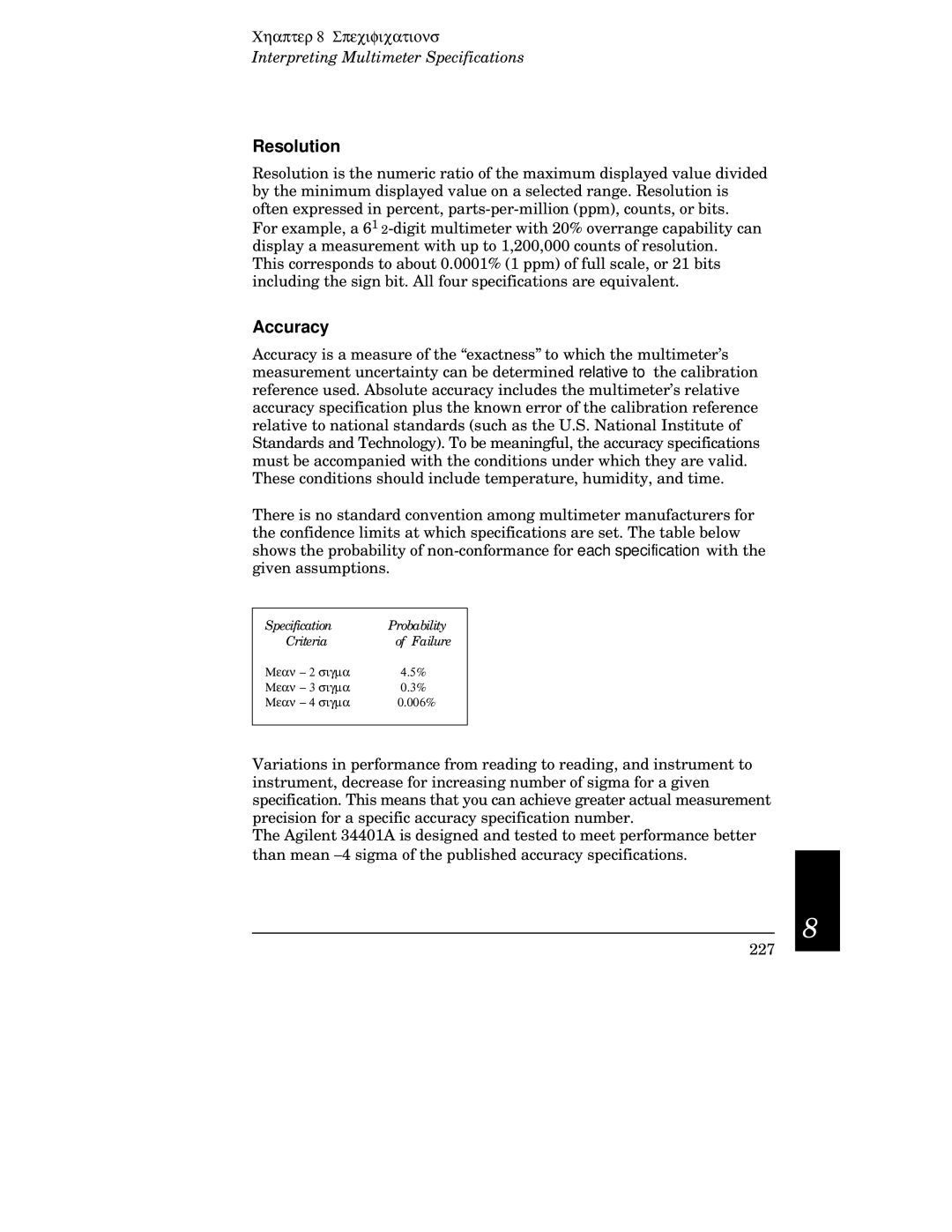

Accuracy

Specification Probability Criteria Failure

Transfer Accuracy

Temperature Coefficients

24-Hour Accuracy

90-Day and 1-Year Accuracy

Configuring for Highest Accuracy Measurements

Ext Trig, 5 VM Comp, 5

Index
