Load Balancing

One factor that can cause services to become unavailable is server overload. A server has finite resources and can handle only a limited number of requests at the same time. An overloaded server slows down and can eventually crash.

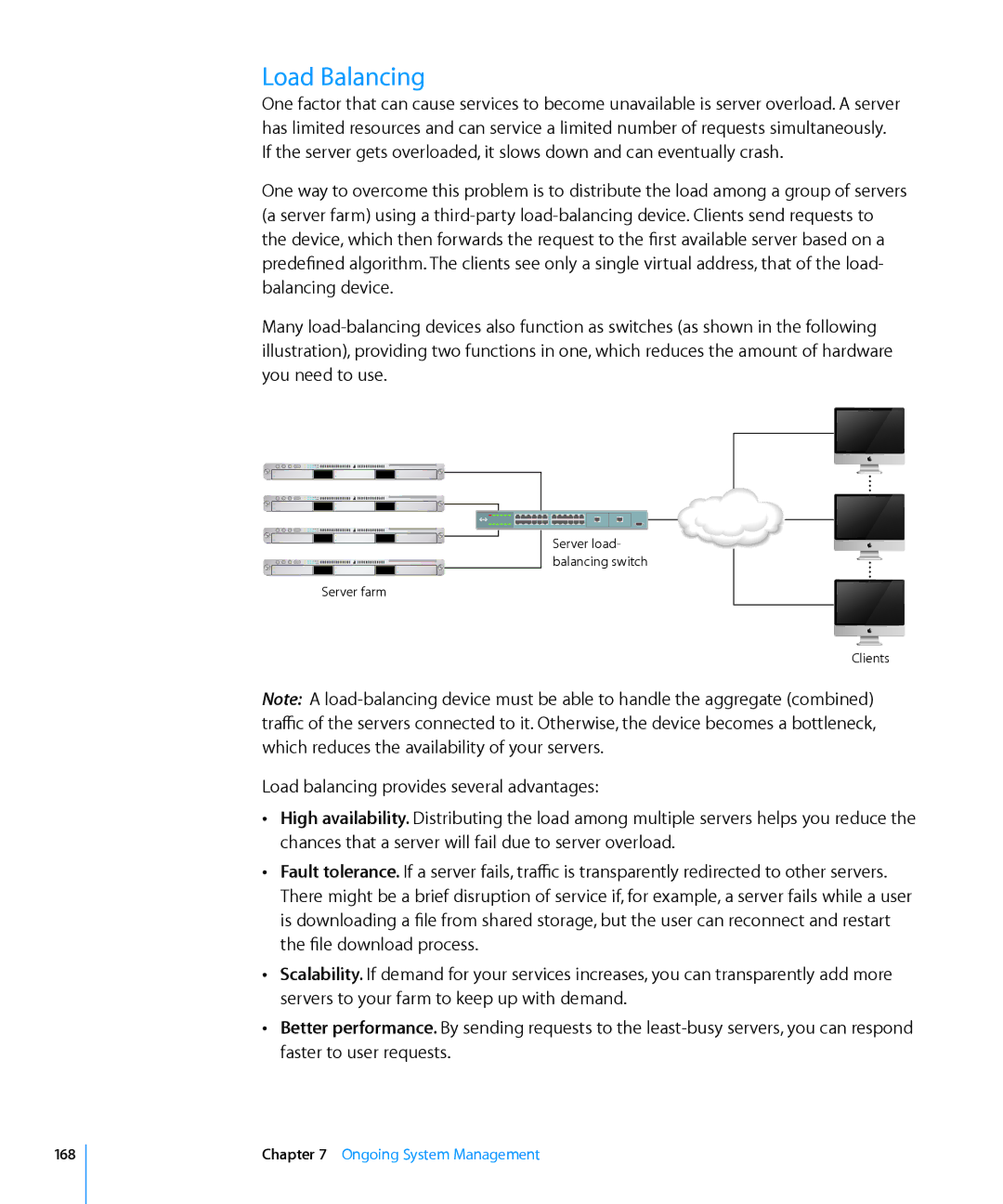

One way to overcome this problem is to distribute the load among a group of servers (a server farm) using a server load-balancing switch.

[Figure: Clients connect through a server load-balancing switch to a server farm]
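The idea of spreading requests across a server farm can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not a description of how any particular load-balancing switch works: it assumes a simple round-robin policy, and the class `RoundRobinBalancer`, its methods, and the server names are invented for the example. Servers marked as down are skipped, which mirrors how a balancer redirects traffic away from a failed server.

```python
class RoundRobinBalancer:
    """Hypothetical round-robin dispatcher for a server farm (illustration only)."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("server farm must contain at least one server")
        self.servers = list(servers)   # rotation order
        self.healthy = set(self.servers)
        self._next = 0                 # index of the next server to try

    def mark_down(self, server):
        # Assumed failed health check: take the server out of rotation.
        self.healthy.discard(server)

    def mark_up(self, server):
        # A recovered server rejoins; an unknown server is added to the farm.
        if server not in self.servers:
            self.servers.append(server)
        self.healthy.add(server)

    def pick(self):
        # Walk the rotation at most once, skipping servers marked down.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["web1", "web2", "web3"])
    print([lb.pick() for _ in range(4)])  # ['web1', 'web2', 'web3', 'web1']
    lb.mark_down("web2")                  # simulate a server failure
    print([lb.pick() for _ in range(3)])  # ['web3', 'web1', 'web3']
```

Round-robin is only one possible policy; a balancer may instead weight servers by capacity or send each new request to the server with the fewest active connections.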

Note: A

Load balancing provides several advantages:

- High availability. Distributing the load among multiple servers reduces the chance that any single server fails because of overload.

- Fault tolerance. If a server fails, traffic is transparently redirected to the remaining servers. There might be a brief disruption of service if, for example, a server fails while a user is downloading a file from shared storage, but the user can reconnect and restart the download.

- Scalability. If demand for your services increases, you can transparently add servers to the farm to keep pace.

- Better performance. By sending requests to the


Chapter 7 Ongoing System Management