Agilent Model 54835A/45A/46A Oscilloscopes

Trigger setup controls set mode and basic parameters

Service Policy

Ease of use with high performance

Display shows waveforms and graphical user interface

Power

Measurements

This Book

Contents

No Display Trouble Isolation

To check the keyboard

To check the LEDs

To remove and replace the backlight inverter board

Replaceable Parts

To remove and replace the floppy disk drive

General Information

Model Description

Instruments covered by this service guide

Oscilloscopes Covered by this Service Guide

Accessories supplied

Option Number Description

Options available

Accessories available

Required when using 2 or more Agilent 1144A active probes

Agilent 1144A MHz Active Probe

Agilent

Acquisition

Specifications & characteristics

Vertical

Horizontal

Offset Accuracy

Whichever is larger

Post-trigger

Trigger

Display

Measurements

Computer System/Storage

Rise time figures are calculated from tr = 0.35/bandwidth
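
As a rough illustration of the rise-time relation above, the sketch below evaluates tr = 0.35/bandwidth. The function name and the example bandwidths are illustrative assumptions, not values taken from the specification tables.

```python
def rise_time_seconds(bandwidth_hz: float) -> float:
    """Return the calculated rise time, tr = 0.35 / bandwidth (bandwidth in Hz)."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return 0.35 / bandwidth_hz


if __name__ == "__main__":
    # Hypothetical example bandwidths, chosen only to show the arithmetic.
    for bw_hz in (500e6, 1e9):
        tr_ps = rise_time_seconds(bw_hz) * 1e12  # convert seconds to picoseconds
        print(f"{bw_hz / 1e6:.0f} MHz -> {tr_ps:.0f} ps")
```

Because the relation is a simple reciprocal, halving the bandwidth doubles the calculated rise time.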

CAT I and CAT II Definitions

Video Output

General Characteristics

Agilent Technologies 54835A/45A/46A general characteristics

Agilent Service software No substitution

Recommended test equipment

Agilent EPM Sensor 441A/Agilent 8482A

Preparing for Use

To inspect the instrument

Three-wire Power Cable is Provided

To connect power

To connect the mouse or other pointing device

To attach the optional trackball

To connect the keyboard

To connect to the LAN card

To connect oscilloscope probes

To connect a printer

To connect an external monitor

To connect the GPIB cable

To tilt the oscilloscope upward for easier viewing

You Can Configure the Backlight Saver

To power on the oscilloscope

Press the Default Setup key on the front panel

Graphical Interface Enable Button

To verify basic oscilloscope operation

Press the Autoscale key on the front panel

To clean the instrument

To clean the display monitor contrast filter

Testing Performance

Test Interval Dependencies

See Also

Averaging

Let the Instrument Warm Up Before Testing

Clear Display

Enable the graphical interface

Procedure

To test the dc calibrator

Connect the multimeter to the front panel Aux Out BNC

Selecting DC in the Calibration Dialog

If the test fails

Set Aux Output to DC

Set the output voltage

Input Resistance Equipment Setup

To test input resistance

25% accuracy Cables

Specification

To test voltage measurement accuracy

Connect the equipment

Use the following table for steps 8 through

Voltage Measurement Accuracy Equipment Setup

Acquisition Setup for Voltage Accuracy Measurement

Source Selection for Vavg Measurement

Scale Offset Supply Tolerance Limits

Select Channel 1 as the source for the Vavg measurement

To Set Vertical Scale and Position

Set the scale from the table

Set the offset from the table

To test offset accuracy

Use the following table for steps 6 through

Volts/div Position Supply Tolerance Limits

Specification Equivalent Time

To test bandwidth

Real Time

Equivalent Time Test

Displayed. Note the Vamptd1 reading

Calculate the response using the formula

Select Vamptd from the Voltage submenu of the Measure menu

Real Time Test

MΩ, 500 MHz Test on 54835A, 54845A, and 54846A

Equivalent Time Mode Procedure

To test time measurement accuracy

Equivalent Time ≥16 averages

Horizontal Setup for Equivalent Time Procedure

Waveform for Time Measurement Accuracy Check

Acquisition Setup for Equivalent Time Procedure

Source Selection for Delta Time Measurement

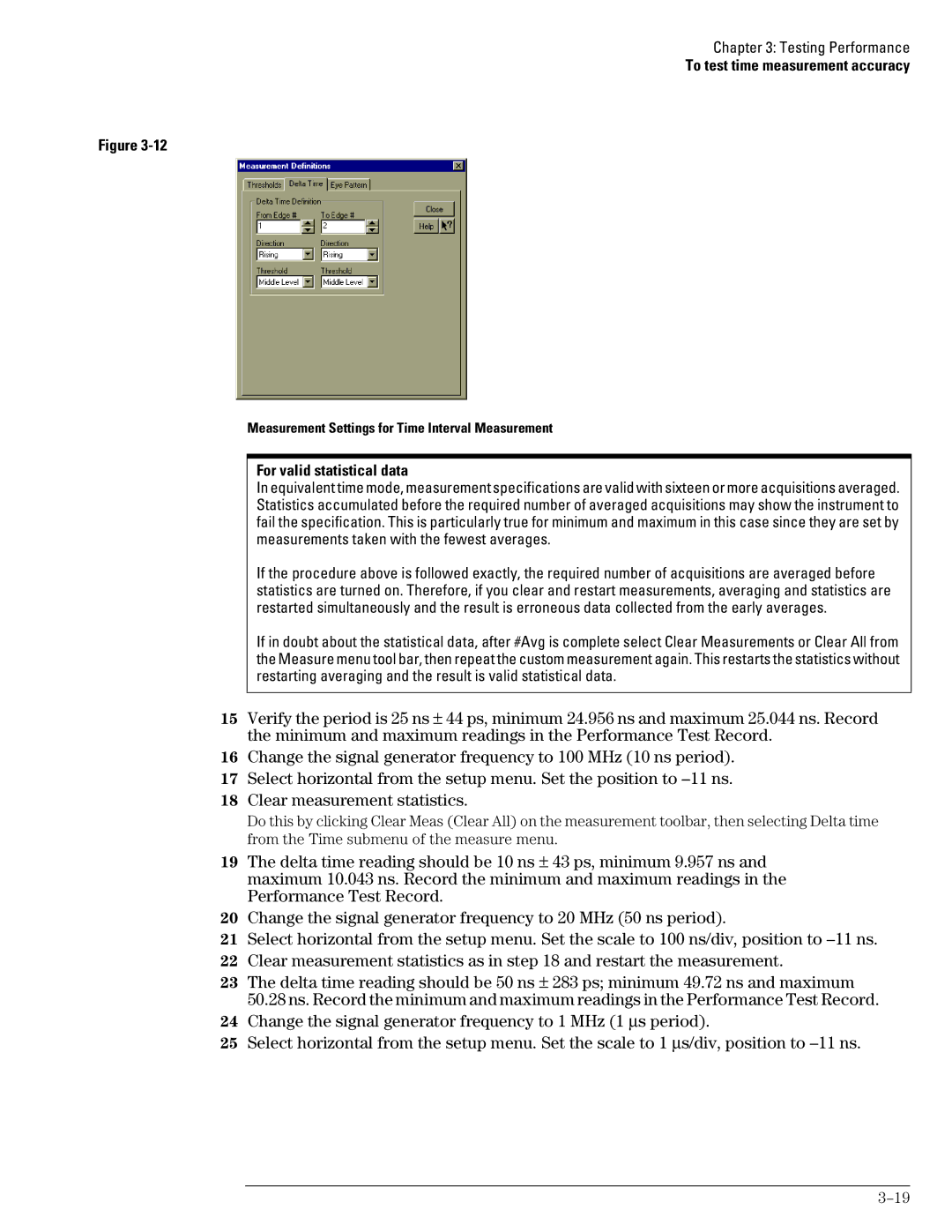

Measurement Settings for Time Interval Measurement

For valid statistical data

New Measurement Settings for Delta Time Measurement

Real-Time Mode Procedure

Measurement Definitions for Real Mode Procedure

Click Close

Acquisition Setup for Trigger Sensitivity Test

To test trigger sensitivity

Set horizontal time/div to 5 ns/div

Setting the Markers for a 0.5 Division Reference

Turn on Channel 1 and turn off all other channels

Procedure-Auxiliary Trigger Test

If a test fails

Agilent Technologies 54835A/45A/46A Oscilloscope

Test Limits Results Vmax Vmin/5 =

Amplitude Input Channel Chan 4/Ext Resistance

Voltage Scale Coupling Supply Limits Channel

Realtime Channel 4 GSa/s

Channel 8 GSa/s

Channel 2 GSa/s

∆Time edge#101 Limits

Calibrating and Adjusting

Let the Oscilloscope Warm Up Before Adjusting

Loading New Software

To check the power supply

Disconnect the oscilloscope power cord and remove the cover

Equipment Critical Specifications Recommended Model/Part

Power Supply Voltage Limits

Supply Voltage Specification Limits

Equipment Required

Flat-Panel Display Specifications

When to Use this Procedure

To check the flat-panel display (FPD)

Click Start

Starting the Screen Test

Repeat steps 6 and 7 for all colors

Screen Test

To run the self calibration

Self calibration

Calibration time

Click Start, then follow the instructions on the screen

Clear Cal Memory Protect to Run self calibration

If calibration fails

Click here to start calibration

Troubleshooting

To install the fan safety shield

Disconnect the instrument power cord and remove the cover

Installing the Fan Safety Shield

To troubleshoot the instrument

Primary Trouble Isolation Flowchart

Primary Trouble Isolation

Perform power-up

Power-on Display Default Graphical Interface Disabled

Check the display

Run the Knob and Key selftest and the LED selftest

Run oscilloscope self-tests

Check the front panel

No Display Trouble Isolation Flowchart

Remove the cabinet and install the fan safety shield

No Display Trouble Isolation

Check the fan connections

Sequence

Power-on

Power supply indicator Isolation flowchart

Completed its power-on test LEDs do not come on,

Configure the motherboard

Power to the instrument.

Solenoids are preset Normal operating pattern

Not, the problem may be with

Board video signals

Power Supply Trouble Isolation Flowchart

Power Supply Trouble Isolation

Check the power supply voltages

Approximate Resistance Values, Each Power Supply to Ground

Replace any shorted assembly

Override the Remote Inhibit signal

Power Supply Distribution

Power-up Sense Resistor Connection

Replace the power supply

Routing of +15 V Bias and Remote Inhibit Signals

Check +15 V Bias and Remote Inhibit cabling

Check for the oscilloscope display onscreen

Pin Supply +3V Offset Data Probe ID Ring Clk +12

To check probe power outputs

When you are finished, click Close

Test Procedure

To check the keyboard

Troubleshooting Procedure

Re-assemble the instrument

To check the LEDs

Test by Rows

LED Test Screen

When you are finished, click Close

Messages Vary Slightly

To check the motherboard, CPU, and RAM

To check the SVGA display board video signals

Signal

Video Signals

To check the backlight inverter voltages

Backlight Inverter Board Input Voltages Input Pin #

Backlight OFF Backlight ON

POST Code Listing (AMI Motherboard only)

POST Code Listing

Checkpoint Code Description

Keyboard BAT command is issued

Unblocking command

Color settings next

Next

Clearing the memory below 1 MB for the soft reset next

2Eh

Display memory read/write test next

2Fh

Keyboard reset command next

Command next

WINBIOS Setup next

After WINBIOS Setup next

9Bh

Coprocessor test next

9Ch

9Dh

Motherboard / floppy drive configurations BIOS setup

To configure the motherboard jumpers and set up the BIOS

Agilent Technologies Service Note Describes Upgrade Details

Configuring the AMI Series 727 Motherboard Jumpers

J46

J37, J39

Series 727 Motherboard Jumpers

J25J24 J37

MHz Clock Is Required

J2J21 J11 J12

Press F1 to run the WINBIOS setup

To configure the AMI Series 727 WINBIOS Parameters

Do the following within the setup program

WINBIOS Keyboard Commands

System Keyboard Absent

Do steps h-l only if your system has 64 MB RAM

Exit the Setup program, saving the changes you made

Configuration Item Setting

Load Optimal Defaults

Primary

Configuring the AMI Series 757 Motherboard for 200 MHz CPU

Series 757 Rev C Motherboard Jumpers

Pin

Do Not Press F2

When to Do this Procedure

Series 757 System BIOS Settings with 1.44 Mbyte floppy drive

Configuring the AMI Series 757 Motherboard for 300 MHz CPU

Series 757 Rev D Motherboard Jumpers

J14 J16

AMI Atlas-III PCI/ISA

Do the following within the setup program

Series 757 System BIOS Settings with 120 Mbyte floppy drive

FIC VA-503A Motherboard

Core S1-1 S1-2 S1-3 S1-4

Off

Ratio S2-1 S2-2

Under the Power Management Setup, change these settings

Under the PnP/PCI Configuration, change these settings

Under Integrated Peripherals, change these settings

Isolating Acquisition Problems

To troubleshoot the acquisition system

Acquisition Trouble Isolation 1

Acquisition Trouble Isolation 2

Acquisition Trouble Isolation 3

Acquisition Trouble Isolation 4

A to D Converter failed

Return the Known Good Attenuator to Original Channel

Temperature Sense failed

FISO failed

Probe Board failed

Offset DAC failed

Scroll Mode Counter failed

Timer failed

Interpolator failed

Timebase failed

Attenuator Click Test

To troubleshoot attenuator failures

Attenuator Connectivity Test

Repeat steps 1 through 3 for the remaining input channels

Swapping Attenuators

Attenuator Assembly Troubleshooting

Enable the Graphical Interface

Software Revisions

Select About Infiniium... from the Help Menu

Replacing Assemblies

Remove all accessories from the instrument

To return the instrument to Agilent Technologies for service

Cover Fasteners

To remove and replace the cover

To disconnect and connect Mylar flex cables

To disconnect the cable

To reconnect the cable

To remove and replace the AutoProbe assembly

Disconnect the power cable and remove the cover

Disconnecting W13

Avoid Damage to the Ribbon Cable and Faceplate

Pushing Out the AutoProbe Faceplate

To remove and replace the probe power and control assembly

Removing the Probe Power and Control Assembly

Avoid Interference with the Fan

To remove and replace the front panel assembly

To remove and replace the backlight inverter board

Removing the Backlight Inverter Board

Removing the BNC Nuts

SMB Cable W18 and Comp Wire W19

Disconnecting W16, W20, and the Backlight Cables

Front Panel Side Screws

Keep Long Screws Separate for Re-assembly

Front Panel Cover Plate Screws

Removing the Cursor Keyboard

Removing the Backlights

Removing the Acquisition Assembly

To remove and replace the acquisition board assembly

To replace the board, reverse the removal procedure

To remove and replace the LAN interface board

Removing the LAN Interface Board

Removing the GPIB Interface Board

To remove and replace the GPIB interface board

Disconnect these cables from the SVGA display board

Removing the Scope Interface and SVGA Display Boards

To separate the scope interface board and SVGA display board

To remove and replace the Option 200 sound card

To replace the card, reverse the removal procedure

Removing the Hard Disk Drive

To remove and replace the hard disk drive

To remove and replace the floppy disk drive

To remove and replace the motherboard

Removing the Floppy Disk Drive Screws

Removing the Motherboard Torx Screws

Disconnect these cables from the motherboard

Removing Power Supply Cables

To remove and replace the power supply

To install the fan, reverse this procedure

To remove and replace the fan

Removing Fan Fasteners

Removing the CPU

To remove and replace the CPU

To remove and replace RAM SIMMs or SDRAM DIMMs

Removing and replacing SIMMs on the AMI motherboard

Removing RAM SIMMs

Do Not Remove the Attenuators from the Acquisition Board

To remove and replace an attenuator

If You Permanently Replace Parts

Removing an Attenuator

Self Test

To reset the attenuator contact counter

Repeat for each channel you need to change

Attenuator Relay Actuations Setup

In the Attenuator Channel box, select the channel you are changing

To remove the acquisition hybrid

To remove and replace an acquisition hybrid

To replace the acquisition hybrid

Do Not Use Excessive Force

Hybrid Connector

Removing the Hybrid Connector

Replaceable Parts

Ordering Replaceable Parts

Listed Parts

Unlisted Parts

Direct Mail Order System

Plug Type Cable Plug Description Length Color Country In/cm

Power Cables and Plug Configurations

Exploded Views

MP61 MP17 MP62 or MP63 MP15 and MP49

Front Frame and Front Panel

Fan and Acquisition Assembly

H12 H24 H25 MP55 MP60 H11

Power Supply and PC Motherboard

A10 MP57

A4A1W24/25 A4A4

H23 A4A2

FIC VA-503A Motherboard for PC Motherboard Configuration

W17W20 A15 W14 W11 A19 W16 A14 W24/25 W27 W22 A4A1

Sleeve and Accessory Pouch

MP14 MP52 MP70 MP72

MP12

H10 MP20 MP13 H19 H26 MP19

Attenuator Assembly

Replaceable Parts List

Agilent Part Des Number

QTY

DRAM-SIMM 2MX32 8 MB SIMM

DRAM-DIMM 16MX32 64 MB DIMM

Fans

Cables

Display BD. Jumper

Theory of Operation

Block-Level Theory

Power Supply Assembly

FPD Monitor Assembly

Acquisition System

Front Panel

Disk Drives

Attenuators

GPIB Interface Card

Probe Power and Control

SVGA Display Card

Acquisition Block Diagram

Attenuator Theory

Acquisition Theory

Acquisition Board

Acquisition Modes

Scope Interface Board

Supplementary Information

Manufacturer’s Name

Manufacturer’s Address

Product Regulations

Restricted Rights Legend

Product Warranty