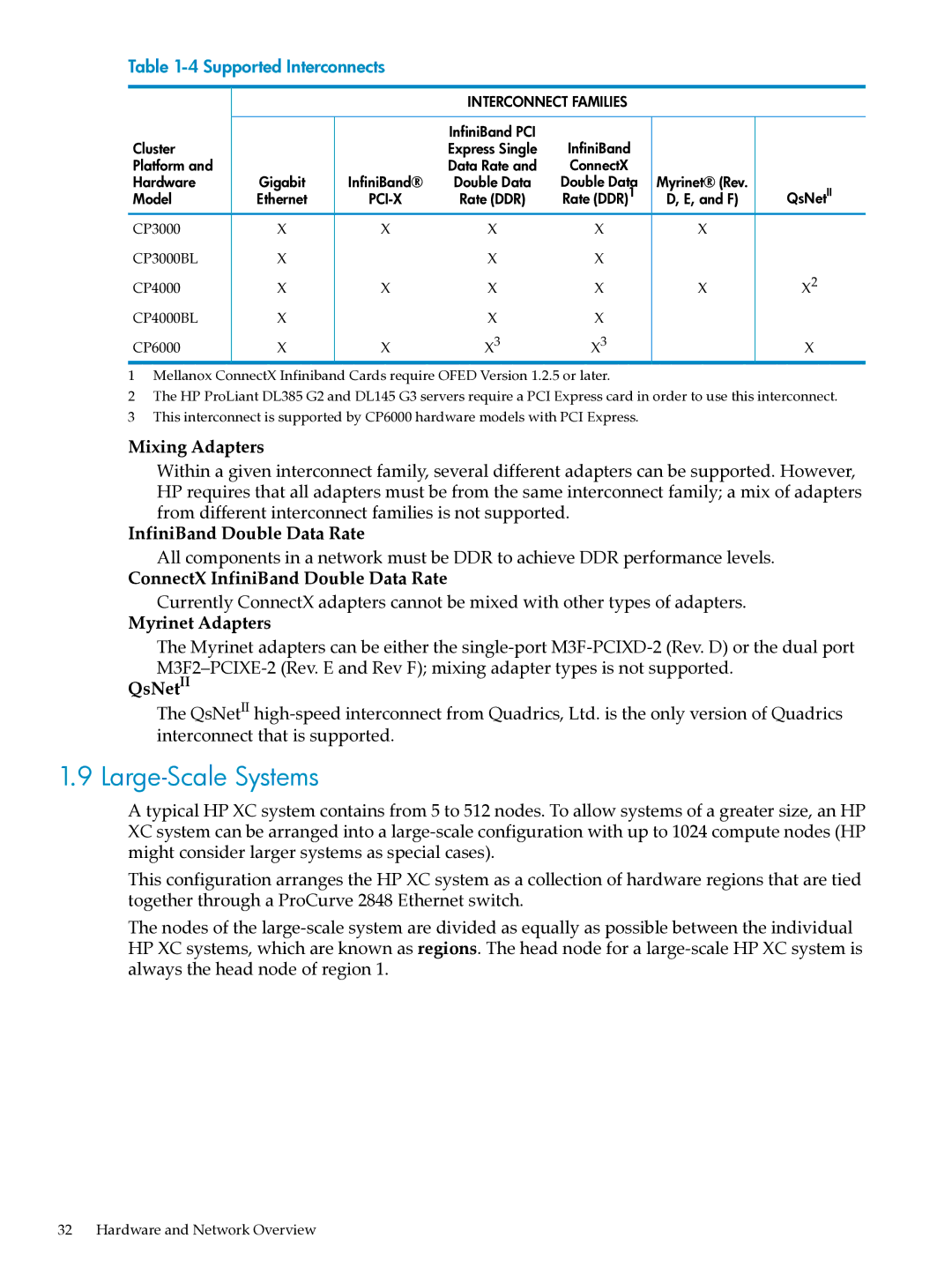

Table 1-4 Supported Interconnects

The columns to the right of the hardware model are the interconnect families.

| Cluster Platform and Hardware Model | Gigabit Ethernet | InfiniBand® | InfiniBand PCI Express Single Data Rate and Double Data Rate (DDR) | InfiniBand ConnectX Double Data Rate (DDR)¹ | Myrinet® (Rev. D, E, and F) | QsNetII |
|---|---|---|---|---|---|---|
| CP3000 | X | X | X | X | X | |
| CP3000BL | X | | X | X | | |
| CP4000 | X | X | X | X | X | X² |
| CP4000BL | X | | X | X | | |
| CP6000 | X | X | X³ | X³ | | X |

¹ Mellanox ConnectX InfiniBand cards require OFED Version 1.2.5 or later.

² The HP ProLiant DL385 G2 and DL145 G3 servers require a PCI Express card to use this interconnect.

³ This interconnect is supported by CP6000 hardware models with PCI Express.

Mixing Adapters

Within a given interconnect family, several different adapters can be supported. However, HP requires that all adapters be from the same interconnect family; a mix of adapters from different interconnect families is not supported.

InfiniBand Double Data Rate

All components in a network must be DDR to achieve DDR performance levels.

ConnectX InfiniBand Double Data Rate

Currently ConnectX adapters cannot be mixed with other types of adapters.
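
The mixing rules stated so far (a single interconnect family, all-DDR components for DDR performance, and no mixing of ConnectX adapters with other adapter types) can be sketched as a small planning check. This is only an illustration, not an HP tool: the `Adapter` record, its field names, the family and model labels, and both function names are assumptions made for this example, and only adapters are modeled even though the DDR rule applies to every component in the network.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Adapter:
    # All field names and label values are hypothetical, chosen for illustration.
    family: str        # interconnect family, e.g. "InfiniBand" or "Myrinet"
    model: str         # adapter type within the family, e.g. "ConnectX"
    ddr: bool = False  # True if the adapter runs at double data rate


def check_adapter_mix(adapters):
    """Return a list of rule violations; an empty list means no violation found."""
    problems = []

    # Rule: all adapters must come from the same interconnect family.
    families = {a.family for a in adapters}
    if len(families) > 1:
        problems.append(
            "adapters from different interconnect families: "
            + ", ".join(sorted(families))
        )

    # Rule: ConnectX adapters cannot be mixed with other adapter types.
    models = {a.model for a in adapters}
    if "ConnectX" in models and len(models) > 1:
        problems.append("ConnectX adapters mixed with other adapter types")

    return problems


def runs_at_ddr(components):
    """DDR performance requires every listed component to be DDR."""
    return bool(components) and all(c.ddr for c in components)
```

For example, a plan that pairs a ConnectX adapter with a Myrinet adapter would report both a family violation and a ConnectX mixing violation, while two ConnectX DDR adapters would pass both checks.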

Myrinet Adapters

The Myrinet adapters can be either the

QsNetII

The QsNetII

1.9 Large-Scale Systems

A typical HP XC system contains from 5 to 512 nodes. To allow systems of a greater size, an HP XC system can be arranged into a

This configuration arranges the HP XC system as a collection of hardware regions that are tied together through a ProCurve 2848 Ethernet switch.

The nodes of the
