tion addresses differ, and if they do not, inserting up to 8K bytes of padding between the arrays. This rule will avoid thrashing in

Usually, this padding will mean zero extra bytes in the executable image, just a skip in virtual address space to the

For large caches, the rule above should be applied to the

Both of the rules above can be satisfied simultaneously, thus often eliminating thrashing in all anticipated

A.3.4 Sequential Read/Write — Factor of 1

All other things being equal, sequences of consecutive reads or writes should use ascending (rather than descending) memory addresses. Where possible, the memory address for a block of 2**K bytes should be on a 2**K boundary, since this minimizes the number of different cache blocks used and minimizes the number of partially written cache blocks.
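The effect of alignment on the number of cache blocks used can be checked with a little arithmetic. The following C sketch is illustrative only: the 32-byte cache block size and the helper names `cache_blocks_touched` and `align_up_pow2` are assumptions made for this example, not values or interfaces defined by the architecture.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed cache block size in bytes; real implementations vary. */
#define CACHE_BLOCK_BYTES 32u

/* Number of distinct cache blocks covered by `size` bytes starting at `addr`. */
size_t cache_blocks_touched(uintptr_t addr, size_t size)
{
    if (size == 0)
        return 0;
    uintptr_t first = addr / CACHE_BLOCK_BYTES;
    uintptr_t last = (addr + size - 1) / CACHE_BLOCK_BYTES;
    return (size_t)(last - first + 1);
}

/* Round `addr` up to the next 2**k boundary (hypothetical helper). */
uintptr_t align_up_pow2(uintptr_t addr, unsigned k)
{
    assert(k < sizeof(uintptr_t) * 8);
    uintptr_t mask = ((uintptr_t)1 << k) - 1;
    return (addr + mask) & ~mask;
}
```

Under these assumptions, a 64-byte block (K = 6) starting at address 16 covers three cache blocks, two of them only partially. After rounding the start address up to a 64-byte boundary with `align_up_pow2(16, 6)`, the same block covers exactly two cache blocks, both of them completely.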

To avoid overrunning memory bandwidth, sequences of more than eight quadword load or store instructions should be broken up with intervening instructions (if there is any useful work to be done).

For consecutive reads, implementors should give first priority to prefetching ascending cache blocks and second priority to absorbing up to eight consecutive quadword load instructions (aligned on a

For consecutive writes, implementors should give first priority to avoiding read overhead for fully written aligned cache blocks and second priority to absorbing up to eight consecutive quadword store instructions (aligned on a

A.3.5 Prefetching — Factor of 3

Prefetching can be directed toward a cache block (a cache line) in the primary cache.
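From a high-level language, a cache block prefetch is usually requested through a compiler facility rather than written by hand. The sketch below assumes a GCC- or Clang-compatible compiler and uses its `__builtin_prefetch` builtin, which the compiler may lower to a prefetching load on targets that support one. The function name `sum_with_prefetch` and the prefetch distance of 16 elements are hypothetical choices for illustration, not recommendations from this manual.

```c
#include <stddef.h>

/* Assumed prefetch distance in elements; the best value is machine dependent. */
#define PREFETCH_AHEAD 16

/* Sum an array in ascending address order, hinting at data needed soon. */
long sum_with_prefetch(const long *data, size_t n)
{
    long total = 0;
    for (size_t i = 0; i < n; i++) {
        /* Second argument 0 = prefetch for read; third argument 3 = keep in cache. */
        if (i + PREFETCH_AHEAD < n)
            __builtin_prefetch(&data[i + PREFETCH_AHEAD], 0, 3);
        total += data[i];
    }
    return total;
}
```

The prefetch is only a hint: the result of the function is the same whether or not the hardware acts on it, so the hint can be tuned or removed without affecting correctness.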

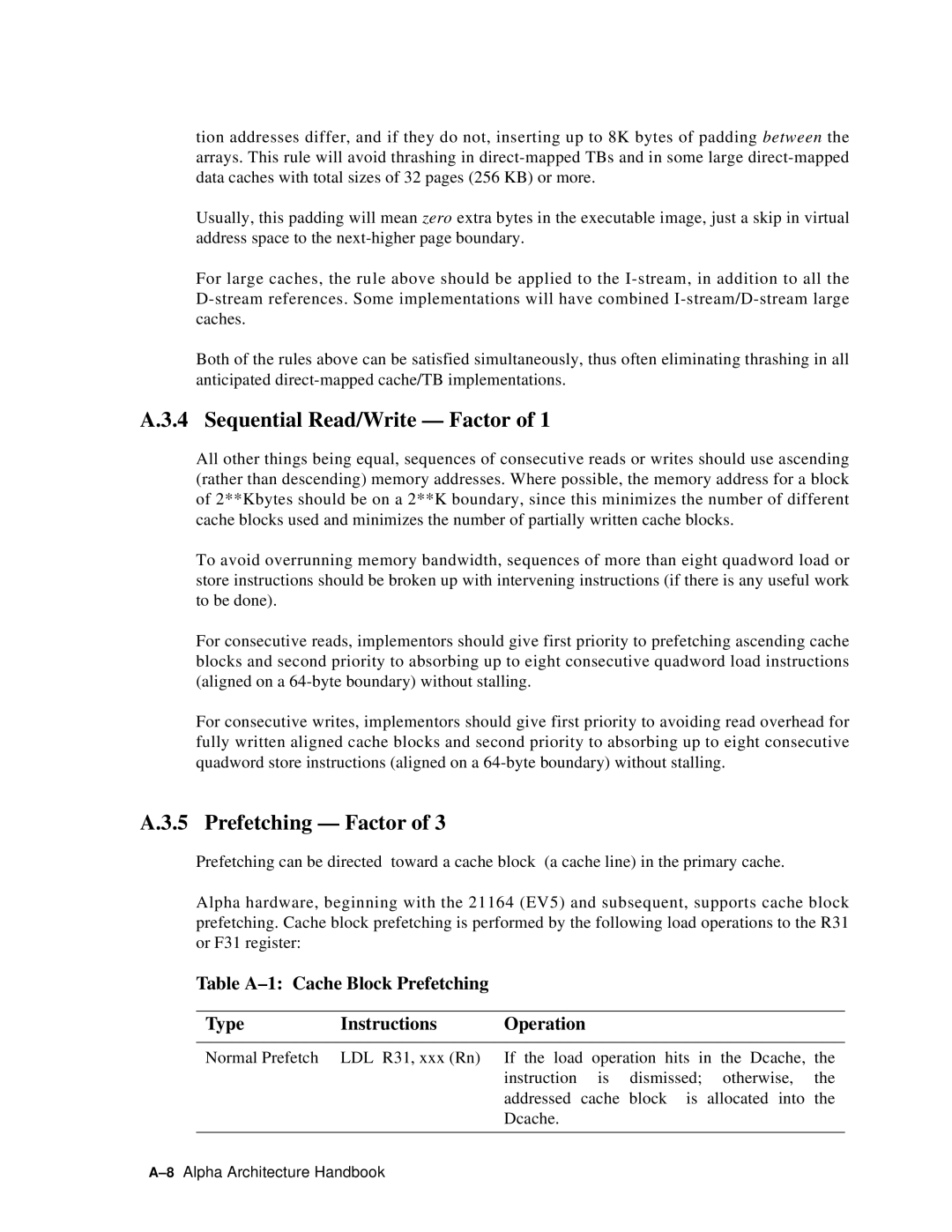

Alpha hardware, beginning with the 21164 (EV5), supports cache block prefetching. Cache block prefetching is performed by the following load operations to the R31 or F31 register:

Table

| Type | Instructions | Operation |
|---|---|---|
| Normal Prefetch | LDL R31, xxx(Rn) | If the load operation hits in the Dcache, the instruction is dismissed; otherwise, the addressed cache block is allocated into the Dcache. |