CACHE SUBSYSTEMS

[Figure content recovered from the diagram: the 32-bit processor address is divided into a cache/DRAM select field (bits 31-24), a 22-bit tag (bits 23-2), and a byte select field (bits 1-0). The cache pairs a 2816-bit tag SRAM (22-bit tags) with a 4096-bit data SRAM (4-byte blocks) in front of a 16-megabyte DRAM addressed by 24 bits. Example cache entries (tag, data): FFFFFC 24682468; 000000 12345678; FFFFF4 33333333; 16339C 87654321; FFFFF8 11223344. Each entry mirrors the contents of the DRAM location with the same address.]

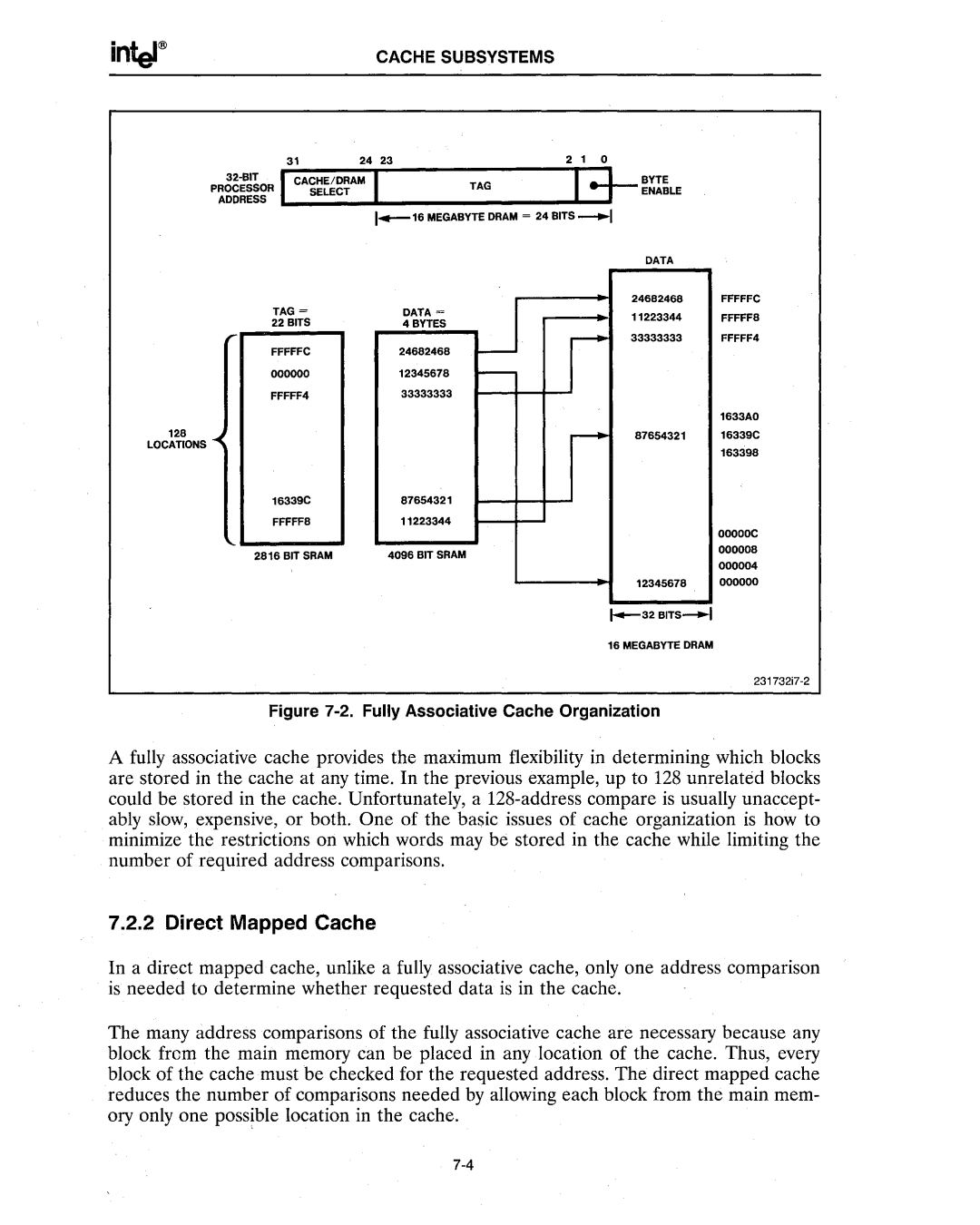

Figure 7-2. Fully Associative Cache Organization
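The address fields drawn in Figure 7-2 can be illustrated with a short sketch. The function name `split_address` and the sample address are hypothetical, chosen only for illustration; the bit positions follow the figure (bits 31-24 cache/DRAM select, bits 23-2 tag, bits 1-0 byte select).

```python
def split_address(addr):
    """Split a 32-bit processor address into the fields of Figure 7-2."""
    select = (addr >> 24) & 0xFF      # bits 31-24: cache/DRAM select
    tag = (addr >> 2) & 0x3FFFFF      # bits 23-2: 22-bit tag
    byte_select = addr & 0x3          # bits 1-0: byte within the 4-byte block
    return select, tag, byte_select


# Hypothetical example: byte 2 of the block stored at DRAM address 16339C.
select, tag, byte_select = split_address(0x0016339E)
block_address = tag << 2              # tag with the two byte-select bits cleared
print(f"select={select:02X} block={block_address:06X} byte={byte_select}")
```

The figure lists each tag as the block's address with the two low bits cleared, which is what `block_address` reproduces here.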

A fully associative cache provides the maximum flexibility in determining which blocks are stored in the cache at any time. In the previous example, up to 128 unrelated blocks could be stored in the cache. Unfortunately, a

7.2.2 Direct Mapped Cache

In a direct mapped cache, unlike a fully associative cache, only one address comparison is needed to determine whether requested data is in the cache.

The many address comparisons of the fully associative cache are necessary because any block from the main memory can be placed in any location of the cache. Thus, every block of the cache must be checked for the requested address. The direct mapped cache reduces the number of comparisons needed by allowing each block from the main memory only one possible location in the cache.
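The difference in comparison count can be sketched in software. This is a minimal model, not a description of any particular hardware: the class names, the oldest-first eviction in the fully associative model, and the choice of the low 7 bits of the block number as the direct mapped index are all assumptions made for illustration. The 128-block size and the sample data come from the fully associative example. The returned count stands in for the number of tag comparisons, which hardware would typically perform in parallel.

```python
NUM_BLOCKS = 128  # cache size in blocks, as in the 128-block example


def block_number(addr):
    """Drop the cache/DRAM select byte and the two byte-select bits."""
    return (addr & 0xFFFFFF) >> 2


class FullyAssociativeCache:
    def __init__(self):
        self.entries = []  # (tag, data) pairs; any block may occupy any slot

    def store(self, addr, data):
        tag = block_number(addr)
        self.entries = [(t, d) for (t, d) in self.entries if t != tag]
        if len(self.entries) == NUM_BLOCKS:
            self.entries.pop(0)  # assumed policy: evict the oldest entry
        self.entries.append((tag, data))

    def lookup(self, addr):
        tag = block_number(addr)
        comparisons = len(self.entries)  # every stored tag must be checked
        for stored_tag, data in self.entries:
            if stored_tag == tag:
                return data, comparisons
        return None, comparisons


class DirectMappedCache:
    def __init__(self):
        self.lines = [None] * NUM_BLOCKS  # one fixed line per index value

    def store(self, addr, data):
        block = block_number(addr)
        # Assumed mapping: low bits pick the line, high bits form the tag.
        self.lines[block % NUM_BLOCKS] = (block // NUM_BLOCKS, data)

    def lookup(self, addr):
        block = block_number(addr)
        line = self.lines[block % NUM_BLOCKS]
        hit = line is not None and line[0] == block // NUM_BLOCKS
        return (line[1] if hit else None), 1  # always a single comparison


# Sample contents taken from Figure 7-2.
figure_data = {0xFFFFFC: 0x24682468, 0x000000: 0x12345678,
               0xFFFFF4: 0x33333333, 0x16339C: 0x87654321,
               0xFFFFF8: 0x11223344}
fa, dm = FullyAssociativeCache(), DirectMappedCache()
for addr, data in figure_data.items():
    fa.store(addr, data)
    dm.store(addr, data)

fa_result = fa.lookup(0x16339C)  # hit, after checking all 5 stored tags
dm_result = dm.lookup(0x16339C)  # hit, after checking 1 tag
```

In this model, storing a block at address 000200 in the direct mapped cache would replace the block from address 000000, because both map to line 0; the fully associative model could hold both at once.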